When migrating from an on-premises DC to VMware Cloud on AWS, it is usually recommended to use Hybrid Cloud Extension (HCX) from VMware. However, in some cases the IT team managing the on-prem DC is already using Veeam for backup and wants to use the same solution for the migration as well.

They may also prefer Veeam over HCX because HCX often requires professional services assistance for setup and migration planning. In addition, since HCX is primarily a migration tool, the customer is unlikely to have set it up before, and while it is an excellent tool, there is a learning curve to get started.

Migrating with Veeam vs. Migrating with HCX

| Veeam Backup & Replication | VMware Hybrid Cloud Extension (HCX) |
|---|---|
| Licensed (non-free) solution | Free with VMware Cloud on AWS |
| Arguably easy to set up and configure | Arguably challenging to set up and configure |
| Can do offline migrations of VMs, single or in bulk | Can do online migrations (no downtime), offline migrations, bulk migrations and online migrations in bulk (RAV), etc. |
| Cannot do L2 extension | Can do L2 extension of VLANs if they are connected to a vDS |
| Can be used for backup of VMs after they have been migrated | Is primarily used for migration. Does not have backup functionality |
| Support for migrating from older on-prem vSphere environments | At time of writing, full support for on-prem vSphere 6.5 or newer. Limited support for vSphere 6.0 up to March 12th 2023 |

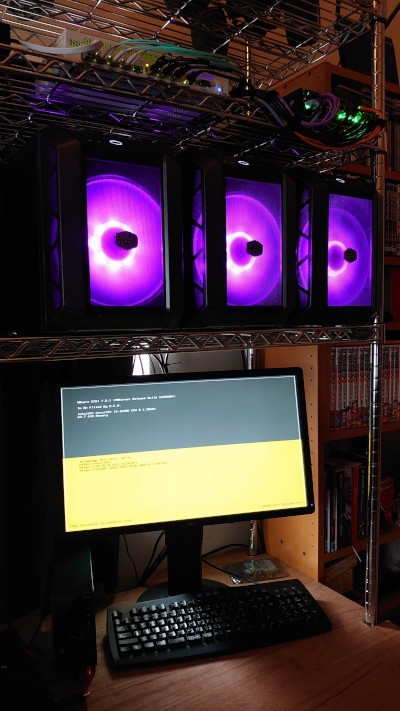

What we are building

This guide covers installing and configuring a single Veeam Backup & Replication installation in the on-prem VMware environment and linking it to both the on-prem vCenter and the vCenter in VMware Cloud on AWS. Finally, we do an offline migration of a VM to the cloud to prove that it works.

Prerequisites

The guide assumes the following is already set up and available:

- On-premises vSphere environment with admin access (7.0 used in this example)

- Windows Server VM to be used for Veeam install

- Min spec here

- Windows Server 2019 was used for this guide

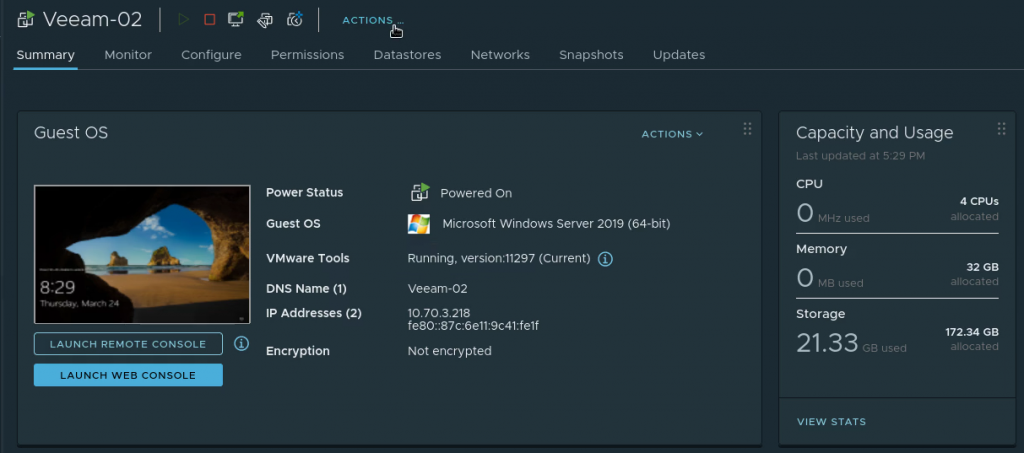

- Note: I initially used 2 vCPU, 4 GB RAM and a 60 GB HDD for my Veeam VM, but during the first migration the job stalled and wouldn’t finish. After changing to 4 vCPU, 32 GB RAM and a 170 GB HDD, the migration finished quickly and with no errors. I recommend assigning as many resources as is practical to the Veeam VM to speed up the migration

- One VMware Cloud on AWS (VMC) Software Defined Datacenter (SDDC)

- Private IP connectivity to the VMC SDDC

- Use Direct Connect (DX) or VPN but it must be private IP connectivity or it won’t work

- For this setup I used a VPN to an AWS Transit Gateway (TGW), then a peering to a VMware Transit Connect (VTGW) which had an attachment to the SDDC, but any private connectivity setup will be OK

- A test VM to use for migration
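Before installing anything, it can be worth confirming that the machine that will run Veeam can actually reach the SDDC vCenter over the private link. The following is a minimal Python sketch, not part of the original setup, that attempts a TCP connection on port 443 (the HTTPS port vCenter listens on). The function name `can_connect` and the FQDN in the usage comment are hypothetical placeholders.

```python
import socket


def can_connect(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection resolves the name and opens the socket in one step.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts alike.
        return False


# Usage (placeholder FQDN, replace with your own SDDC vCenter name):
# print(can_connect("vcenter.sddc.example.com"))
```

A successful TCP connection only shows that the port is reachable. It does not prove that the traffic is going over the VPN or DX rather than the internet, so it complements the DNS check later in this guide rather than replacing it.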

Downloading and installing Veeam

Unless you already have a licensed copy, sign up for a trial license and then download Veeam Backup & Replication from here. Version 11.0.1.1216 was used in this guide.

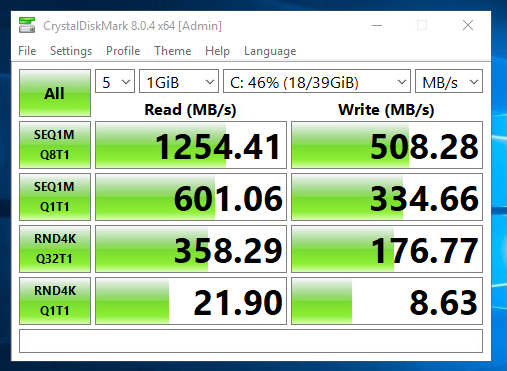

In your on-premises vSphere environment, create or select a Windows Server VM to use for the Veeam installation. The VM spec used for this install is as follows:

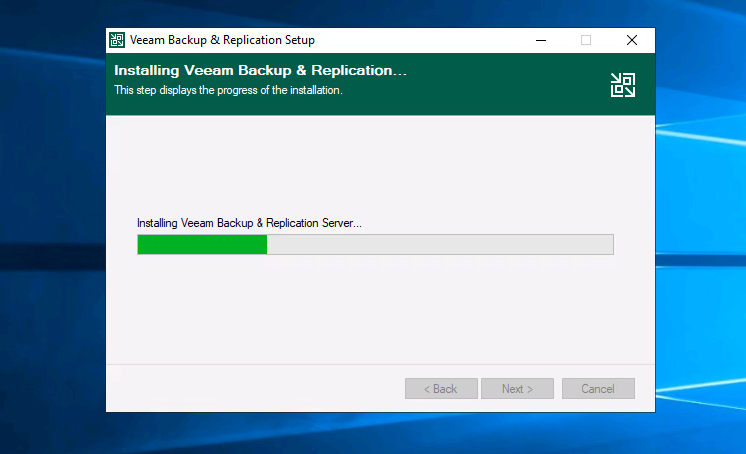

Run the install with default settings (next, next, next, etc.)

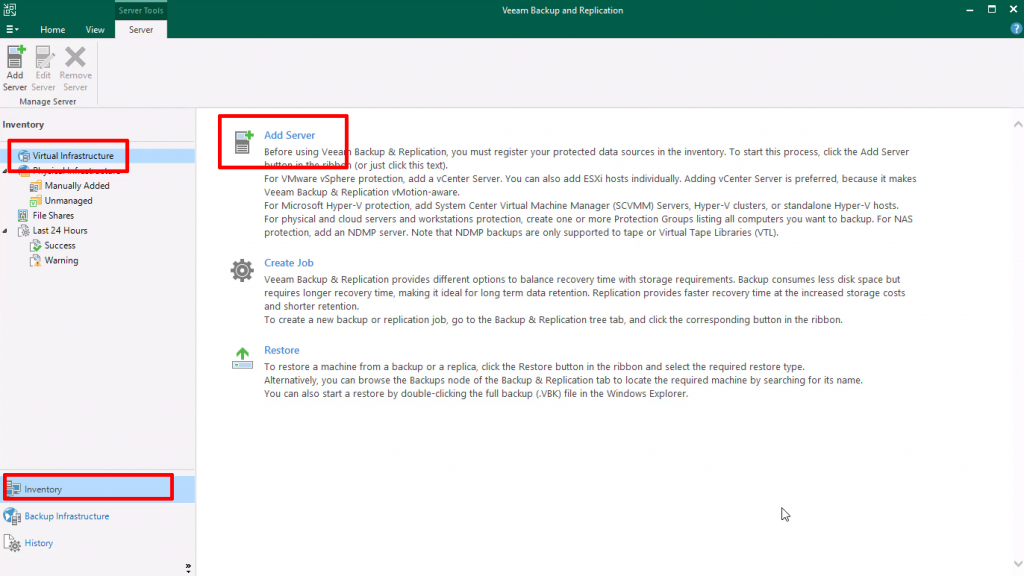

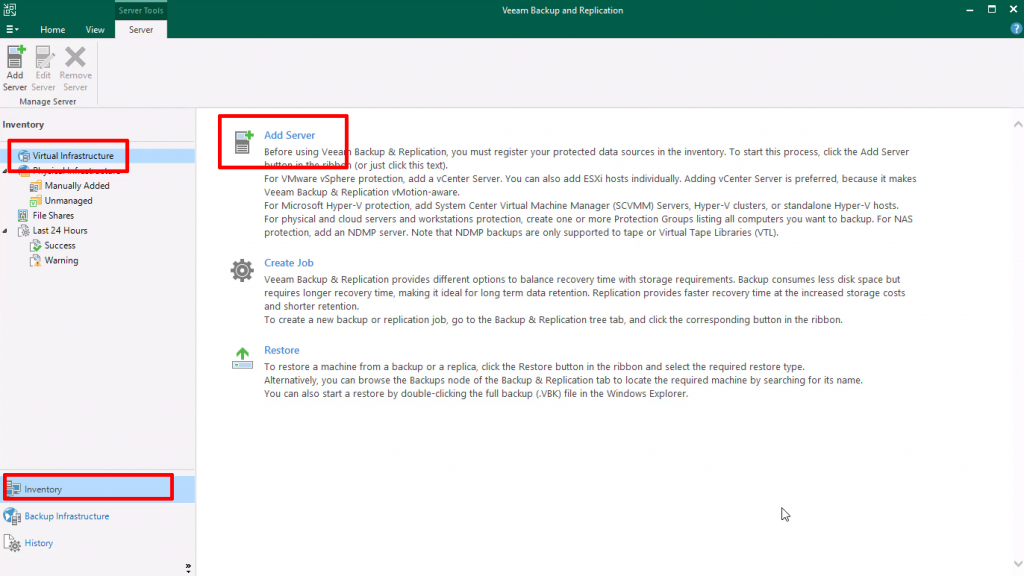

Register the on-prem vCenter in Veeam

Navigate to “Inventory” at the bottom left, then “Virtual Infrastructure” and click “Add Server” to register the on-prem vCenter server

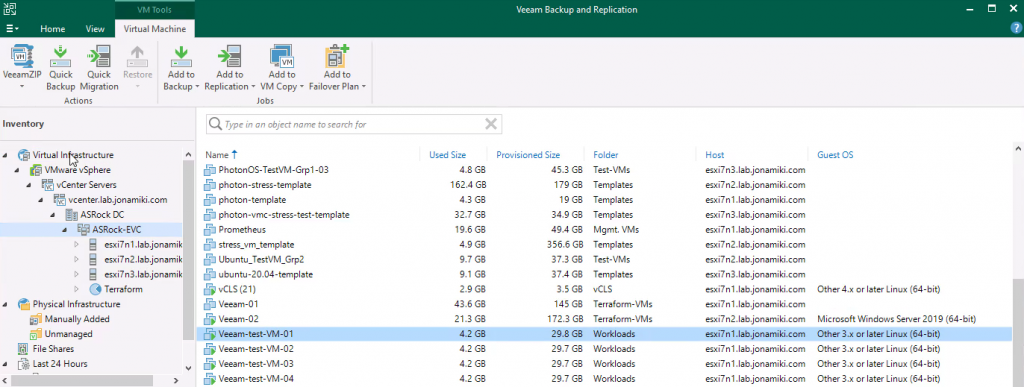

Listing VMs in the on-prem vSphere environment after the vCenter server has been registered in the Veeam Backup & Replication console

Switching on-prem connectivity to VMware Cloud on AWS SDDC to use private IP addresses

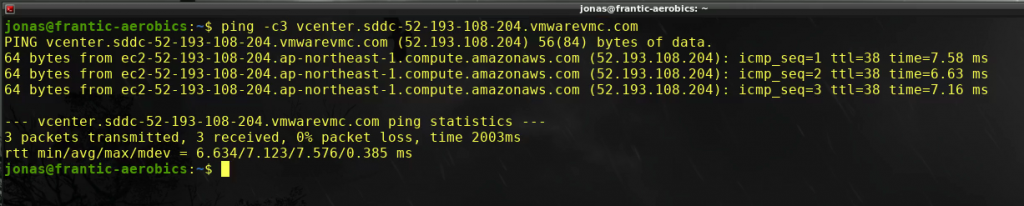

For this setup there is a VPN from the on-premises DC to the SDDC (via a TGW and VTGW in this case) but the SDDC FQDN is still configured to return the public IP address. Let’s verify by pinging the FQDN

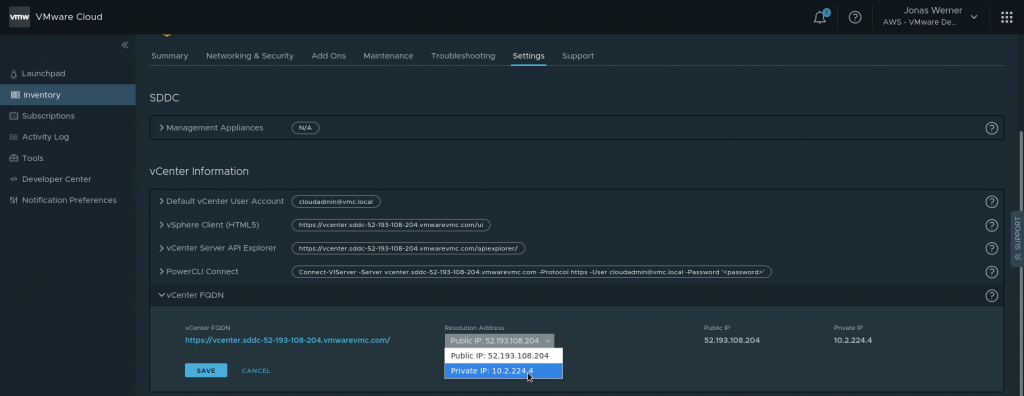

Switching the SDDC to return the private IP is easy. In the VMware Cloud on AWS web console, navigate to “Settings” and switch the IP address returned for the vCenter FQDN from public to private

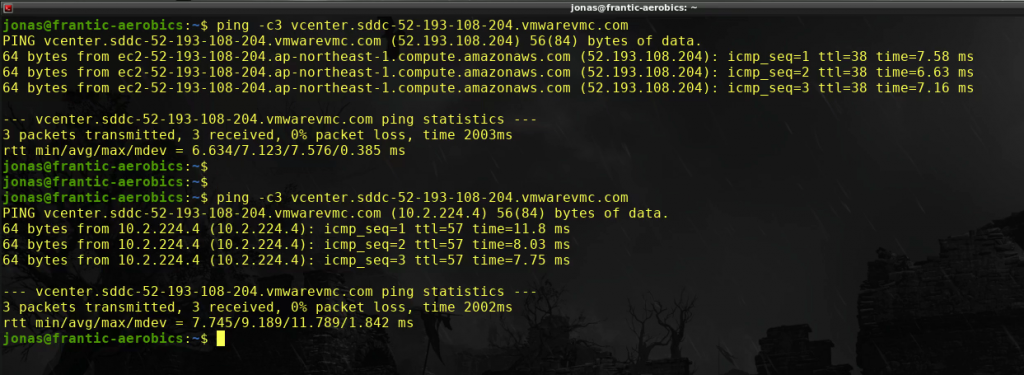

Ping the vCenter FQDN again to verify that the private IP is returned by DNS and that we can ping it successfully over the VPN

All looks good. The private IP is returned. Time to register the VMware Cloud on AWS vCenter instance in the Veeam console
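The ping check above can also be scripted, which is handy if you want to repeat it from several machines. The following is a rough sketch using only the Python standard library: it resolves a name to its IPv4 addresses and reports whether each one is private. The helper names `resolve_ipv4` and `is_private` and the FQDN in the usage comment are my own placeholders, not anything provided by VMware or Veeam.

```python
import ipaddress
import socket


def resolve_ipv4(fqdn: str) -> list[str]:
    """Return the sorted, de-duplicated IPv4 addresses that DNS returns for fqdn."""
    infos = socket.getaddrinfo(
        fqdn, 443, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


def is_private(address: str) -> bool:
    """True for RFC 1918 ranges such as 10.0.0.0/8.

    Note that ipaddress also counts loopback and link-local as private.
    """
    return ipaddress.ip_address(address).is_private


# Usage (placeholder FQDN, replace with your own SDDC vCenter name):
# for ip in resolve_ipv4("vcenter.sddc.example.com"):
#     print(ip, "private" if is_private(ip) else "PUBLIC")
```

If any address is reported as public, the FQDN is still resolving to the public IP (or a DNS cache has not expired yet) and the registration in the next step would not go over the private link.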

Registering the VMC vCenter instance with Veeam

Just use the same method as when adding the on-premises vCenter server: navigate to “Inventory” at the bottom left, then “Virtual Infrastructure” and click “Add Server” to register the VMware Cloud on AWS vCenter server with Veeam

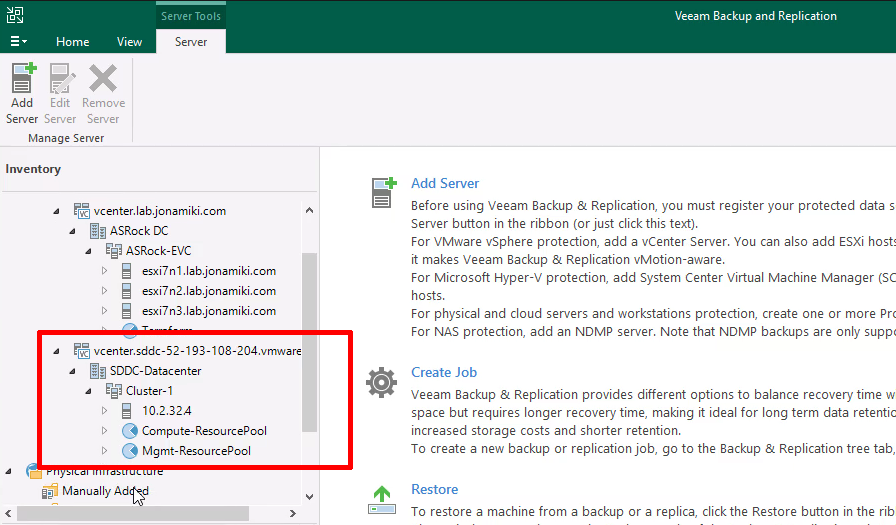

After adding the VMware Cloud on AWS SDDC vCenter the resource pools will be visible in the Veeam console

Now both vSphere environments are registered. Time to migrate a VM to the cloud!

Migrating a VM to VMware Cloud on AWS

Below are both a video and a series of screenshots describing the migration / replication job creation for the VM.

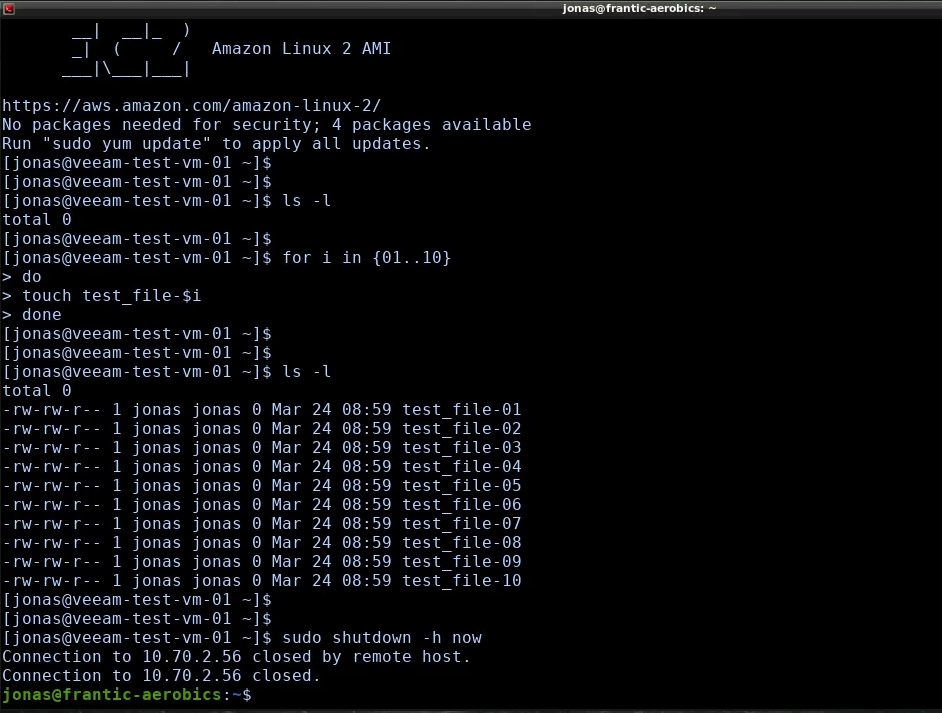

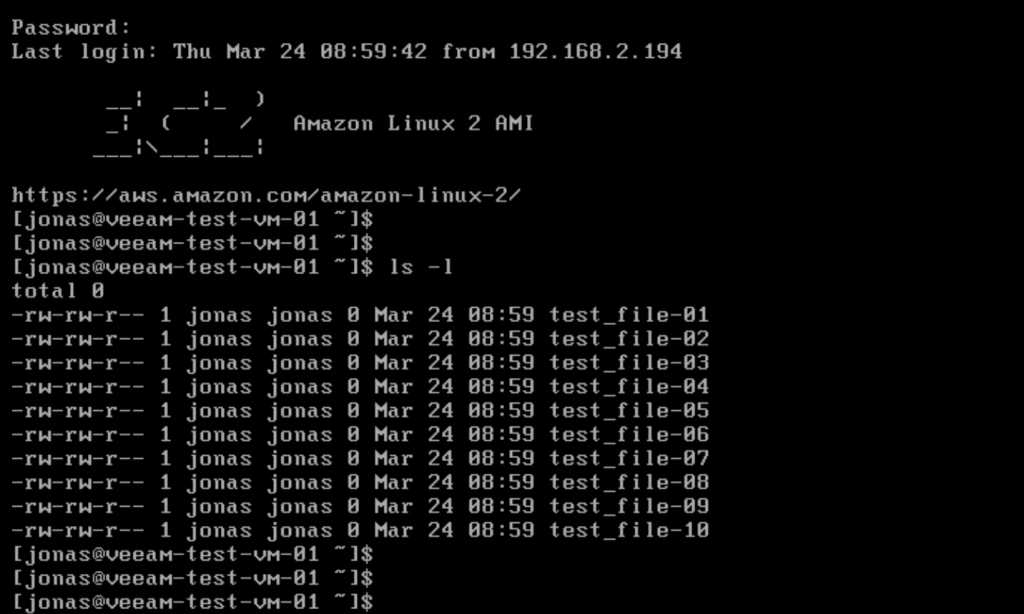

Creating some test files on the source VM to be migrated

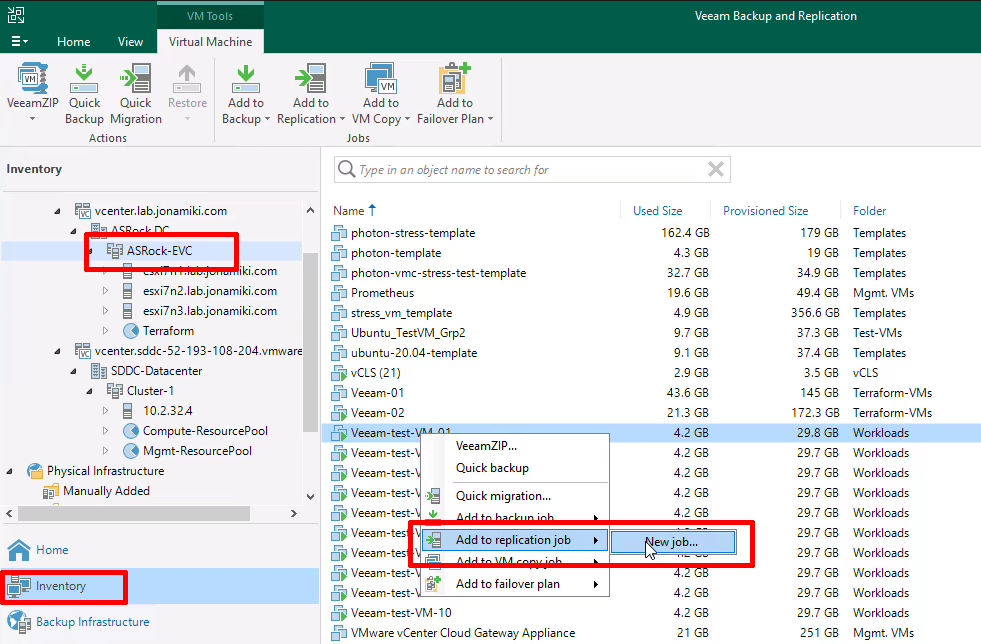

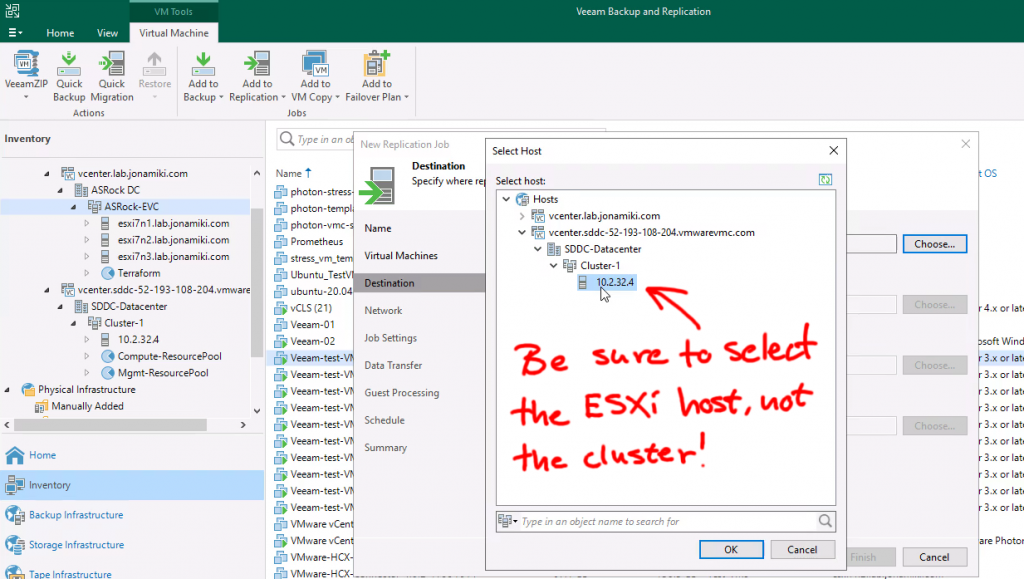

Navigate to “Inventory” using the bottom left menu, click the on-premises vCenter server / Cluster and locate a VM to migrate in the on-premises DC VM inventory. Right-click the VM to migrate and create a replication job

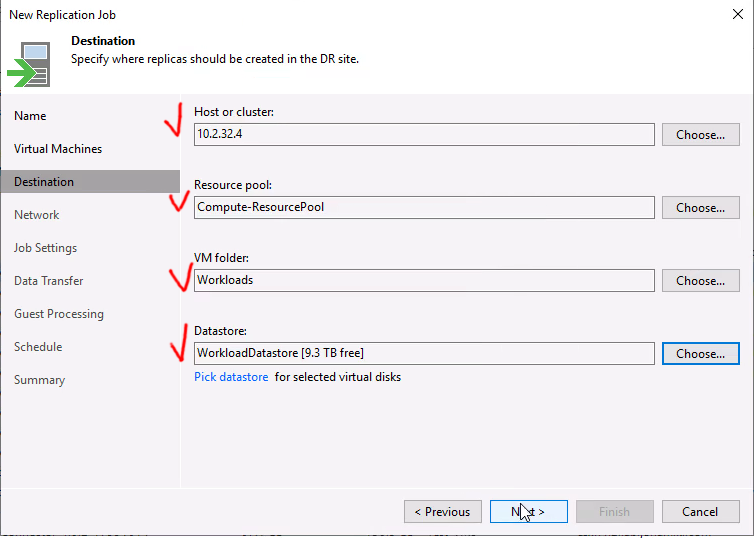

When selecting the target for the replication, be sure to expand the VMware Cloud on AWS cluster and select one of the ESXi servers. Selecting the cluster is not enough to list the required resources, such as storage volumes

Since VMC is a managed environment, we want to select the customer-side datastore, folder and resource pool (by default WorkloadDatastore, the Workloads folder and Compute-ResourcePool)

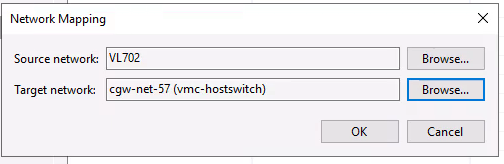

If you checked the box for remapping the network, it is even possible to select a target network segment for the VM to be connected to on the cloud side!

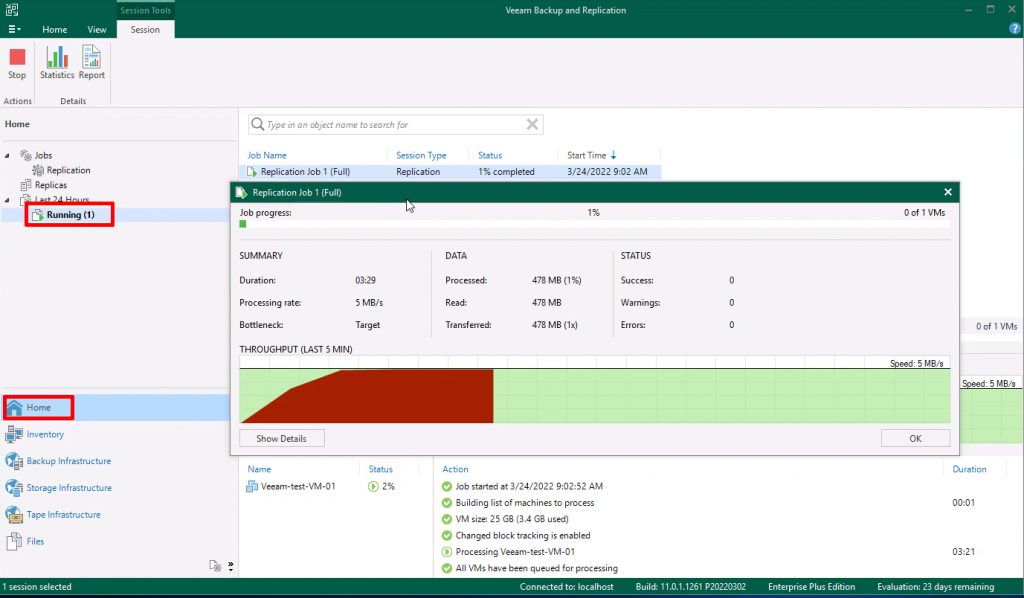

Select “Run the job when I click finish” and move to the “Home” tab to view the running jobs

The migration of the test VM finished in less than 9 minutes

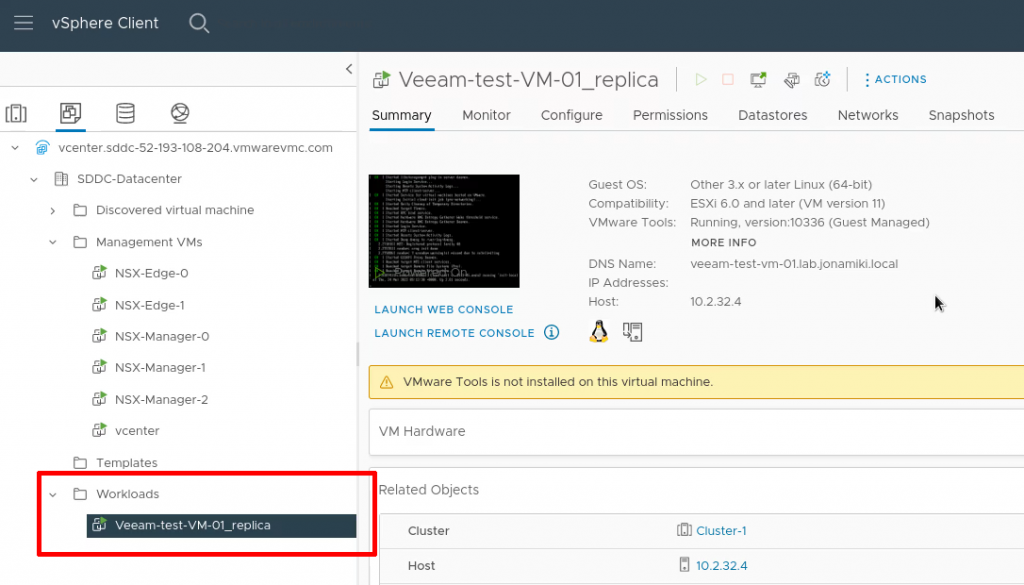

In the vCenter client for VMware Cloud on AWS we can verify that the replicated VM is present

After logging in and listing the files we can verify that the VM is not only working but also has the test files present in the home directory
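Listing the files shows they arrived, but not that their contents are unchanged. If you want a slightly stronger check, one option is to record a checksum for each test file before the migration and compare afterwards. The following is a minimal sketch of that idea, assuming Python is available on the test VM. The function names `build_manifest` and `diff_manifests` and the directory in the usage comment are hypothetical.

```python
import hashlib
from pathlib import Path


def build_manifest(directory: str) -> dict[str, str]:
    """Map each file's path (relative to directory) to its SHA-256 digest."""
    root = Path(directory)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # read_bytes loads the whole file, which is fine for small test files.
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[str(path.relative_to(root))] = digest
    return manifest


def diff_manifests(before: dict[str, str], after: dict[str, str]) -> list[str]:
    """Return the files from 'before' that are missing or changed in 'after'."""
    return sorted(name for name, digest in before.items() if after.get(name) != digest)


# Usage (placeholder directory):
# before = build_manifest("/home/user/testfiles")   # on the source VM; save it, e.g. as JSON
# after = build_manifest("/home/user/testfiles")    # on the migrated VM
# print(diff_manifests(before, after))              # an empty list means nothing changed
```

Since the replica carries the saved manifest along with the disk, keep a copy of the "before" manifest outside the VM as well if you want the comparison to be independent of the migration itself.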

Thank you for reading! Hopefully this has provided an easy-to-understand summary of the steps required for a successful migration / replication of VMs to VMC using Veeam