How to set up NAT and No-NAT networking with NC2 on AWS

Nutanix Cloud Clusters (NC2) on AWS supports native AWS networking, but it is also possible to deploy Flow overlay networking as part of the cluster creation process. Flow overlay networking enables many powerful features. One of those is the ability to create completely new Flow VPCs with subnets that use entirely different CIDR ranges from the native AWS VPC. This gives great flexibility in handling networking for Virtual Machines (VMs) running on NC2 on AWS.

When Flow overlay networking is used, VMs can communicate with the outside world in a few different ways. One is through NATed networking, where the overlay subnet that the VMs are connected to is internal to NC2 and is not visible from the AWS native side outside the cluster.

The other is to use No-NAT. In that case, the overlay subnet that the VMs connect to is added to the native AWS VPC route table. This makes it possible for entities in the native AWS VPC, or elsewhere, to access VMs on NC2, even though those VMs are connected to overlay networks with CIDR ranges that don’t exist on the AWS native side.

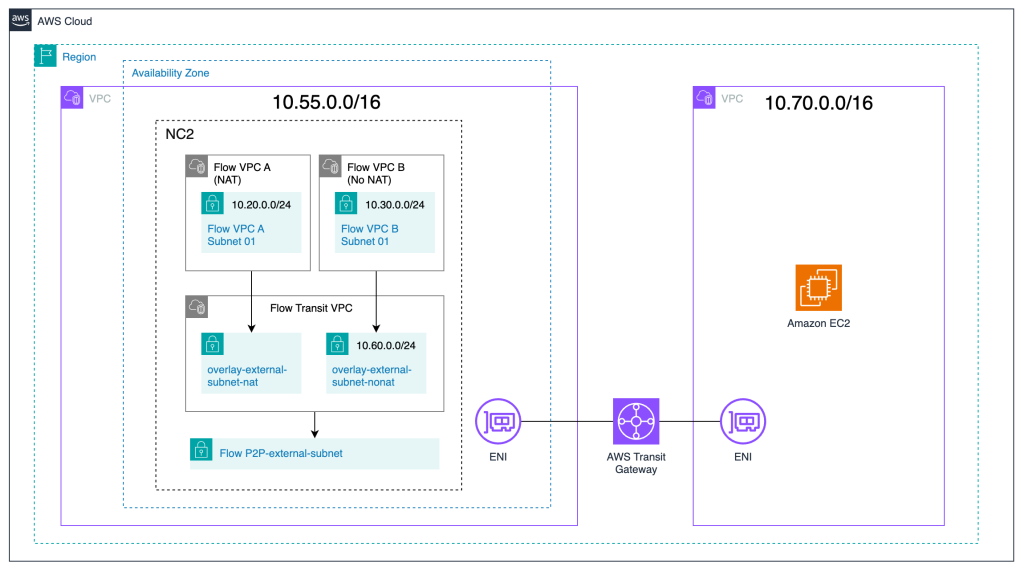

Architecture

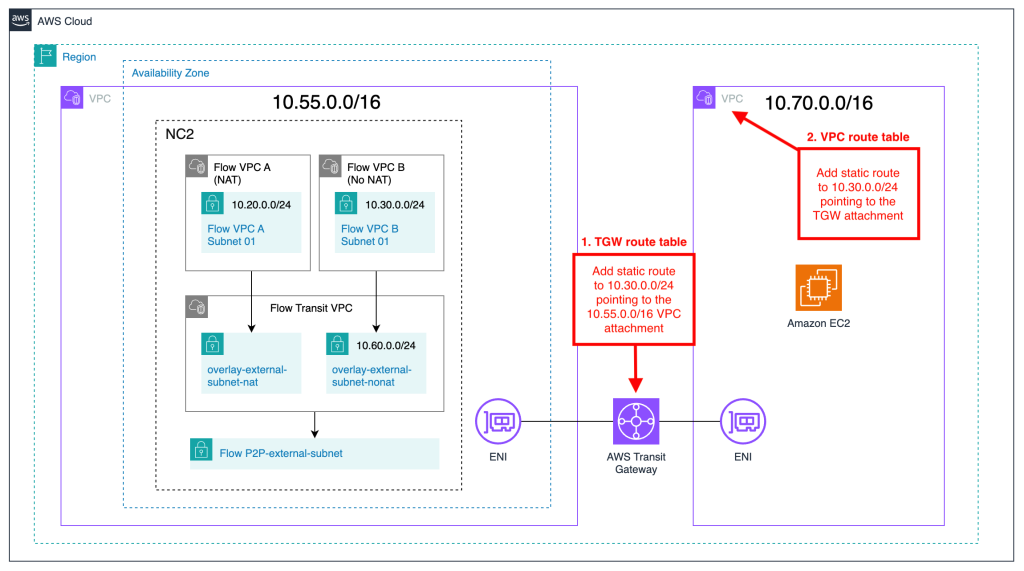

In this example, two Flow overlay VPCs are created with one subnet each. One is the NATed network with a CIDR range of 10.20.0.0/24 and the other is a No-NAT network with a CIDR range of 10.30.0.0/24.

The neighboring VPC with CIDR 10.70.0.0/16 is connected to the VPC holding the NC2 cluster via an Amazon Transit Gateway (TGW). A Windows EC2 instance will be used to verify connectivity once routing has been set up for the No-NAT network.
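Before creating anything, it can be worth confirming that the CIDR ranges in the plan do not collide, since the No-NAT range in particular ends up in AWS route tables. The following is a minimal sketch using Python's standard `ipaddress` module. The labels are illustrative, and the CIDR of the VPC holding the NC2 cluster is not listed because it is not stated in this example.

```python
import ipaddress
from itertools import combinations

# CIDR ranges taken from the example architecture; the labels are illustrative.
ranges = {
    "VPC A subnet (NAT)": "10.20.0.0/24",
    "VPC B subnet (No-NAT)": "10.30.0.0/24",
    "Neighboring AWS VPC": "10.70.0.0/16",
}


def find_overlaps(named_cidrs):
    """Return pairs of names whose CIDR ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in named_cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(nets, 2)
        if nets[a].overlaps(nets[b])
    ]


overlaps = find_overlaps(ranges)
print(overlaps or "no overlapping ranges")
```

In a real environment the dictionary would also include the NC2 VPC range and any on-premises networks that need to reach the cluster.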

Flow Transit VPC

To handle North-South connectivity for the workloads on NC2, a Flow Transit VPC is deployed as part of the cluster creation. It is a special VPC in that while it handles external connectivity for VMs on NC2, those VMs don’t connect to it directly. Instead, separate Flow VPCs are created for VM connectivity, and those VPCs are in turn attached to the Flow Transit VPC.

NAT network connectivity

In this section, a new Flow VPC called “VPC A” is created and attached to the Flow Transit VPC. VPC A will be used for VMs which use NATed communication with the outside world.

In Prism Central on NC2 on AWS, navigate to “Network and Security” and create a new VPC.

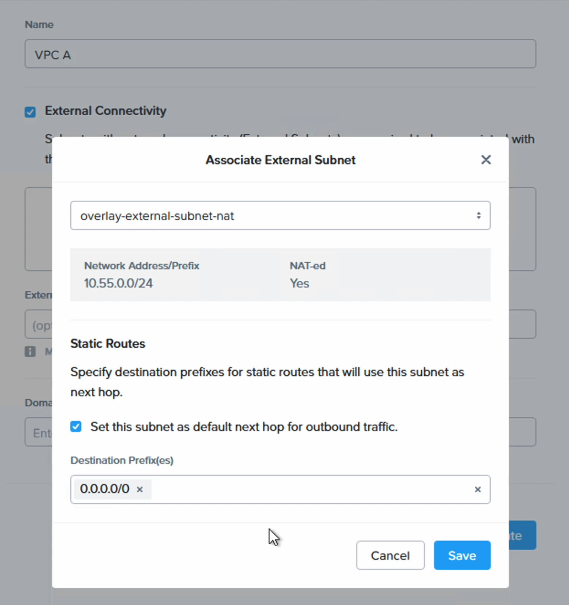

The VPC is given the name “VPC A” and for “External Connectivity” the already existing “overlay-external-subnet-nat” subnet is used. Check the box for using this subnet as the next hop / default route for all outbound traffic.

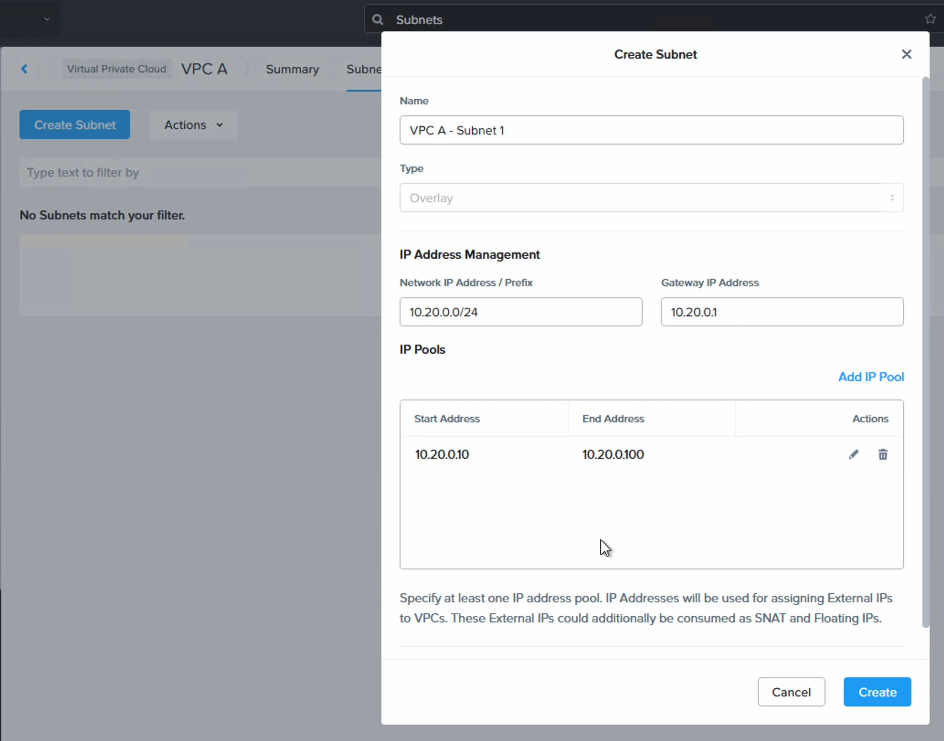

The VPC is now created but we also need a subnet for VMs to attach to. Click on “VPC A” and then “Create Subnet” from the “Subnet” tab. In this case the CIDR range 10.20.0.0/24 is used.

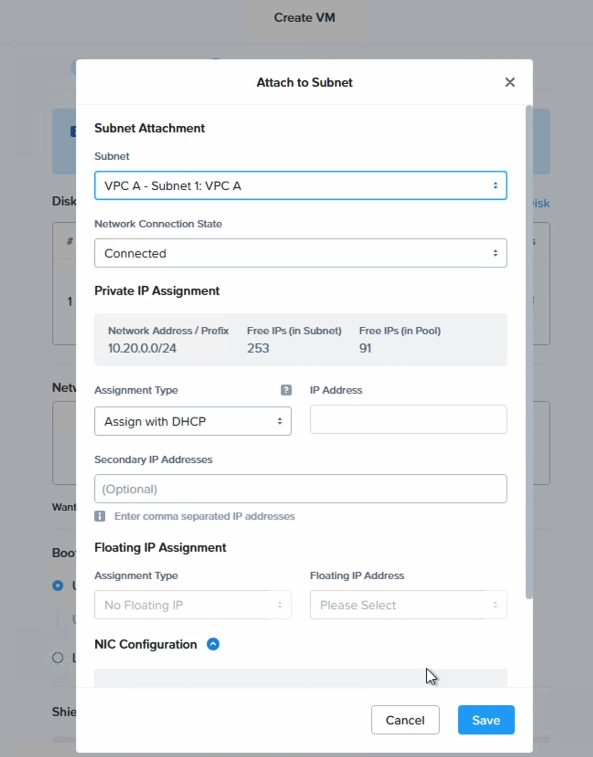

Now the NAT network configuration is complete. We can test connectivity by creating a new VM and attaching it to the new “VPC A – Subnet 1” NAT network.

In Prism Central, navigate to “VM” and create a new VM.

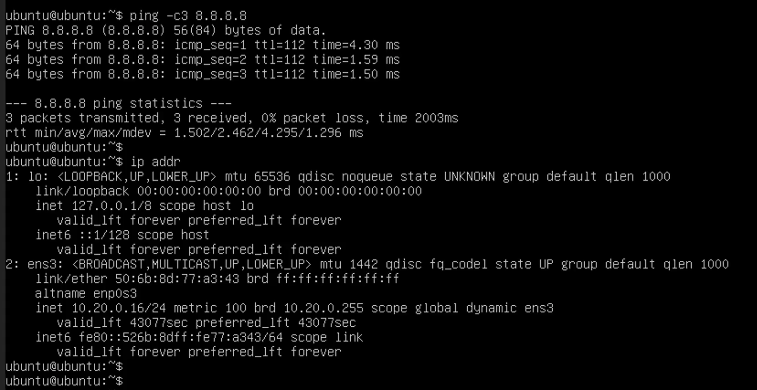

Once the VM is created, power it on and verify that it can connect to the outside world.

In this case we get an IP of “10.20.0.16” and can verify North-South connectivity by pinging a Google DNS server.

This concludes the NAT portion of the setup.

No-NAT network connectivity

For No-NAT connectivity, the steps are very similar to those just performed for the NATed portion. However, there are two additional steps to perform. The first is to create a new subnet in the Flow Transit VPC specifically for No-NAT connectivity. The second is to add the Flow overlay network CIDR range that we want to use for No-NAT as an Externally Routable Prefix, or ERP, to both the Flow Transit VPC and the Flow VPC we will create for No-NAT connectivity. The steps below show how to set this up.

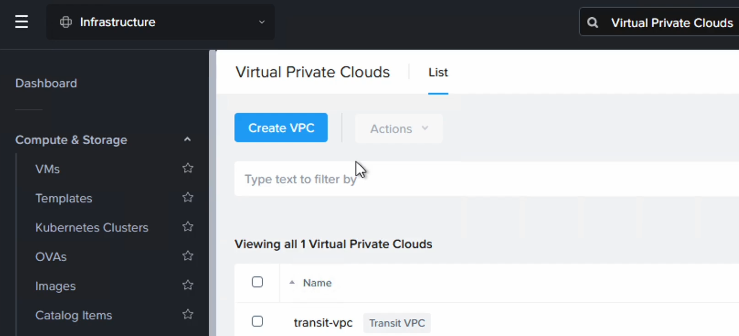

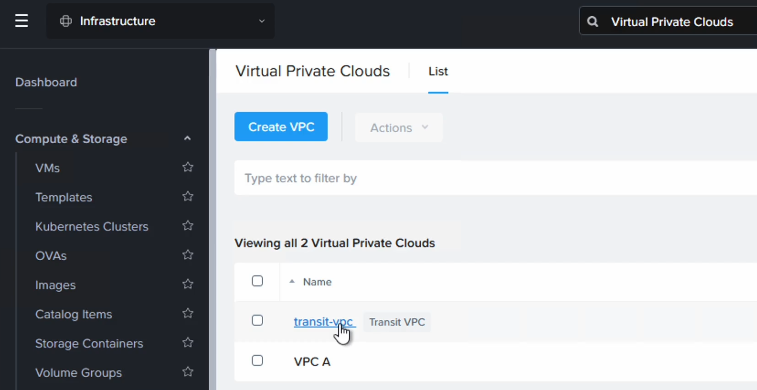

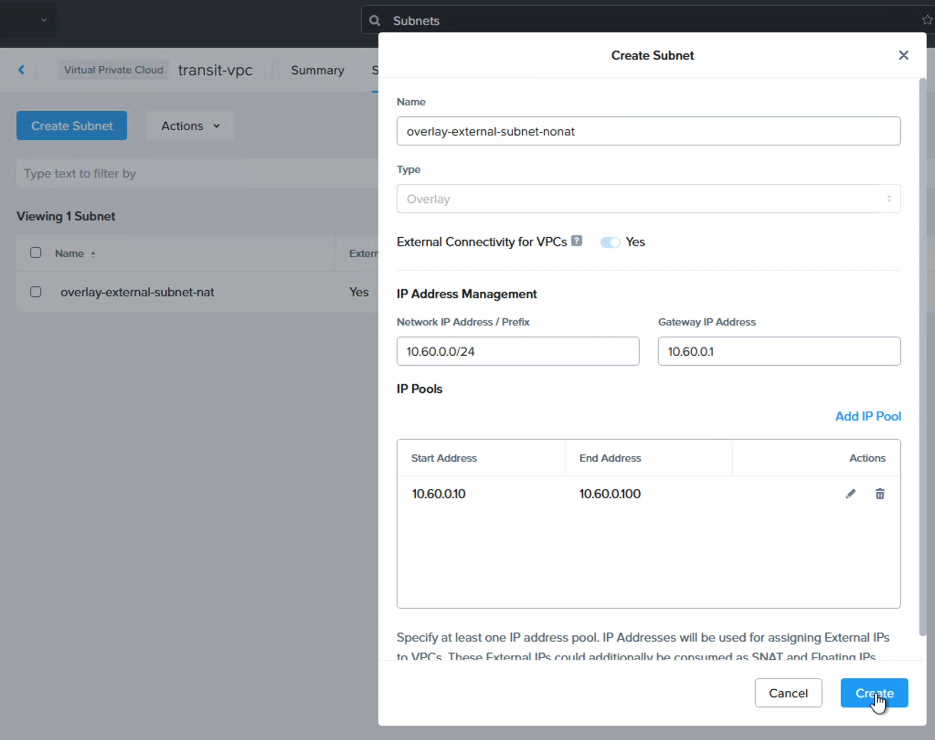

First we create a new subnet in the Flow Transit VPC and call it “overlay-external-subnet-nonat”. Navigate to “Network and Security”, select “Virtual Private Clouds” and click on the “transit-vpc”.

Go to the “Subnets” tab and select “Create Subnet”. Give it a name, like “overlay-external-subnet-nonat” and for IP address management, add any CIDR range which isn’t used elsewhere in your organization. This CIDR range will be used internally in NC2 but will not be routable or visible outside the cluster.
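Picking a range that "isn't used elsewhere" is easier with a small helper. The sketch below is only an illustration: `first_free_subnet` is a hypothetical helper, the `in_use` list contains just the ranges from this example, and the pool being searched is an arbitrary assumption rather than a recommended range.

```python
import ipaddress


def first_free_subnet(pool, in_use, new_prefix=24):
    """Return the first subnet of size new_prefix in pool that overlaps nothing in in_use."""
    used = [ipaddress.ip_network(cidr) for cidr in in_use]
    for candidate in ipaddress.ip_network(pool).subnets(new_prefix=new_prefix):
        if not any(candidate.overlaps(u) for u in used):
            return candidate
    return None  # every candidate in the pool collides with something


# Ranges from this example; a real inventory would list every range in the organization.
in_use = ["10.20.0.0/24", "10.30.0.0/24", "10.70.0.0/16"]

# Hypothetical pool to search; replace it with a block reserved for this purpose.
print(first_free_subnet("10.20.0.0/22", in_use))
```

With these inputs the helper skips 10.20.0.0/24, which is already taken, and returns 10.20.1.0/24.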

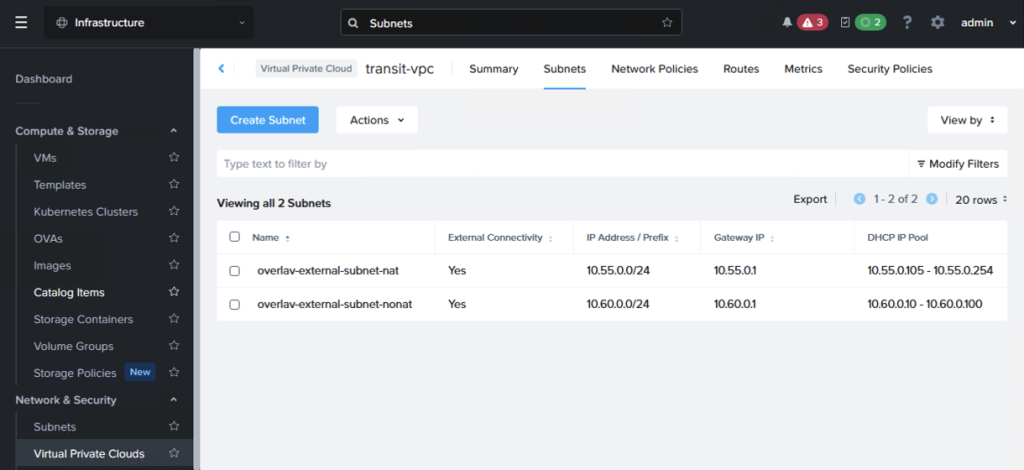

The “transit-vpc” will now have two subnets, like the below. The “overlay-external-subnet-nat” CIDR range will depend on the AWS native subnet NC2 has been deployed into.

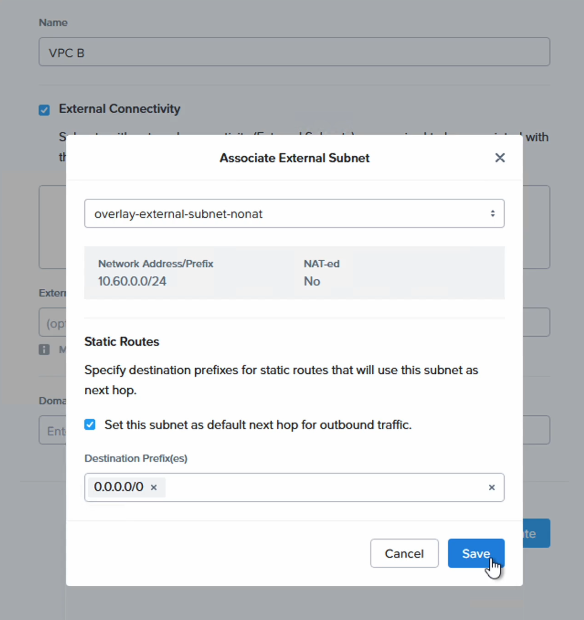

Next, create a new VPC, called “VPC B” in this example and for external connectivity use the newly created “overlay-external-subnet-nonat”. Note that “NAT-ed” is set to “No”.

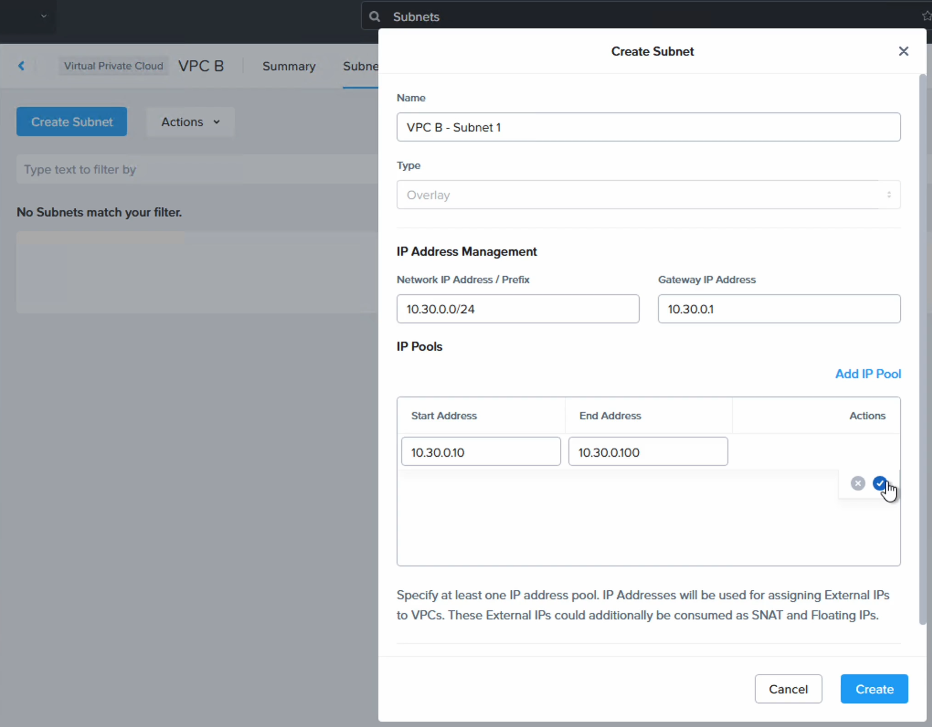

We also create a new subnet in that VPC so VMs have something to connect to. In this case we use a CIDR range of “10.30.0.0/24”.

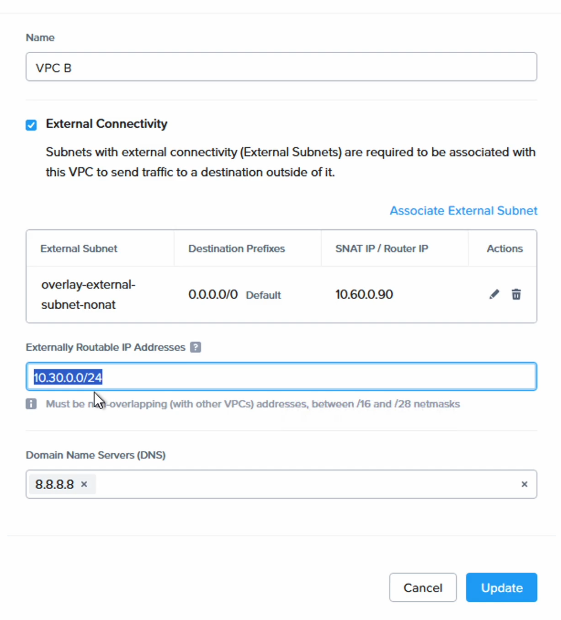

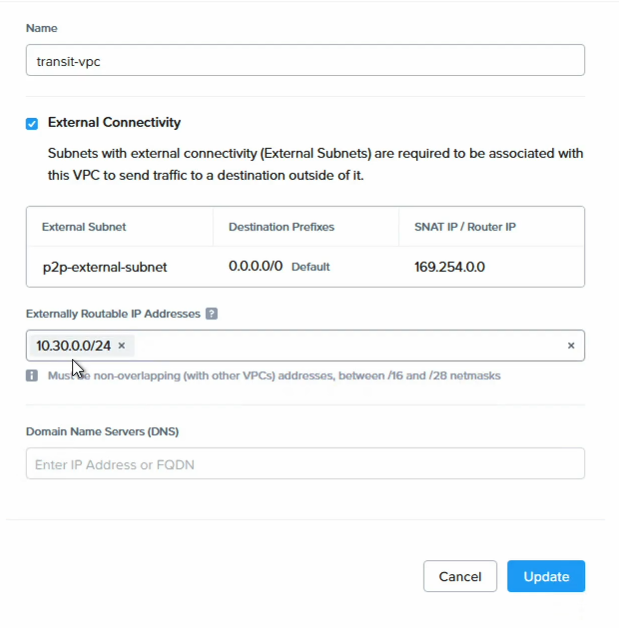

Finally, the most important point is to add the CIDR range of the newly created subnet to both the Flow Transit VPC and to VPC B as an ERP or Externally Routable Prefix.

Select “VPC B” and click “Update” to add “10.30.0.0/24” as the ERP.

Next, do the same for the “transit-vpc”.

That is all we need to do on the configuration side. Congratulations!

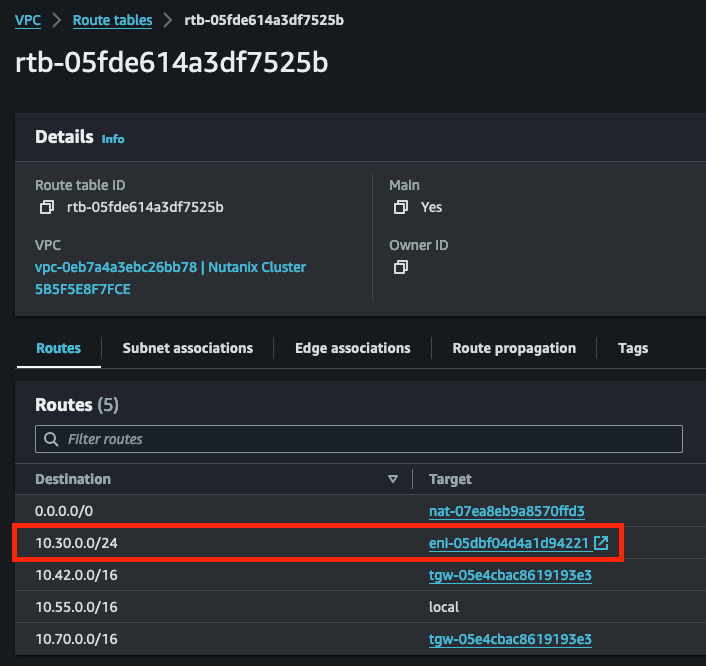
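Because the ERP has to be present on both VPCs, it is easy to forget one of them. The sketch below checks a hand-written snapshot of the ERP settings. It is not an API response, `missing_erp` is a hypothetical helper, and treating a subnet as covered when it falls inside any listed prefix is an assumption about how prefixes are matched.

```python
import ipaddress


def missing_erp(subnet_cidr, erps_by_vpc):
    """Return the VPC names whose ERP list does not cover subnet_cidr."""
    subnet = ipaddress.ip_network(subnet_cidr)
    return [
        vpc
        for vpc, erps in erps_by_vpc.items()
        if not any(subnet.subnet_of(ipaddress.ip_network(erp)) for erp in erps)
    ]


# Hand-written snapshot of the ERP settings from this example (not read from Prism Central).
erps_by_vpc = {
    "VPC B": ["10.30.0.0/24"],
    "transit-vpc": ["10.30.0.0/24"],
}

# An empty list means both VPCs carry the prefix.
print(missing_erp("10.30.0.0/24", erps_by_vpc))
```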

Verify that the new No-NAT network shows up in the native AWS route table

In the AWS console, go to the default route table of the VPC which NC2 is deployed into and verify that the “10.30.0.0/24” network has been automatically added to the route table. It will be pointing to the ENI (Elastic Network Interface) of the currently active NC2 bare-metal node.
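The same check can be scripted instead of done in the console. The sketch below assumes boto3 is installed and AWS credentials are configured when `fetch_route_tables` is called. The parsing helper `find_route` works on any dictionary shaped like a `describe_route_tables` response, and the sample data and IDs are made up for illustration.

```python
import ipaddress


def find_route(route_tables_response, cidr):
    """Return (route_table_id, route) pairs whose IPv4 destination equals cidr."""
    wanted = ipaddress.ip_network(cidr)
    matches = []
    for table in route_tables_response.get("RouteTables", []):
        for route in table.get("Routes", []):
            dest = route.get("DestinationCidrBlock")  # absent on IPv6 and prefix-list routes
            if dest and ipaddress.ip_network(dest) == wanted:
                matches.append((table["RouteTableId"], route))
    return matches


def fetch_route_tables(vpc_id):
    """Fetch the route tables of a VPC; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so find_route can be used without boto3

    ec2 = boto3.client("ec2")
    return ec2.describe_route_tables(
        Filters=[{"Name": "vpc-id", "Values": [vpc_id]}]
    )


# Made-up sample in the shape of a describe_route_tables response.
sample = {
    "RouteTables": [{
        "RouteTableId": "rtb-0123456789abcdef0",
        "Routes": [
            {"DestinationCidrBlock": "10.30.0.0/24",
             "NetworkInterfaceId": "eni-0123456789abcdef0",
             "State": "active"},
        ],
    }]
}

for table_id, route in find_route(sample, "10.30.0.0/24"):
    print(table_id, route.get("NetworkInterfaceId"), route.get("State"))
```

Against a live account, `find_route(fetch_route_tables(vpc_id), "10.30.0.0/24")` would be the equivalent call, with `vpc_id` being the ID of the VPC that NC2 is deployed into.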

Verifying No-NAT routing and connectivity

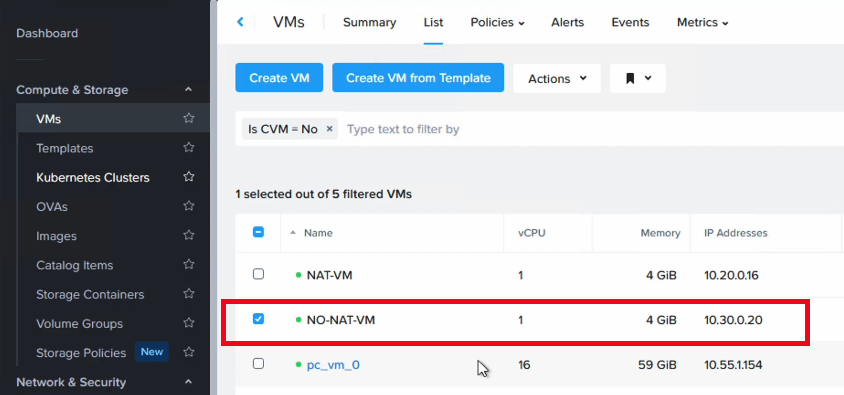

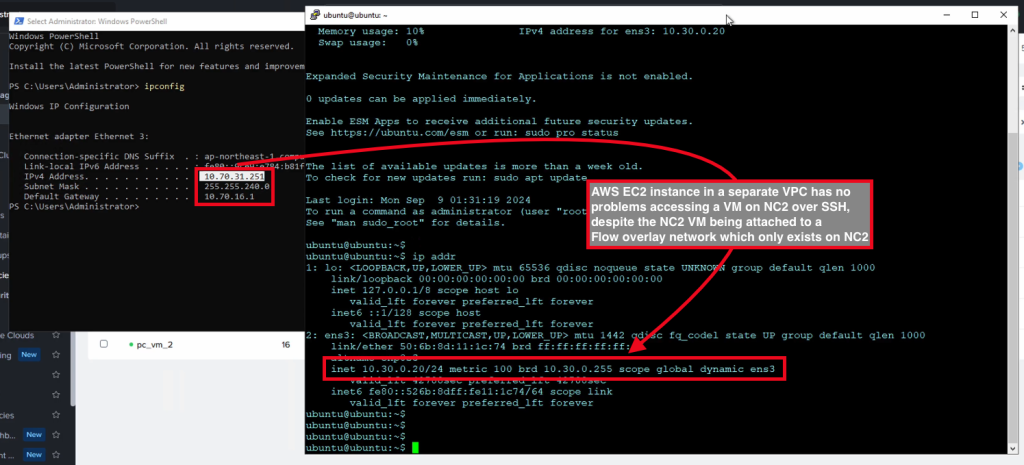

For testing purposes, deploy a VM in NC2 and attach it to the newly created No-NAT network. In this case the VM received an IP of “10.30.0.20”.

Next we add a static route to 10.30.0.0/24 in the TGW route table, marked as step 1 in the diagram below.

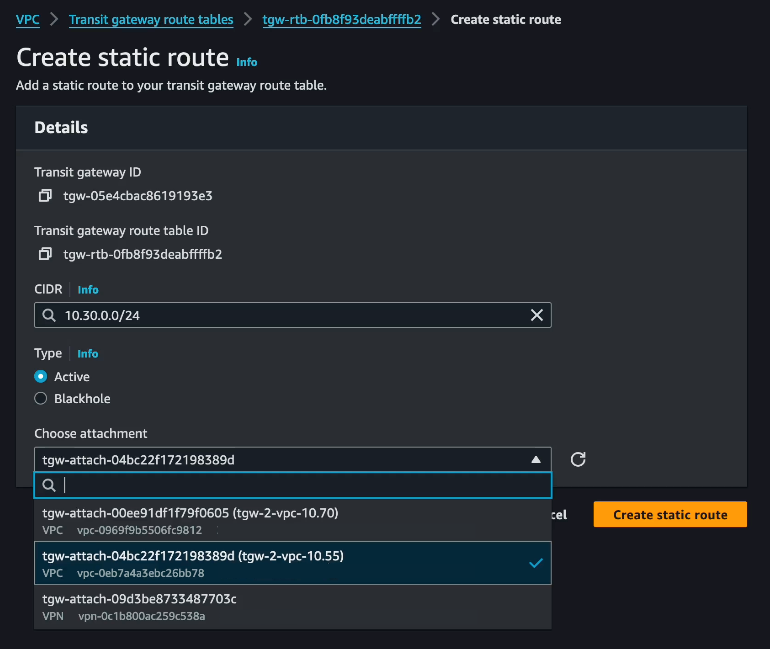

In the AWS console, navigate to “Transit Gateway route tables”, select the route table for the TGW used to provide connectivity between the two AWS native VPCs and add a static route for “10.30.0.0/24” pointing to the VPC attachment for the VPC holding the NC2 cluster.
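For those who prefer scripting over the console, the static TGW route could be added with boto3 roughly as follows. This is a sketch under assumptions: boto3 and AWS credentials are available when `add_tgw_static_route` is called, and the route table and attachment IDs are placeholders to be replaced with the real ones.

```python
import ipaddress


def tgw_static_route_params(tgw_route_table_id, attachment_id, cidr="10.30.0.0/24"):
    """Build the keyword arguments for the EC2 create_transit_gateway_route call."""
    return {
        # ip_network() raises ValueError on a malformed CIDR before anything is sent to AWS.
        "DestinationCidrBlock": str(ipaddress.ip_network(cidr)),
        "TransitGatewayRouteTableId": tgw_route_table_id,
        "TransitGatewayAttachmentId": attachment_id,
    }


def add_tgw_static_route(params):
    """Create the static route; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the parameters can be inspected without boto3

    return boto3.client("ec2").create_transit_gateway_route(**params)


# Placeholder IDs; the attachment is the one for the VPC holding the NC2 cluster.
params = tgw_static_route_params(
    "tgw-rtb-0123456789abcdef0",
    "tgw-attach-0123456789abcdef0",
)
print(params)
```

Building the parameters separately from the API call makes it possible to review them before anything changes in the account.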

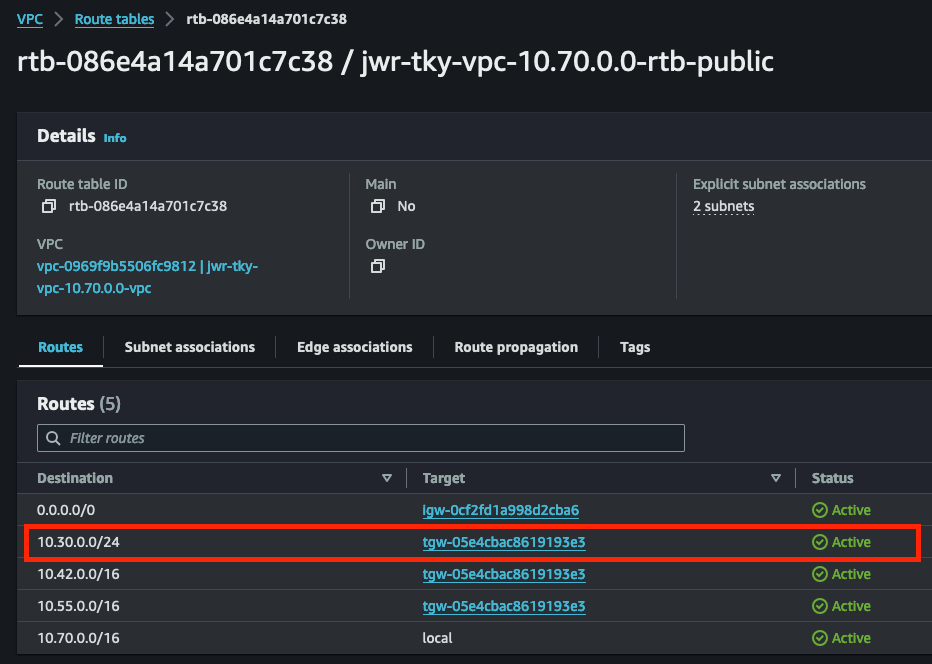

Finally, we add a static route for “10.30.0.0/24” to the route table of the native AWS VPC with CIDR “10.70.0.0/16” to enable the EC2 instances there to communicate with the test VM running on NC2. The target of this route is the TGW, since the TGW handles the communication between the NC2 cluster VMs and the attached AWS native VPC.
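To see how the three route entries fit together, the path of a packet from the EC2 instance to the test VM can be modeled with a longest-prefix-match lookup. The tables below are a simplified model containing only the routes from this walkthrough. The target names are descriptive labels, not real AWS identifiers, and real route tables will hold additional entries.

```python
import ipaddress


def lookup(route_table, destination):
    """Longest-prefix match: return the target of the most specific matching route."""
    ip = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if ip in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no route, the packet would be dropped
    return max(matches, key=lambda m: m[0].prefixlen)[1]


# Simplified model of the route tables involved in this example.
tables = {
    "EC2 VPC route table": {"10.70.0.0/16": "local", "10.30.0.0/24": "TGW"},
    "TGW route table": {"10.30.0.0/24": "NC2 VPC attachment"},
    "NC2 VPC route table": {"10.30.0.0/24": "ENI of active NC2 node"},
}

for name, table in tables.items():
    print(f"{name}: 10.30.0.20 -> {lookup(table, '10.30.0.20')}")
```

Looking up an address in the NATed range, such as 10.20.0.16, returns `None` in this model because no route for 10.20.0.0/24 exists in any of the tables, which matches the NATed subnet not being reachable from the AWS native side.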

This concludes the routing configuration. As the final step, we can verify connectivity by connecting from the AWS native Windows EC2 instance to the Ubuntu test VM on NC2 on AWS (with IP “10.30.0.20”) using SSH.

As shown in the screenshot, the NC2 VM can be accessed from an AWS EC2 instance without any problem, despite the NC2 VM being attached to a Flow overlay network which doesn’t exist outside NC2. The No-NAT configuration makes connectivity from the outside world possible.
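If an interactive SSH session is not convenient, a plain TCP check against port 22 gives a quick reachability signal. The sketch below uses only the Python standard library and would be run from the EC2 instance. It assumes the test VM runs an SSH server on the default port and that security groups allow the traffic. It only confirms that the port accepts connections, not that login works.

```python
import socket


def can_connect(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and unreachable networks
        return False


if __name__ == "__main__":
    # IP of the No-NAT test VM in this example.
    print(can_connect("10.30.0.20"))
```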