In early 2020, a new feature called “Telemetry Streaming” was added to the PowerEdge 14G servers. It makes it possible to send a continuous stream of telemetry containing in-depth information about the state of the server and its various components, including, but not limited to, the following:

- CPU, memory and fans

- FPGA and GPU

- PCIe slots

- Airflow inside the server

- Power usage information
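Telemetry from iDRAC9 is exposed through the Redfish API, and the readings arrive grouped into metric reports. The sketch below shows one way to summarize such a report once it has been received. It assumes a payload shaped like the DMTF Redfish `MetricReport` schema (a `MetricValues` array whose entries carry `MetricId`, `MetricValue` and `Timestamp`). The report ID, metric IDs and values in `SAMPLE_REPORT` are hypothetical, and real iDRAC reports may include additional (for example OEM) fields that are simply ignored here.

```python
import json
from collections import defaultdict

# Hypothetical payload, shaped like a DMTF Redfish MetricReport.
# The Id, MetricId names and values are illustrative only.
SAMPLE_REPORT = """
{
  "@odata.type": "#MetricReport.v1_2_0.MetricReport",
  "Id": "ExampleReport",
  "MetricValues": [
    {"MetricId": "FanSpeed", "MetricValue": "5400", "Timestamp": "2020-03-01T12:00:00+00:00"},
    {"MetricId": "FanSpeed", "MetricValue": "5600", "Timestamp": "2020-03-01T12:00:10+00:00"},
    {"MetricId": "PowerConsumption", "MetricValue": "312", "Timestamp": "2020-03-01T12:00:10+00:00"}
  ]
}
"""


def summarize_report(report: dict) -> dict:
    """Return min, max and latest value for each MetricId in a metric report."""
    readings = defaultdict(list)
    for entry in report.get("MetricValues", []):
        try:
            # Redfish carries MetricValue as a string; keep only numeric readings.
            value = float(entry["MetricValue"])
        except (KeyError, TypeError, ValueError):
            continue
        readings[entry.get("MetricId", "unknown")].append((entry.get("Timestamp", ""), value))

    summary = {}
    for metric_id, samples in readings.items():
        # ISO 8601 timestamps in the same format and offset sort correctly as strings.
        samples.sort()
        values = [v for _, v in samples]
        summary[metric_id] = {"min": min(values), "max": max(values), "latest": samples[-1][1]}
    return summary


if __name__ == "__main__":
    for metric_id, stats in summarize_report(json.loads(SAMPLE_REPORT)).items():
        print(metric_id, stats)
```

In a live setup the same function could be applied to each report as it arrives from the stream; the summary step is kept separate from transport so it can be tested without a server.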

Because the level and depth of information collected this way far exceed what was previously possible with IPMI or other tools, this feature can help in several areas, for example:

- Feeding machine learning (ML) algorithms for anomaly detection

- Providing detailed inventory, usage and status information

- Assisting with security and auditing

Blog posts in this series

- Introduction and overview (this post)

- Enabling telemetry streaming (3 methods of enabling Tel. Streaming)

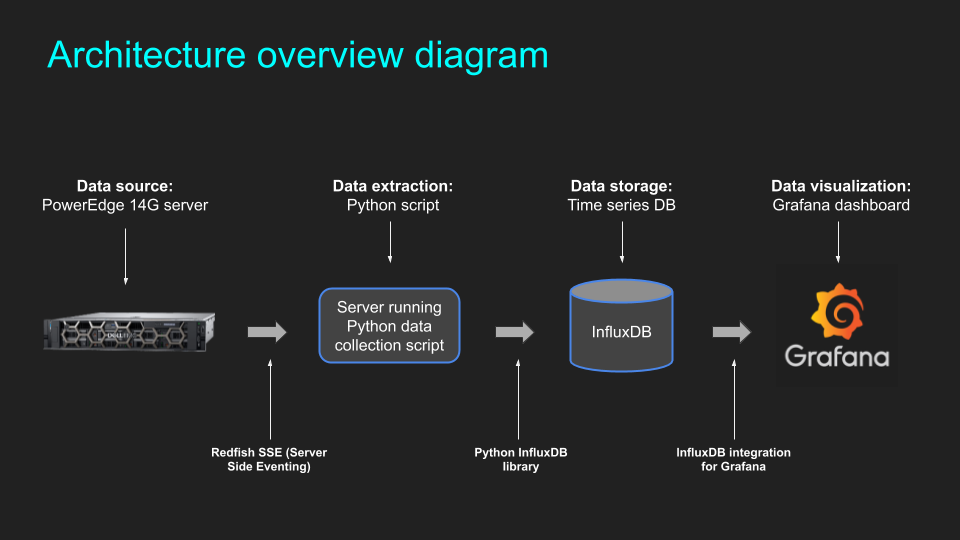

- Configuring telemetry streaming (setup of InfluxDB, Grafana, etc.)

Introductory video and demo

Links to useful documents and scripts published elsewhere:

Implementation of the DMTF Redfish API on Dell EMC PowerEdge Servers: http://en.community.dell.com/techcenter/extras/m/white_papers/20442330

Python scripts on GitHub: https://github.com/dell/iDRAC-Telemetry-Scripting

Introduction to Telemetry Streaming (highly recommended practical guide!): https://downloads.dell.com/Manuals/Common/dell-emc-idrac9-telemetry-streaming-basics.pdf

Analyst report on the Telemetry Streaming feature: https://www.dellemc.com/resources/en-us/asset/analyst-reports/products/servers/tolly-dell-emc-idrac9-v4-telemetry-streaming.pdf

Feature overview whitepaper: https://www.dellemc.com/resources/en-us/asset/white-papers/products/software/direct-from-development-datacenter-telemetry.pdf