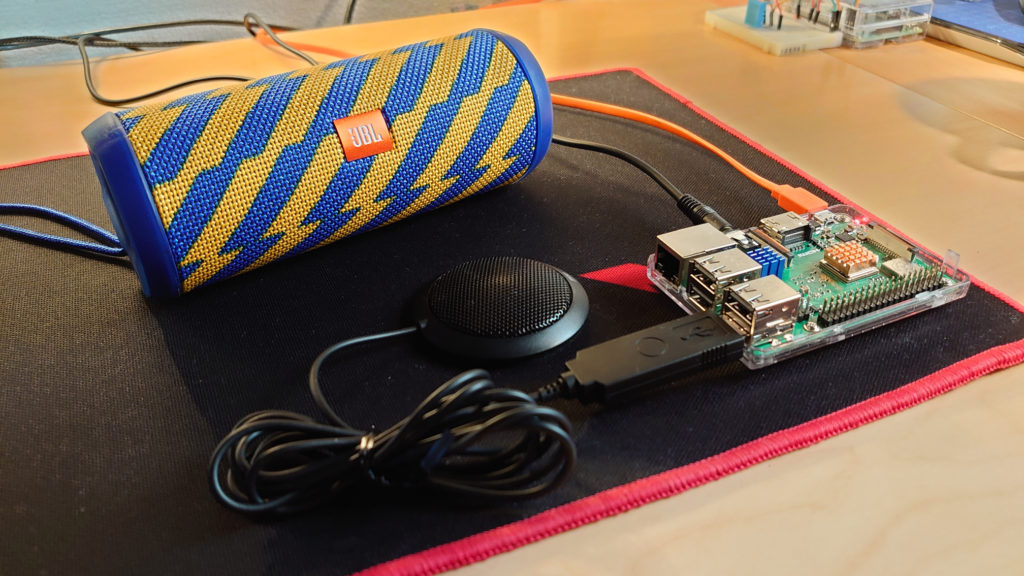

Although it seems simple, getting a USB microphone and a speaker connected to the 3.5mm audio jack working at the same time can be very challenging.

Some of the common errors seen when trying to record and play audio:

- arecord: main:788: audio open error: No such file or directory

- aplay: set_params:1305: Channels count non available

Find out device IDs

Speaker device

To see which devices are available to use as a speaker, use the following command:

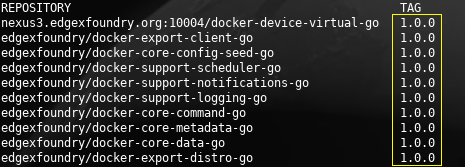

    pi@raspberrypi:~ $ aplay -l
    **** List of PLAYBACK Hardware Devices ****
    card 0: ALSA [bcm2835 ALSA], device 0: bcm2835 ALSA [bcm2835 ALSA]
      Subdevices: 7/7
      Subdevice #0: subdevice #0
      Subdevice #1: subdevice #1
      Subdevice #2: subdevice #2
      Subdevice #3: subdevice #3
      Subdevice #4: subdevice #4
      Subdevice #5: subdevice #5
      Subdevice #6: subdevice #6
    card 0: ALSA [bcm2835 ALSA], device 1: bcm2835 IEC958/HDMI [bcm2835 IEC958/HDMI]
      Subdevices: 1/1
      Subdevice #0: subdevice #0
    card 1: Microphone [USB Microphone], device 0: USB Audio [USB Audio]
      Subdevices: 1/1
      Subdevice #0: subdevice #0

Based on the above output we have three devices:

- The onboard 3.5mm plug listed as bcm2835 ALSA: Card 0, Device 0 (“hw:0,0”)

- The onboard HDMI connection listed as bcm2835 IEC958/HDMI: Card 0, Device 1 (“hw:0,1”)

- The USB microphone listed as USB Audio: Card 1, Device 0 (“hw:1,0”)

In this example we want to use the 3.5mm audio jack, so Card 0, Device 0 (“hw:0,0”) is our speaker device. The USB microphone doesn’t have audio output and is not valid as a speaker setting. It is listed anyway, which can cause confusion.

Microphone device

To see which devices are available to use as a microphone, use the following command:

    pi@raspberrypi:~ $ arecord -l
    **** List of CAPTURE Hardware Devices ****
    card 1: Microphone [USB Microphone], device 0: USB Audio [USB Audio]
      Subdevices: 1/1
      Subdevice #0: subdevice #0

We only have one device and that’s the USB microphone listed as Card 1 and Device 0 (“hw:1,0”).
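The card and device numbers in both listings can be turned into `hw:` identifiers mechanically. The following is a minimal sketch rather than part of the original setup: `list_hw_ids` is a hypothetical helper name, and it assumes the English output format shown above.

```shell
# list_hw_ids: read `aplay -l` or `arecord -l` style output on stdin and
# print one "hw:CARD,DEVICE  NAME" line per listed device.
# Hypothetical helper; assumes the English "card N: ..., device M: ..." format.
list_hw_ids() {
    sed -n 's/^card \([0-9][0-9]*\): .*, device \([0-9][0-9]*\): \(.*\)$/hw:\1,\2  \3/p'
}
```

Typical usage would be `LC_ALL=C aplay -l | list_hw_ids` for speakers and `LC_ALL=C arecord -l | list_hw_ids` for microphones; `LC_ALL=C` keeps the tool output in the untranslated format the pattern expects.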

Configuring the audio settings

To set the audio settings, create or modify the .asoundrc file in the user’s home directory as follows. That would be /home/pi/.asoundrc for the default user.

    pi@raspberrypi:~ $ cat .asoundrc
    pcm.!default {
        type asym
        capture.pcm "mic"
        playback.pcm "speaker"
    }

    pcm.mic {
        type plug
        slave {
            pcm "hw:1,0"
        }
    }

    pcm.speaker {
        type plug
        slave {
            pcm "hw:0,0"
        }
    }
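Card and device numbers differ between systems, so the same file can also be generated from the IDs found earlier. This is only a sketch under that assumption: `make_asoundrc` is a hypothetical helper name, and it is meant to be saved in a script file and sourced rather than typed at an interactive prompt.

```shell
# make_asoundrc MIC SPEAKER
# Print an .asoundrc that captures from MIC and plays back on SPEAKER.
# Both arguments are "CARD,DEVICE" pairs, for example 1,0 and 0,0.
# Hypothetical helper; the layout mirrors the configuration shown above.
make_asoundrc() {
    mic="$1"
    speaker="$2"
    cat <<EOF
pcm.!default {
    type asym
    capture.pcm "mic"
    playback.pcm "speaker"
}

pcm.mic {
    type plug
    slave {
        pcm "hw:${mic}"
    }
}

pcm.speaker {
    type plug
    slave {
        pcm "hw:${speaker}"
    }
}
EOF
}
```

For the devices in this example, `make_asoundrc 1,0 0,0 > ~/.asoundrc` would write the configuration to the home directory.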

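ALSA reads this file when a program opens an audio device, so newly started programs pick up the settings. Programs that are already running need to be restarted; logging out and back in, rebooting or reloading the audio service also applies the settings.

To apply the settings system-wide, copy the .asoundrc file to /etc/asound.conf.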

Test the new settings

To test recording audio, use arecord without specifying the device to record from. If our settings are correct, the default device is already configured and arecord picks it up. Press Ctrl+C to stop recording.

    arecord -f S16_LE -r 48000 test.wav

To play the recorded sound, use aplay:

    aplay test.wav

Sample rate

If things still don’t work, consider checking the sampling rate of the microphone. I bought a new mic for use with AWS Lex and Alexa, but it wouldn’t work. The required sampling rate was 16000 Hz and the mic didn’t support it.

It turns out an old PlayStation 3 camera supports a 16000 Hz sample rate. The supported rates can be checked as follows:

    cat /proc/asound/card1/stream0 | egrep -i rate
    Rates: 16000
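The same check can be scripted when a specific rate is required. The sketch below is an illustration only: `supports_rate` is a hypothetical helper name, and it assumes the `Rates:` line holds a single value or a comma-separated list, as in the output above. It does not handle continuous ranges.

```shell
# supports_rate FILE RATE
# Succeed (exit status 0) if RATE in Hz appears on a "Rates:" line of FILE,
# e.g. /proc/asound/card1/stream0.
# Hypothetical helper; handles "Rates: 16000" and "Rates: 8000, 16000",
# but not continuous ranges.
supports_rate() {
    grep -i 'rates:' "$1" | tr -d ' \t' | cut -d: -f2 | tr ',' '\n' | grep -qx "$2"
}
```

For example, `supports_rate /proc/asound/card1/stream0 16000 && echo "16000 Hz supported"` would print the message only when the microphone advertises that rate.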