The open source IoT solution EdgeX Foundry has just had its first Long-Term Support (LTS) release. This is a real milestone: the project has finally left the 0.x versions behind and reached its first major 1.x release. After a journey of more than two years, it’s ready for broad adoption. EdgeX Foundry is an official Linux Foundation project and part of LF Edge.

Why is EdgeX Foundry so relevant?

Data ingestion

- It natively speaks the protocols and data formats of a myriad of edge devices

- Without the need for agents, it ingests data from most edge devices and sensors

- It converts the data to XML or JSON for easy processing

- It streams the data in real time to internal or external cloud and big-data platforms for visualization and processing
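
To make the conversion step more concrete, here is a minimal Python sketch of how raw sensor values could be wrapped into a JSON document before being streamed onward. The function name `to_event`, the device name and the field layout (`device`, `origin`, `readings`) are assumptions made for illustration only; check the EdgeX Foundry docs for the exact event schema.

```python
import json
import time


def to_event(device_name, readings, origin_ms=None):
    """Wrap raw sensor values in an event-like dictionary.

    Hypothetical helper: the field names are illustrative and not
    guaranteed to match the EdgeX Foundry schema.
    """
    if origin_ms is None:
        # Timestamp in milliseconds since the Unix epoch.
        origin_ms = int(time.time() * 1000)
    return {
        "device": device_name,
        "origin": origin_ms,
        "readings": [
            # Values are stored as strings so every reading has the same type.
            {"name": name, "value": str(value), "origin": origin_ms}
            for name, value in readings.items()
        ],
    }


# Example input from a made-up thermostat.
event = to_event(
    "thermostat-01",
    {"temperature": 21.5, "humidity": 40},
    origin_ms=1560000000000,
)
print(json.dumps(event, indent=2))
```

Storing every value as a string keeps the structure uniform across very different sensors; the consumer can then parse each reading according to its name.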

Control and automation

- It supports the native commands of edge devices and can change camera angles, fan speeds, etc.

- It has rules that can act on input and trigger commands for instant action and automation
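
The idea of rules that act on input and trigger commands can be sketched in a few lines of Python. This is not the EdgeX Foundry rules engine or its API; the `Rule` and `Command` classes, the `evaluate` function and the fan example are all hypothetical and only show the pattern of "if a reading matches a condition, issue a command".

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Command:
    """A command to send to a device (hypothetical structure)."""
    device: str
    name: str
    value: str


@dataclass
class Rule:
    """Fire `command` when a reading with `reading_name` satisfies `condition`."""
    reading_name: str
    condition: Callable[[float], bool]
    command: Command


def evaluate(rules: List[Rule], reading_name: str, value: float) -> List[Command]:
    """Return the commands of all rules triggered by one incoming reading."""
    return [
        rule.command
        for rule in rules
        if rule.reading_name == reading_name and rule.condition(value)
    ]


# Example: turn the fan up when the temperature exceeds 30 degrees.
rules = [
    Rule("temperature", lambda t: t > 30.0, Command("fan-01", "set_speed", "high")),
]
triggered = evaluate(rules, "temperature", 32.5)
for command in triggered:
    print(f"send {command.name}={command.value} to {command.device}")
```

In a real deployment the triggered commands would be handed to the platform's command service instead of being printed.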

Architecture

- EdgeX Foundry is cloud-native

- It’s open source and can be downloaded by anyone free of charge

- It’s made up of microservices running in Docker containers

- It’s modular, flexible and can be integrated into other IoT management systems

How does it fit in with the rest of the IoT world?

While the Internet of Things is fairly new and very much a buzzword, the concept of connected devices goes back much further, through the Machine-to-Machine (M2M) era.

Oftentimes these solutions are vendor-specific, siloed and lack any unified layer for insight, control and management. EdgeX Foundry not only bridges the old M2M and the new IoT solutions but also connects the edge to the core data center and to the cloud. It’s the glue that holds the world of IoT together.

It can be used stand-alone, as part of a larger IoT solution (the containers are easy to integrate because each one holds an individual service), or together with a commercial IoT solution such as VMware Pulse IoT Center 2.0.

How to get started

Many resources are available for those looking to get started with EdgeX Foundry. There are starter guides and tutorials on the project page as well as docker-compose files on GitHub, as listed below:

- Main project page: https://www.edgexfoundry.org/

- Slack: https://slack.edgexfoundry.org/

- Wiki: https://wiki.edgexfoundry.org/

- Docs: https://docs.edgexfoundry.org/

- Docker Hub: https://hub.docker.com/u/edgexfoundry/

- Docker-Compose files: https://github.com/edgexfoundry/developer-scripts/tree/master/releases/edinburgh/compose-files

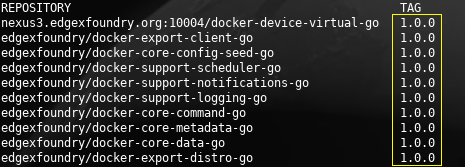

Pulled images from Docker Hub

Version 1.0.0 as far as the eye can see 🙂
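
Once the containers have been started from one of the compose files, a quick way to see whether the core services respond is to call their ping endpoints. The Python sketch below assumes the services are published on localhost, on ports 48080 to 48082, with a `/api/v1/ping` path. These values are assumptions based on the Edinburgh defaults, so verify them against the compose file you actually use. The helper names `ping_url`, `is_up` and `check_all` are made up for this example.

```python
import urllib.request

# Assumed default ports; verify them against your compose file.
SERVICES = {
    "core-data": 48080,
    "core-metadata": 48081,
    "core-command": 48082,
}


def ping_url(port, host="localhost"):
    """Build the ping URL for a service (the path is an assumption)."""
    return f"http://{host}:{port}/api/v1/ping"


def is_up(port, host="localhost", timeout=2.0):
    """Return True if the service answers the ping with HTTP 200."""
    try:
        with urllib.request.urlopen(ping_url(port, host), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers refused connections, timeouts and HTTP error responses.
        return False


def check_all(host="localhost"):
    """Print a one-line status for every service in SERVICES."""
    for name, port in SERVICES.items():
        status = "up" if is_up(port, host) else "not reachable"
        print(f"{name}: {status}")
```

Calling `check_all()` prints one status line per service; anything reported as not reachable is a good place to start looking at the container logs.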