Some customers require cross-region disaster recovery (DR) in AWS but often face the challenge of changing IP addresses during a failover to another region. This change can disrupt external access to services running on instances covered by the DR policy.

Nutanix Cloud Clusters (NC2) address this challenge with built-in DR functionality that keeps IP addresses consistent during failovers between regions. As a bonus, NC2 allows CPU over-provisioning, so the migration may also reduce compute costs. However, NC2 can only retain IP addresses during DR for workloads that already reside on a Nutanix cluster, so we have to migrate the EC2 instances first.

The free Nutanix Move migration tool can migrate workloads from Amazon EC2 to Nutanix Cloud Clusters, though it currently lacks support for IP retention. In this blog we use some creative workarounds to maintain consistent IPs throughout the migration, although note that MAC addresses will change. NC2 then retains the IPs during regional failovers as part of the Nutanix DR solution. Let’s dive in!

Software versions used during testing

| Component | Version |
| --- | --- |
| AOS | 6.10 |
| Prism Central | pc.2024.2 |

Solution architecture

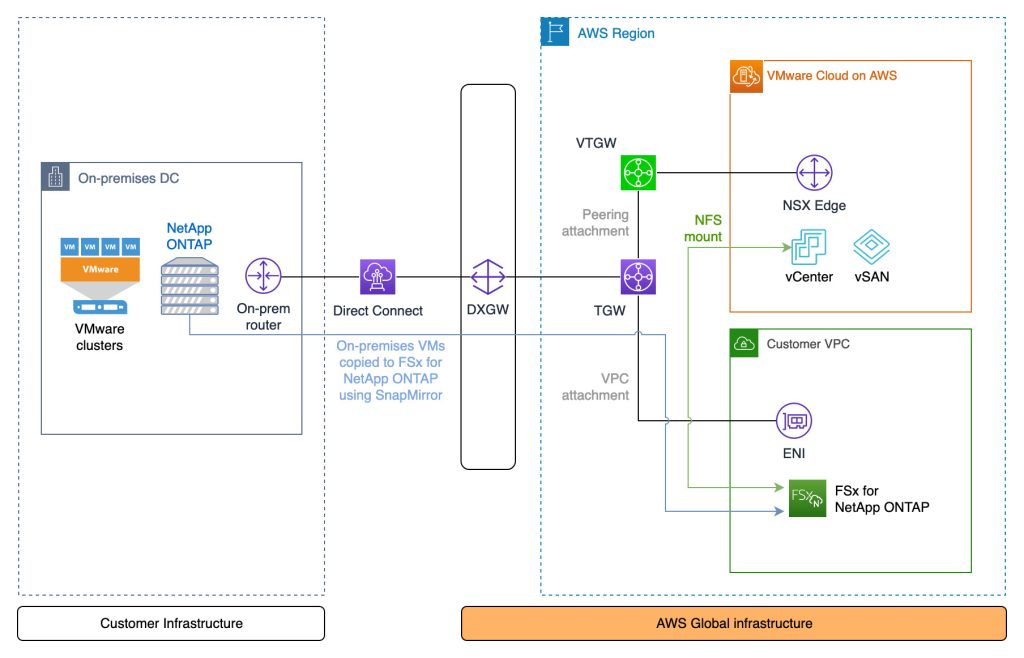

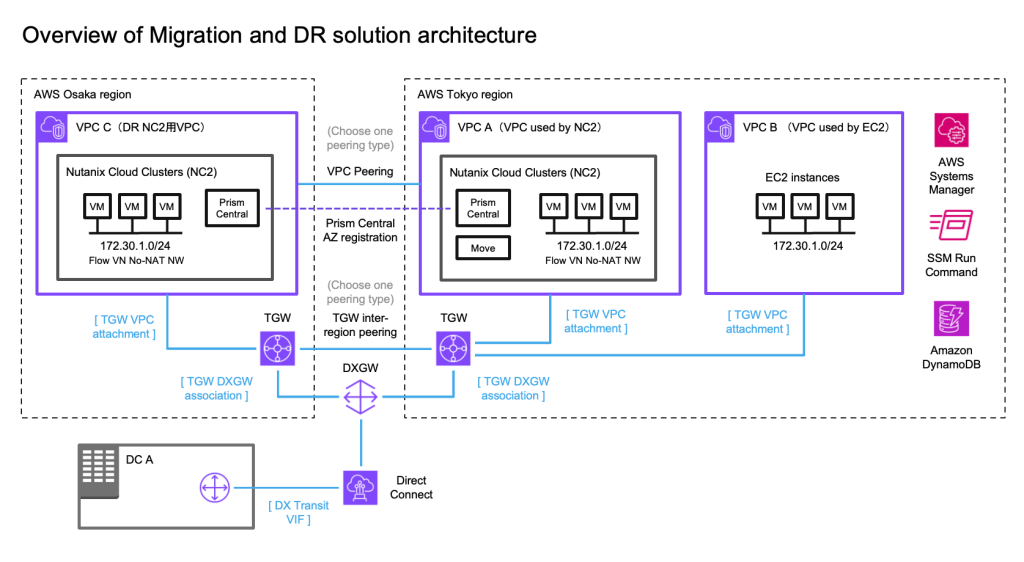

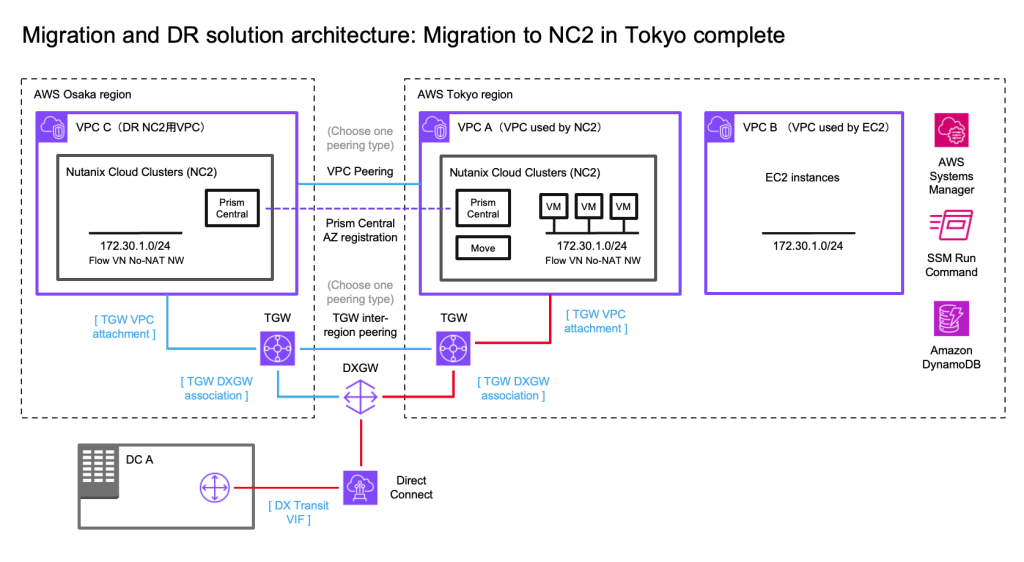

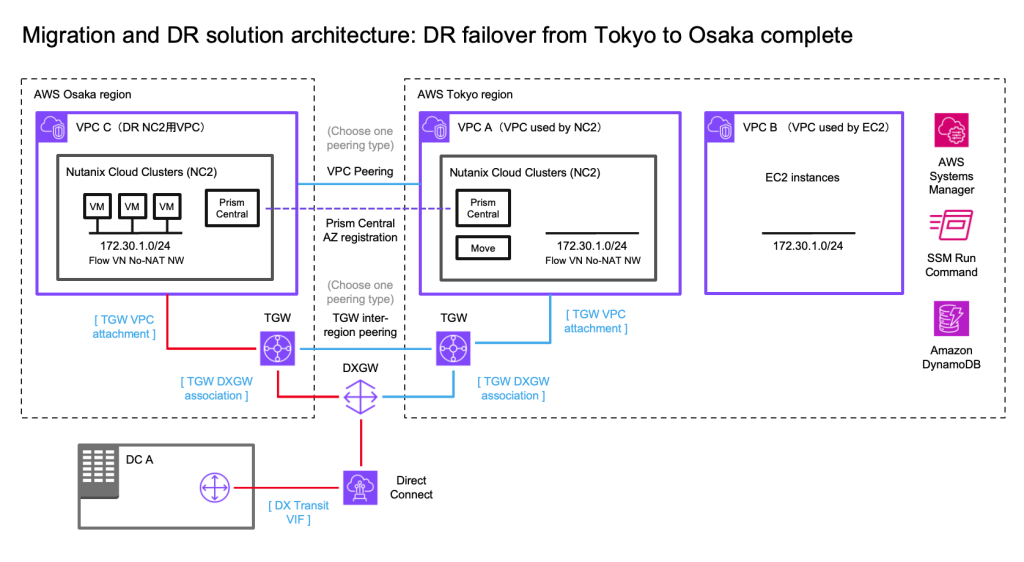

In this case we have an on-premises datacenter (DC), an AWS VPC with EC2 instances which we want to have covered by a DR policy (so they can fail over to another region without changing IP addresses) and finally two NC2 clusters – one in the primary region and one in a separate region for DR purposes. We use Tokyo (ap-northeast-1) as the primary AWS region and Osaka (ap-northeast-3) as the disaster recovery location in this example.

The diagrams show connectivity to the on-premises environment over Direct Connect, although all testing of this solution was done with a site-to-site (S2S) VPN attached to the TGW in each region. Peering between the two DR locations can be done with either cross-region VPC peering or peering of two Transit Gateways (TGWs).

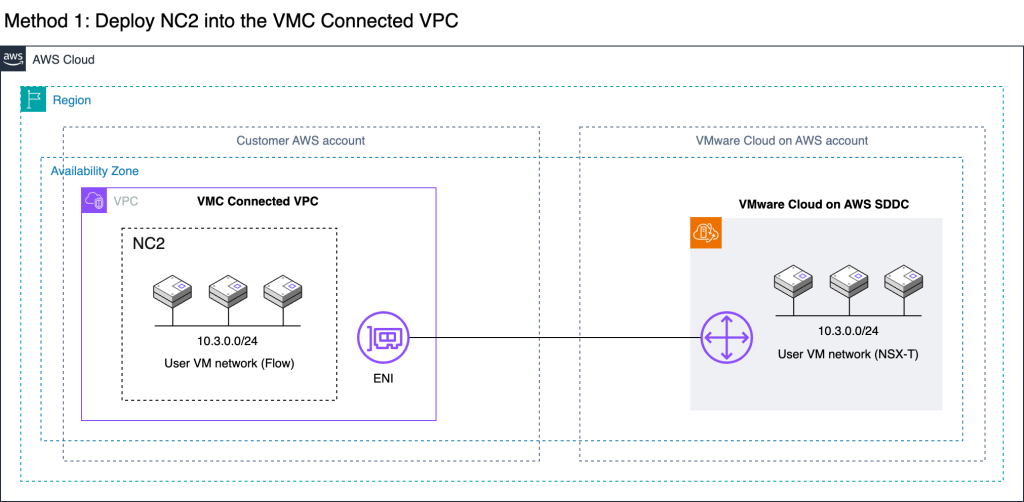

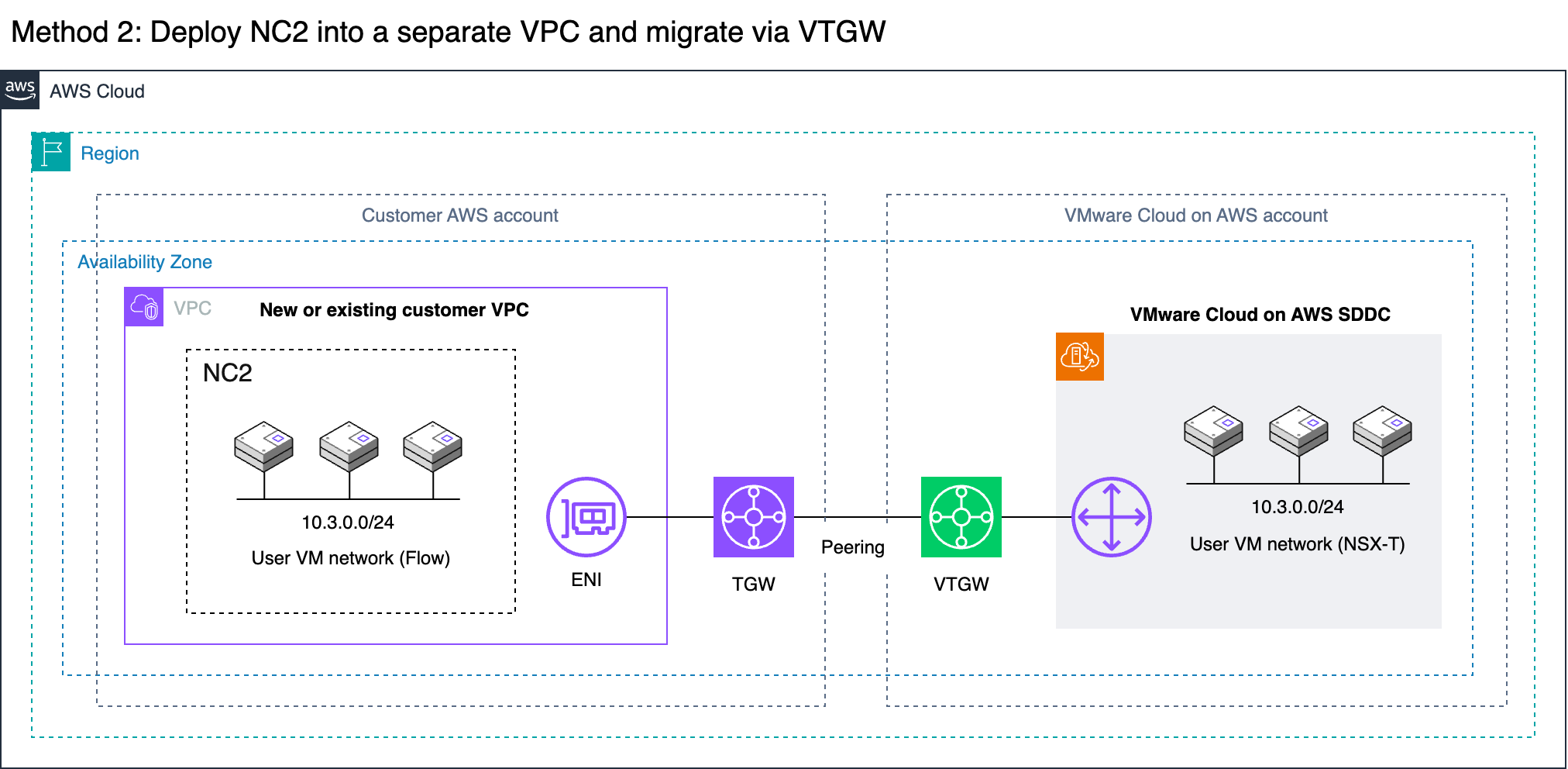

Networking

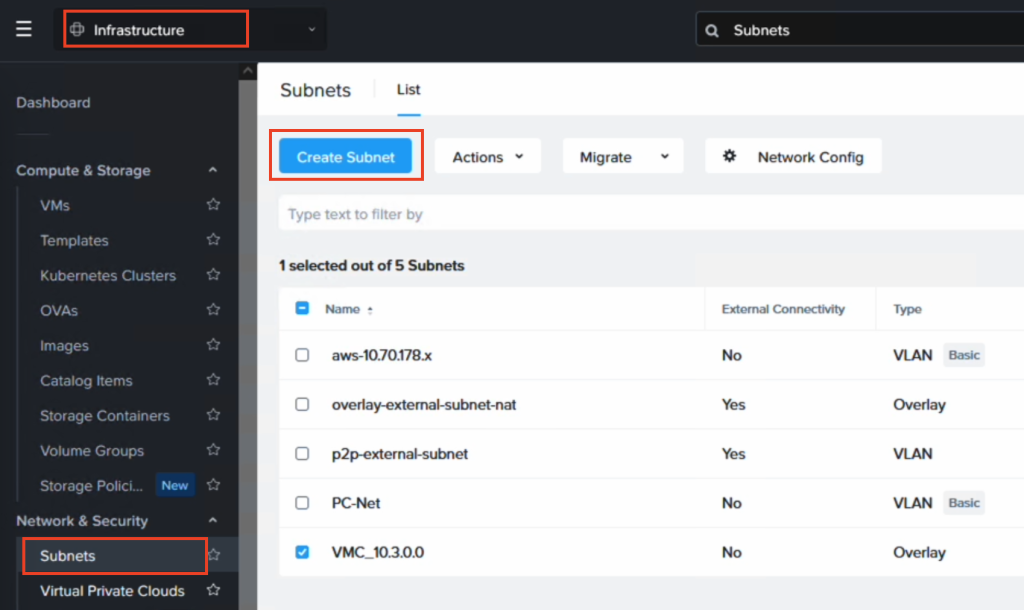

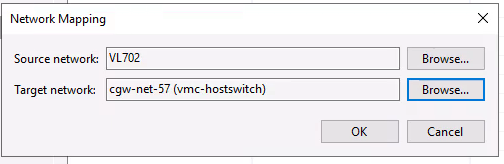

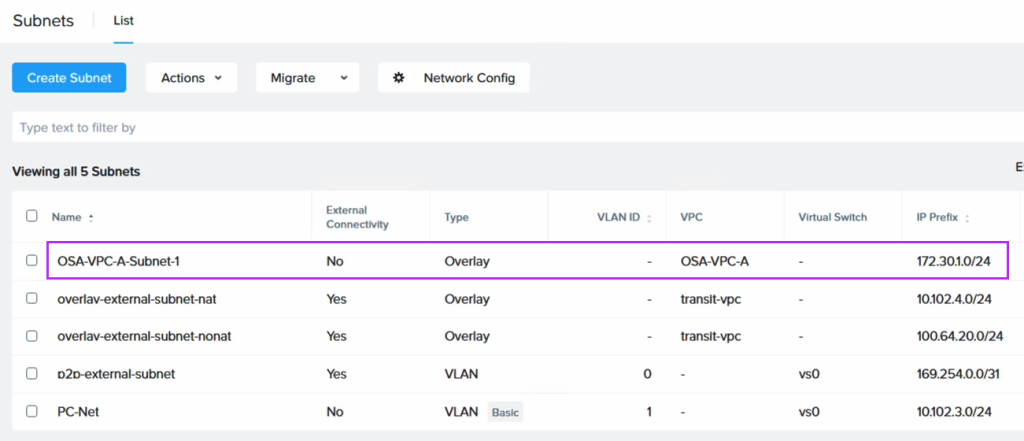

To retain the IP addresses of the migrated EC2 instances we use Flow Virtual Networking (FVN) to create no-NAT overlay networks on NC2 with a CIDR range that matches the original subnet the EC2 instances are connected to. We create this overlay network on both the Tokyo and Osaka NC2 clusters so that we can later fail over the VMs and have them attach to a network with the same CIDR range.

To ensure the on-premises DC is able to access the VMs we modify the route tables throughout the process. That way we maintain routes which point to the migrated EC2 instances, regardless of where they are located.

Automating the VPC and TGW creation with Terraform / OpenTofu

If you'd like to try this out yourself, the Terraform / OpenTofu templates for deploying the VPCs, the TGWs and their routing can be found on GitHub here:

https://github.com/jonas-werner/aws-dual-region-peered-tgw-with-vpcs-for-nc2-dr

When to use which peering type for inter-region connectivity

Generally, VPC peering is the better fit for lower-traffic scenarios or when the simplicity of direct peering is desirable, despite the slightly higher data transfer costs it incurs.

TGW peering is more cost-efficient for high-traffic environments or complex architectures, where centralized management and lower per-GB data charges outweigh the additional cost of each TGW attachment. Note that although traffic passes through two TGWs, the peering attachment doesn't incur data processing charges, so the data is only charged once (roughly USD 0.02 per GB in the Tokyo region).

The workaround for IP retention

As mentioned in the introduction, while the Nutanix Move virtual appliance is very capable at migrating from EC2 to NC2, it is at time of writing unable to retain the IP addresses of the workloads it migrates. To work around this we do the following:

- Prior to the migration we use AWS Systems Manager (SSM) to run a PowerShell or Bash script on the instances to be migrated. The script captures each EC2 instance's ID, hostname and local IP address and stores that information in a DynamoDB table for later use.

- We perform the migration from EC2 to NC2 using Nutanix Move. The IP address will change although we migrate the instance to a Flow Virtual Networking (FVN) overlay network with the same CIDR range as the original network the EC2 instances are connected to.

- We run a Python script which uses the DynamoDB information as a template and connects to the Nutanix Prism Central API. For each migrated instance it removes the existing network interface and adds a new one with the correct (original) IP address.

Once the instances are migrated to NC2 the process of configuring DR between Tokyo and Osaka regions is trivial.

You can download the PowerShell and Python scripts used in this blog on GitHub:

https://github.com/jonas-werner/EC2-to-NC2-with-IP-preservation/tree/main

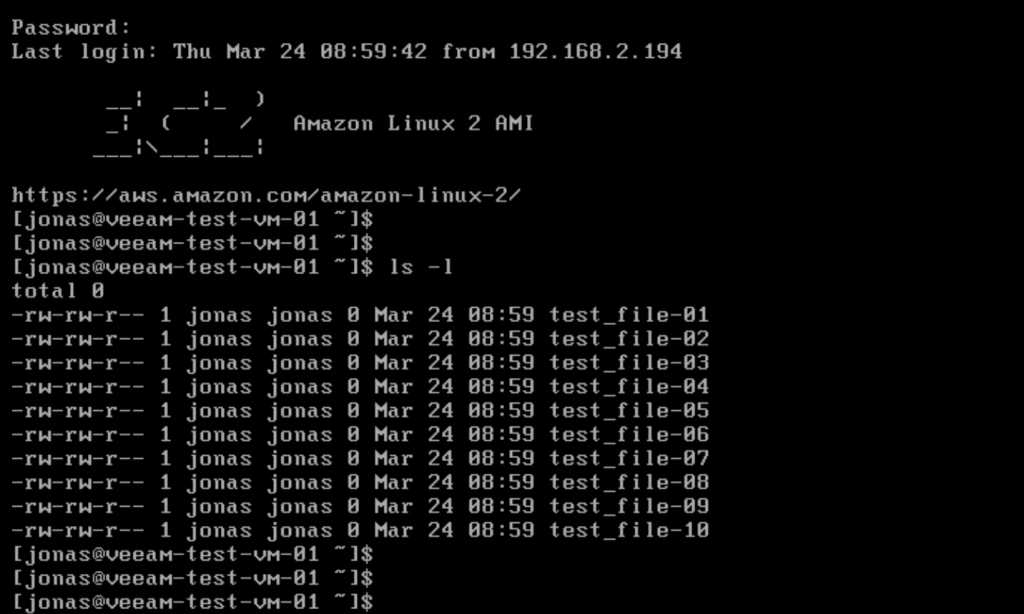

Step 1: Capture IP addresses of the EC2 instances

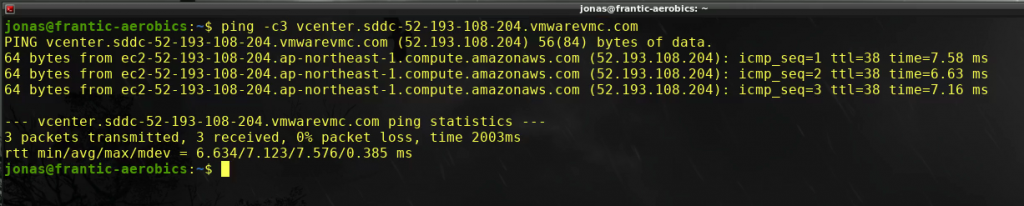

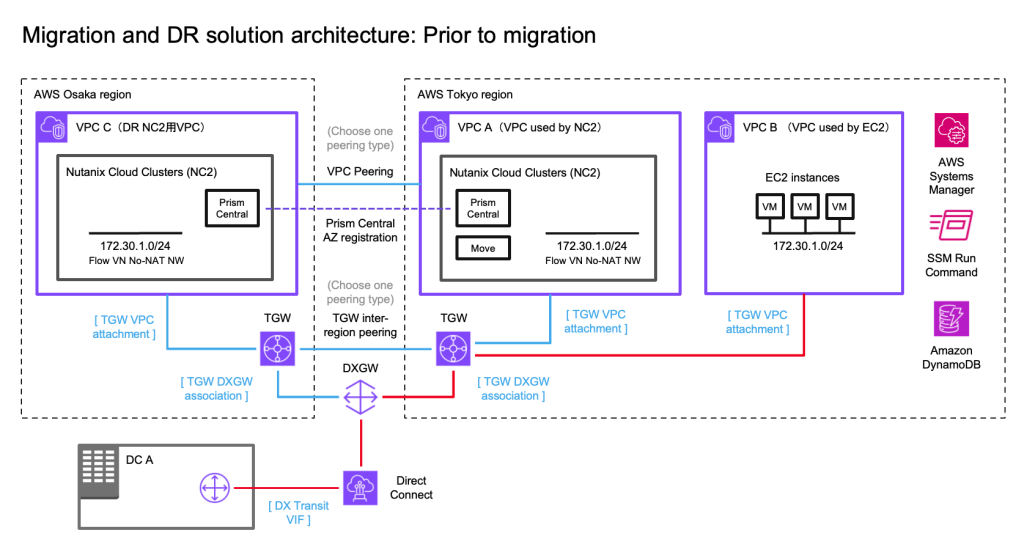

In this step we prepare for the migration from EC2 to NC2. The initial state of the network, the workloads and the route to the 172.30.1.0/24 network is as illustrated by the red line in the below diagram.

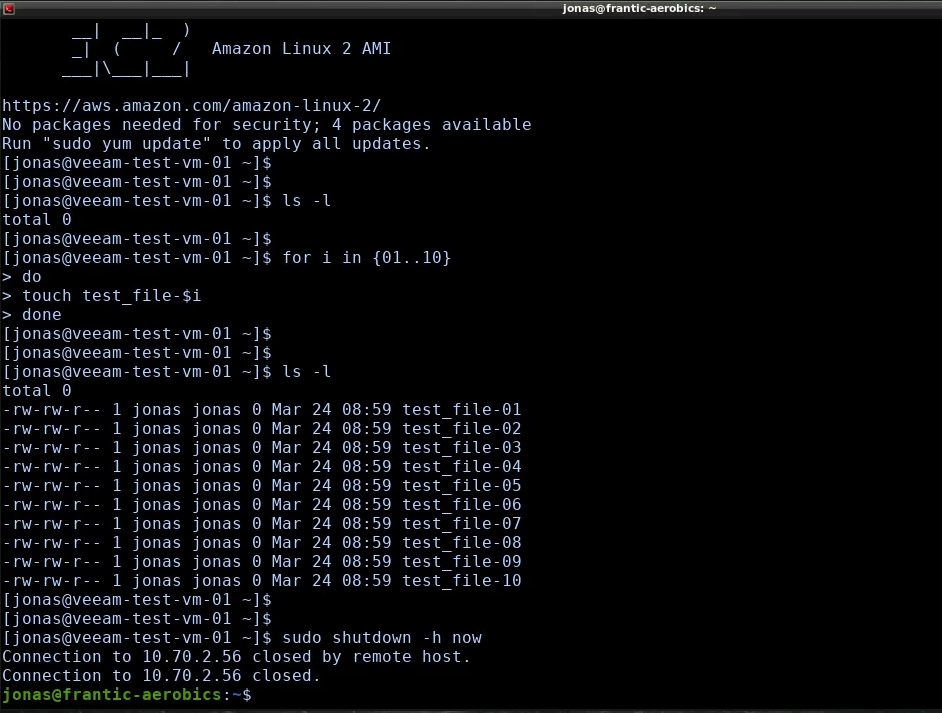

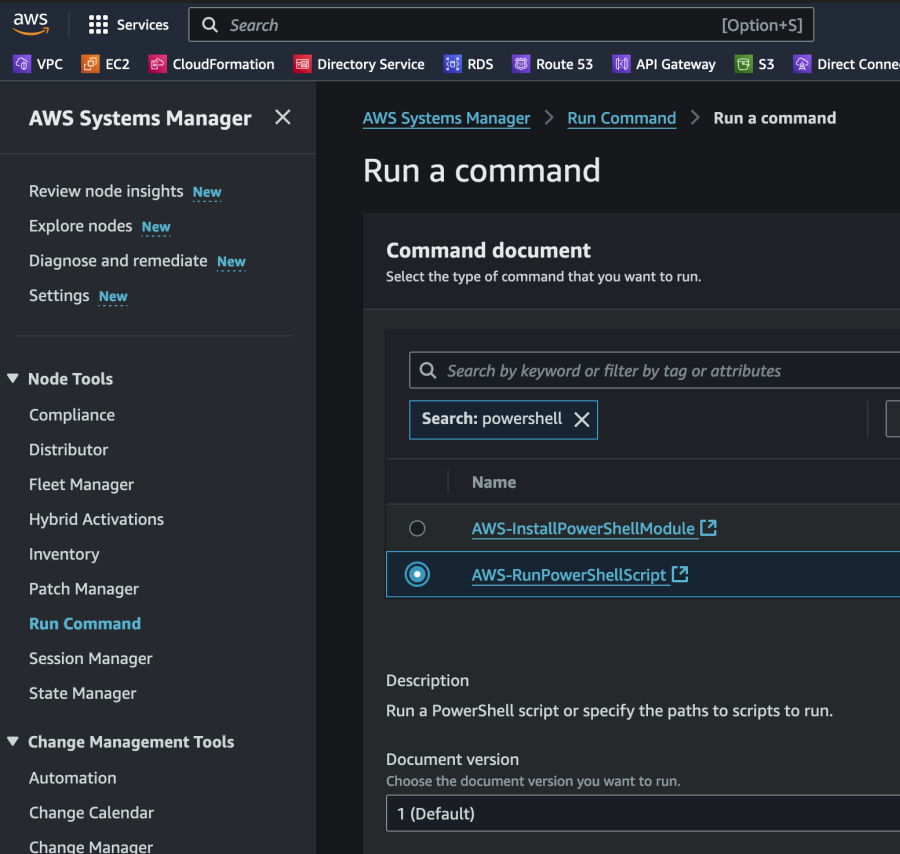

To start with we gather information about the EC2 instances and store that info in DynamoDB. In the name of efficiency we use the SSM Run command to execute the PowerShell script. This makes it easy to get this done in a single go (or two “goes” if we do both Windows and Linux workloads). We test with a single Windows 2019 Server EC2 instance in this example.

First create a DynamoDB table to hold this information. Nothing special is required for this table as long as it is accessible to SSM as it runs the script. We need to give the IAM role used when running SSM commands access to DynamoDB of course, so we add the following permissions to the standard SSM role:

{

"Sid": "AllowDynamoDBAccess",

"Effect": "Allow",

"Action": [

"dynamodb:PutItem"

],

"Resource": "arn:aws:dynamodb:<your-aws-region>:<your-aws-account>:table/<dynamodb-table-name>"

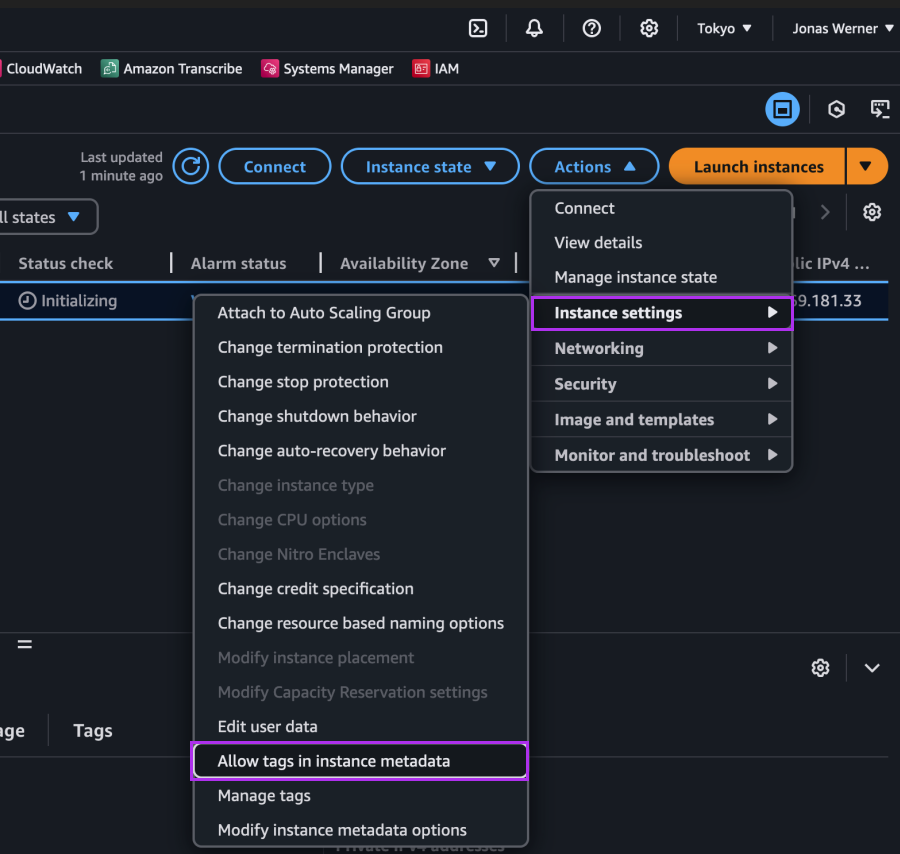

},In order to collect the instance name from meta data we enable the “Allow tags in instance metadata” setting in the EC2 console. This is important as we will use the “Name” tag in EC2 as the Key to look up the instance in NC2 post-migration. Of course other methods could be used – most obviously the name of the instance itself. However in this case we use the EC2 name tag, as this is also how the VM will show up in NC2 post migration.
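For Linux instances, or simply to see what the capture step boils down to, the following is a minimal Python sketch of reading the same fields from the instance metadata service (IMDSv2). It is not the PowerShell script used in this blog. The record field names (`Name`, `InstanceId`, `Hostname`, `PrivateIp`) are our own illustrative choices, and the `hostname` value returned by the metadata service is the EC2 private DNS name, which may differ from the hostname configured inside the OS.

```python
import urllib.request

IMDS = "http://169.254.169.254/latest"


def imds_token(ttl_seconds=60):
    """Request a short-lived IMDSv2 session token."""
    req = urllib.request.Request(
        f"{IMDS}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )
    with urllib.request.urlopen(req, timeout=2) as resp:
        return resp.read().decode().strip()


def imds_get(path, token):
    """Fetch one metadata value using the session token."""
    req = urllib.request.Request(
        f"{IMDS}/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )
    with urllib.request.urlopen(req, timeout=2) as resp:
        return resp.read().decode().strip()


def capture_record(get=None):
    """Collect the fields to store.

    `get` maps a metadata path to its value. It defaults to the real
    metadata service and can be swapped for a dict lookup in a dry run.
    """
    if get is None:
        token = imds_token()
        get = lambda path: imds_get(path, token)
    return {
        # Only served when "Allow tags in instance metadata" is enabled.
        "Name": get("tags/instance/Name"),
        "InstanceId": get("instance-id"),
        "Hostname": get("hostname"),
        "PrivateIp": get("local-ipv4"),
    }
```

Calling `capture_record()` on the instance itself returns a dictionary ready to be written to the table. Off the instance, passing a dictionary's `__getitem__` as `get` lets us check the record shape without any network access.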

Then we execute the script on our instances through the SSM Run command as follows:

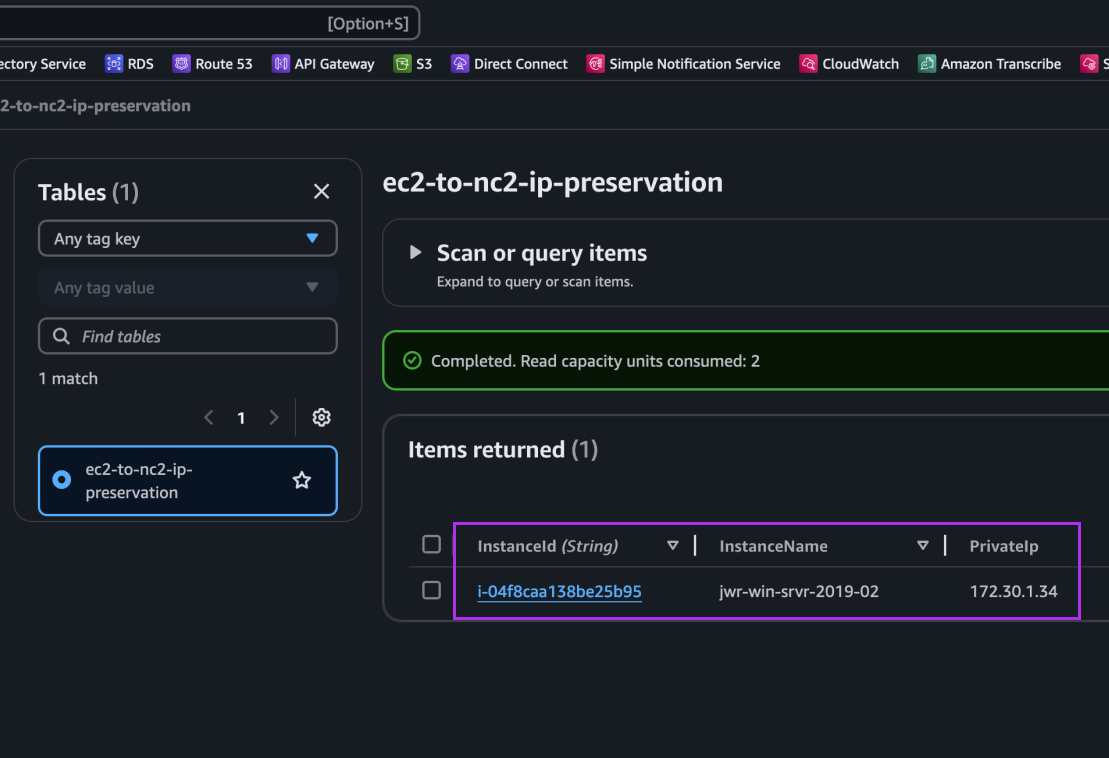

After execution we can see an entry for our Windows EC2 instance showing its instance ID, hostname and IP address: 172.30.1.34. This is the IP we want to retain.
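To make the shape of that table entry concrete, here is a small Python sketch of writing and reading such a record with boto3. It assumes the table's partition key is an attribute called `Name` holding the EC2 Name tag, and the other attribute names are equally hypothetical, so adjust them to whatever your capture script actually writes. Note also that the IAM statement shown earlier only grants `dynamodb:PutItem`. Whoever reads the table later needs read permission through their own credentials.

```python
def to_item(record):
    """Shape a captured record as a DynamoDB item keyed on the EC2 Name tag."""
    return {
        "Name": record["Name"],              # assumed partition key
        "InstanceId": record["InstanceId"],
        "Hostname": record["Hostname"],
        "PrivateIp": record["PrivateIp"],
    }


def ip_from_response(response):
    """Pull the stored IP out of a get_item response, or None if absent."""
    item = response.get("Item")
    return item["PrivateIp"] if item else None


def open_table(region, table_name):
    """Return a boto3 Table resource (boto3 is imported lazily here so the
    pure helpers above can be used without it installed)."""
    import boto3
    return boto3.resource("dynamodb", region_name=region).Table(table_name)


def save(table, record):
    """Write one captured record to the table."""
    table.put_item(Item=to_item(record))


def original_ip(table, vm_name):
    """Look up the IP an instance had on EC2, by its Name tag."""
    return ip_from_response(table.get_item(Key={"Name": vm_name}))
```

With a table opened through `open_table("ap-northeast-1", "<dynamodb-table-name>")`, calling `original_ip(table, "<name-tag>")` would return `172.30.1.34` for the instance in this example.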

That’s all for this section. Next we perform the migration from EC2 to NC2.

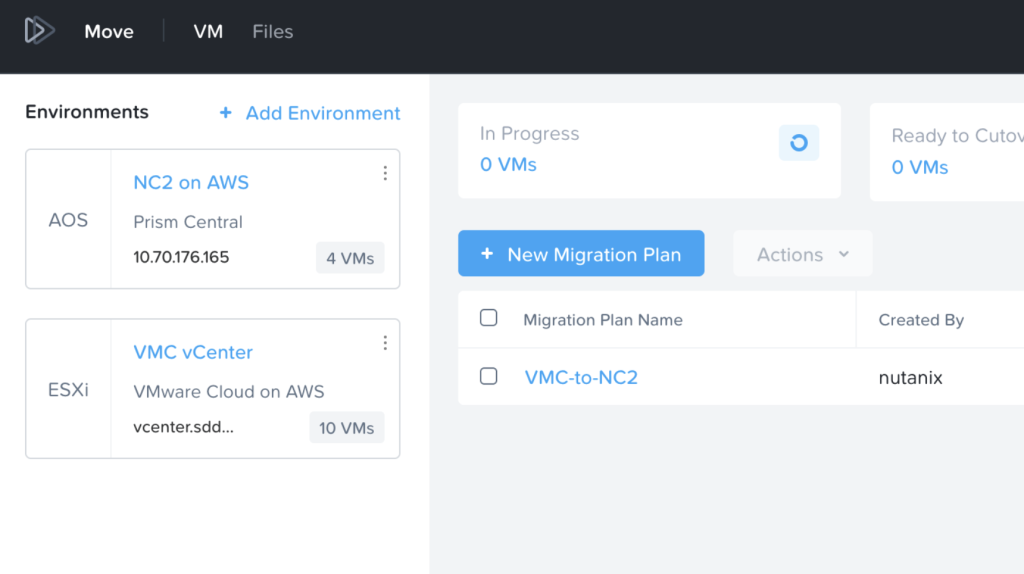

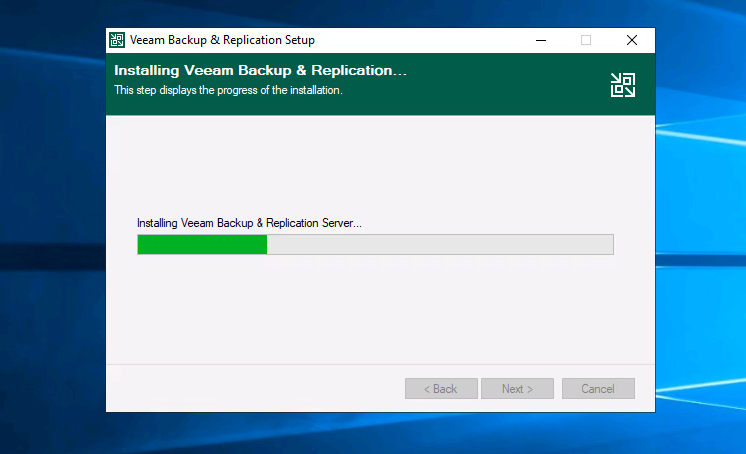

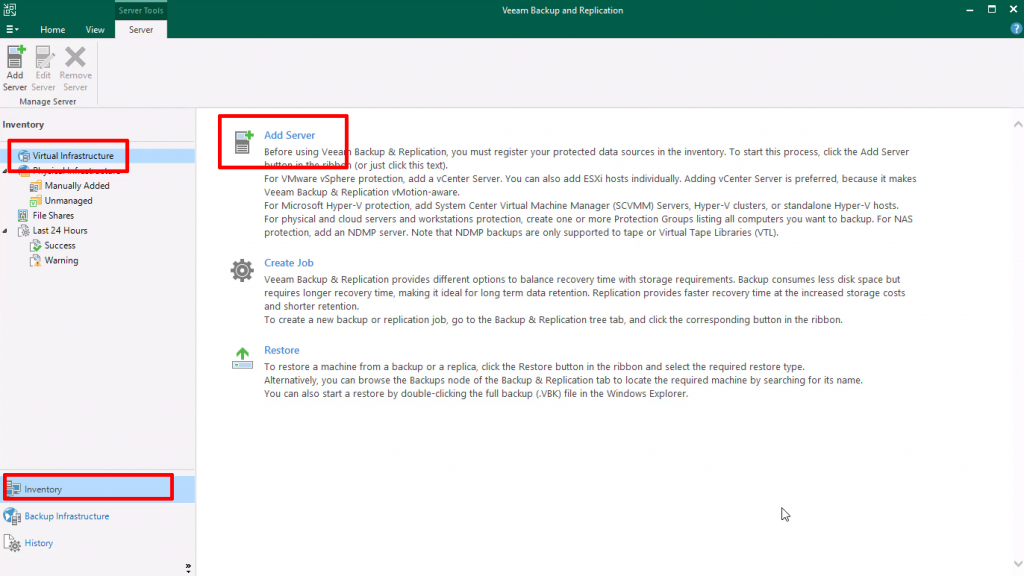

Step 2: Migrating from EC2 to NC2

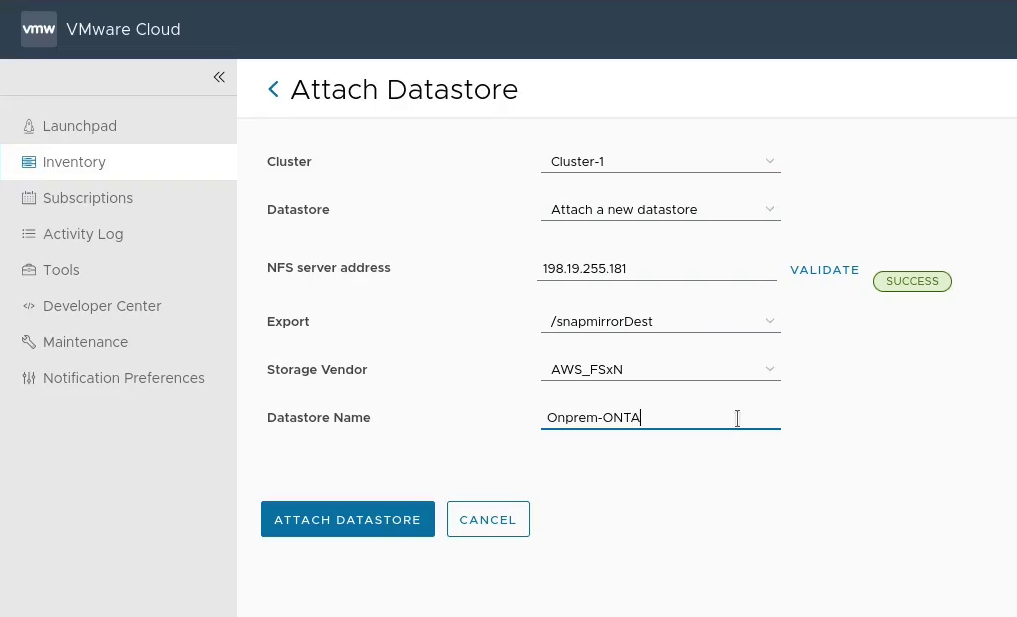

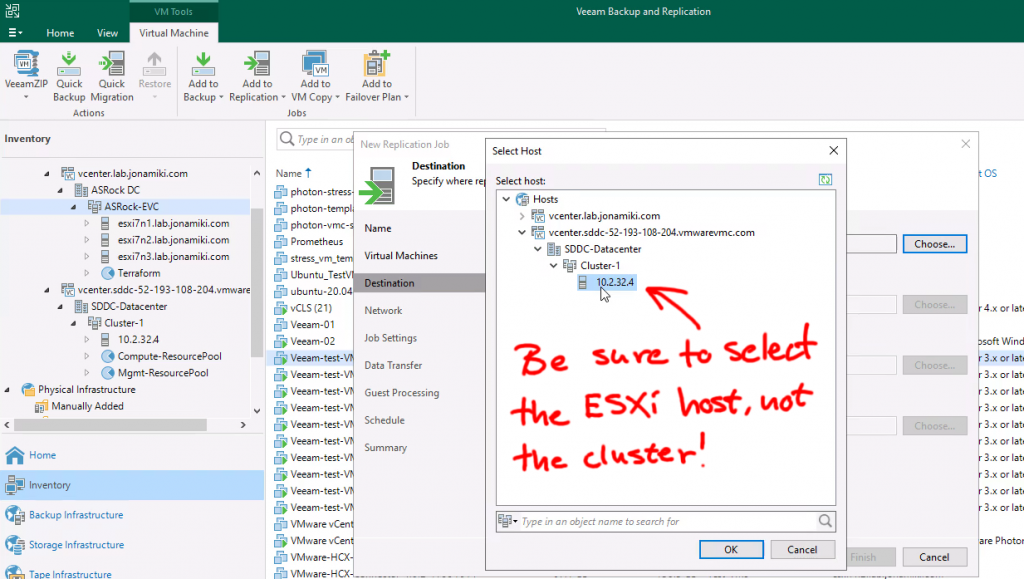

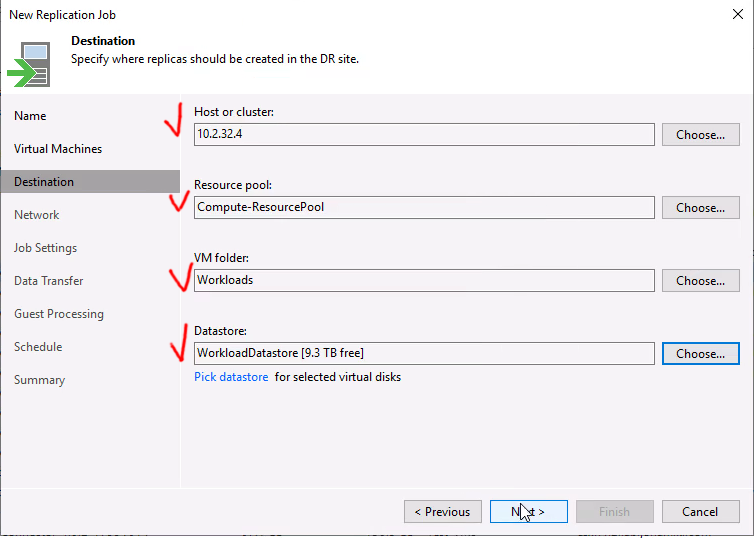

For the migration we have deployed Nutanix Move on the NC2 cluster. We have also created an FVN overlay no-NAT network with the same CIDR as the subnet the EC2 instance is connected to, although the DHCP range is set to avoid any of the IPs currently used by instances on that subnet.
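Before creating the overlay subnet it can be worth double-checking that the DHCP pool really does avoid the addresses we intend to restore later. The sketch below does that with Python's standard `ipaddress` module. The pool boundaries used in the usage note are made-up values for illustration, not the ones from our test environment.

```python
import ipaddress


def pool_conflicts(cidr, pool_start, pool_end, used_ips):
    """Return the addresses from `used_ips` that fall inside the DHCP pool.

    Raises ValueError if the pool is not an ordered range inside `cidr`.
    """
    net = ipaddress.ip_network(cidr)
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    if start not in net or end not in net or start > end:
        raise ValueError("DHCP pool must be an ordered range inside the subnet")
    return [ip for ip in used_ips if start <= ipaddress.ip_address(ip) <= end]
```

For example, `pool_conflicts("172.30.1.0/24", "172.30.1.200", "172.30.1.250", ["172.30.1.34"])` returns an empty list, meaning the pool is safe to use, while a pool of `172.30.1.10` to `172.30.1.100` would report `172.30.1.34` as a conflict.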

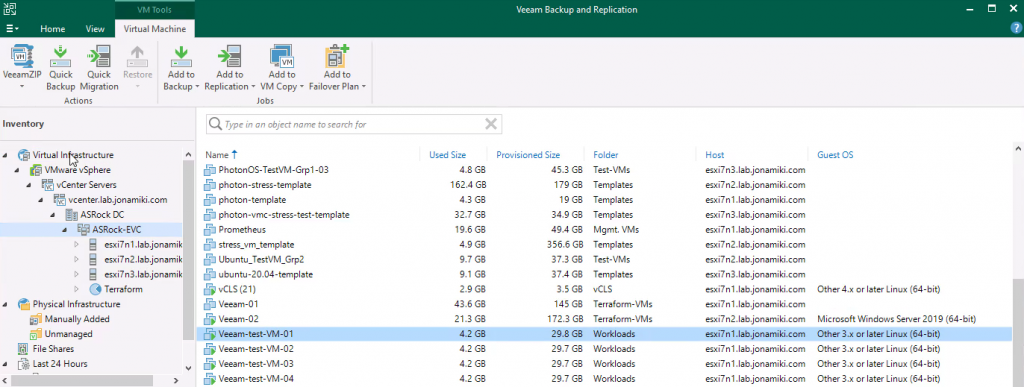

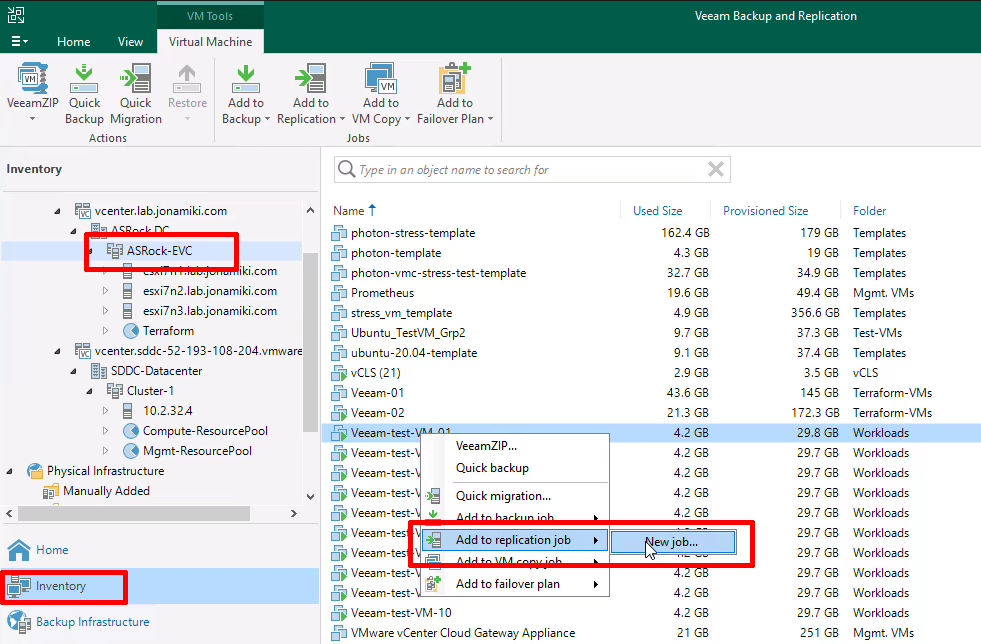

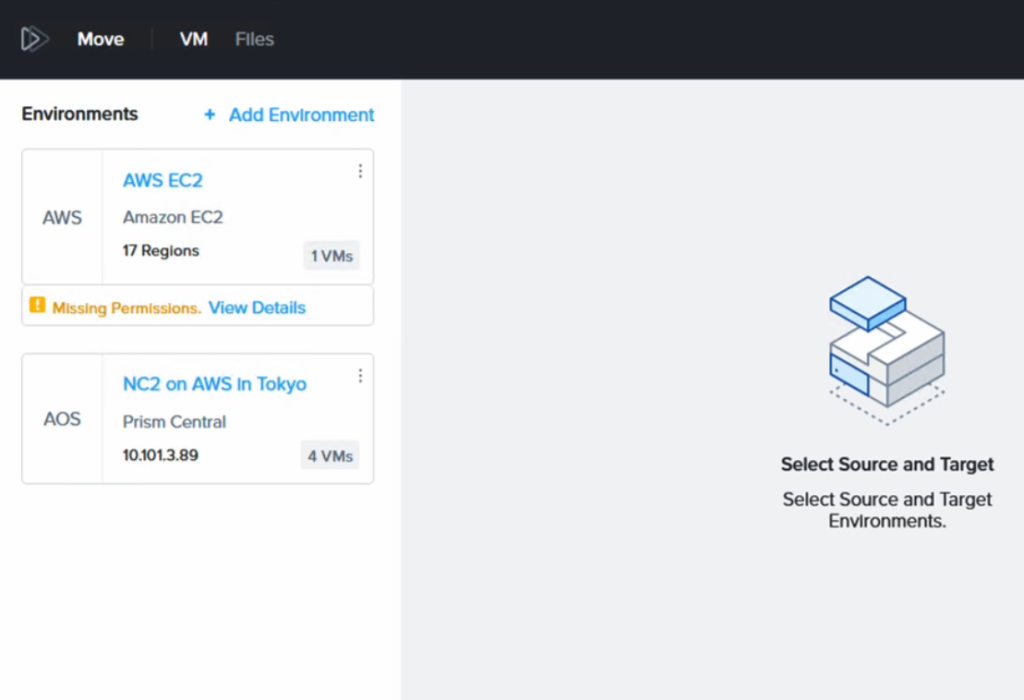

Move has the NC2 cluster and the AWS environment added in as migration sources / targets.

It complains about “missing permissions” but this is because we have only given it permission to migrate FROM EC2, not TO EC2. Since that is all we want to do, this is fine. Please refer to the Move manual for details on the AWS IAM policy required depending on your use case.

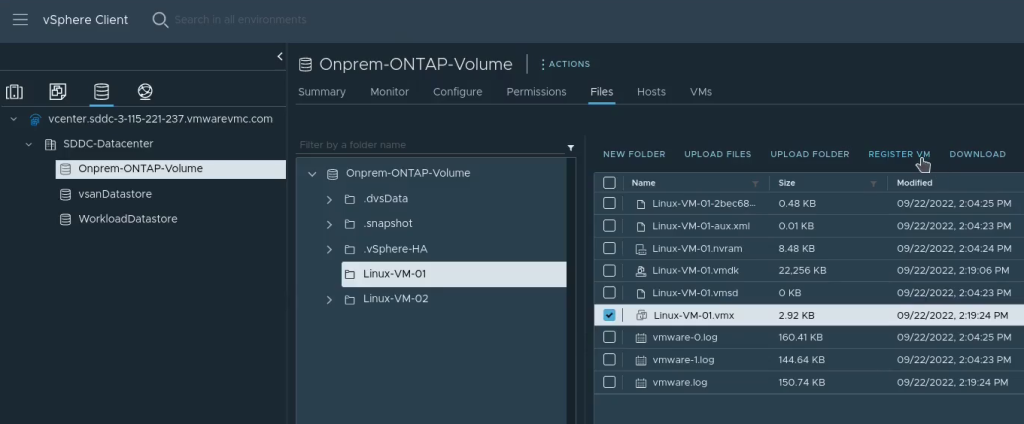

Post migration the VM will have a different IP address (taken from the DHCP range on the FVN subnet it is connected to).

We use a Python script in the next section to revert the IP address to what it was while running as an EC2 instance.

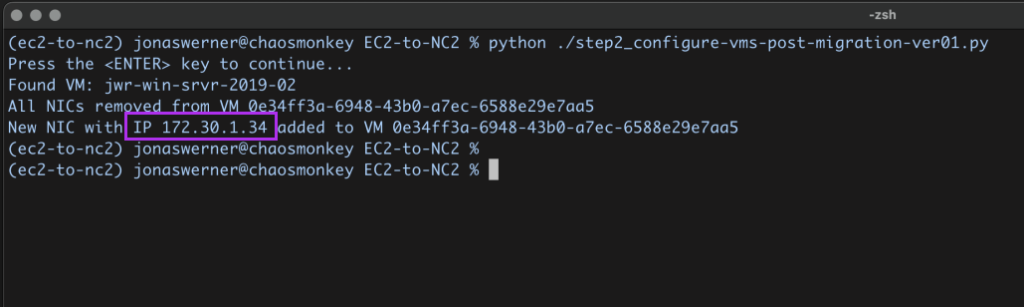

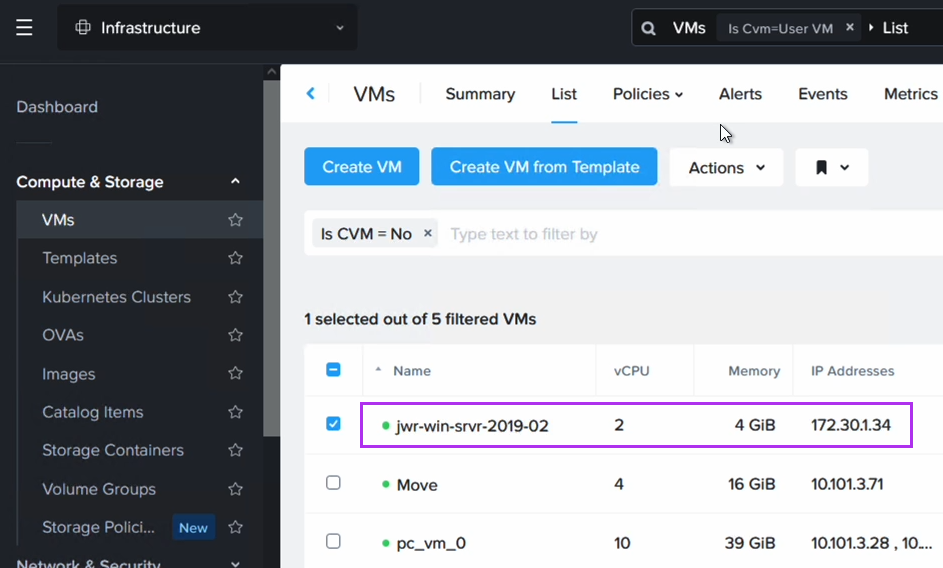

Step 3: Revert the IP address to match what the EC2 instance had originally

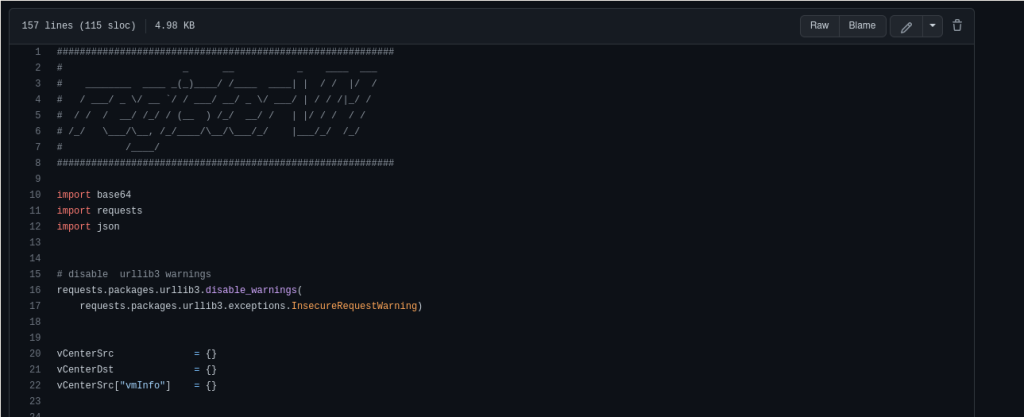

Now we execute a Python script which will look up the instance name in DynamoDB, match it with the VM name in NC2 and then remove and re-create the network interface using the Prism Central API. The new interface will have the original IP configured as a static address.
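The core of that logic can be sketched in a few lines of Python. This is not the script from the repository. It only illustrates the two steps involved: matching captured names to VM names, and swapping the NIC list in a VM spec for a single NIC with a static address. The spec layout (`resources.nic_list`, `subnet_reference`, `ip_endpoint_list`) is our assumption of a Prism Central v3-style VM spec, so verify the field names against the API version you are running before sending anything back to Prism Central.

```python
import copy


def plan_ip_changes(records, vms):
    """Pair each migrated VM with the IP it had on EC2.

    records: {name_tag: original_ip} as read from DynamoDB.
    vms:     [{"name": ..., "uuid": ...}] as listed from Prism Central.
    VMs without a captured record are left alone.
    """
    return [(vm["uuid"], records[vm["name"]]) for vm in vms if vm["name"] in records]


def with_static_nic(vm_spec, subnet_uuid, ip):
    """Return a copy of `vm_spec` whose NICs are replaced by one static NIC.

    The input spec is not modified, so the old NIC definition stays
    available if the update has to be rolled back.
    """
    new_spec = copy.deepcopy(vm_spec)
    new_spec["resources"]["nic_list"] = [
        {
            "subnet_reference": {"kind": "subnet", "uuid": subnet_uuid},
            "ip_endpoint_list": [{"ip": ip}],  # assumed v3-style field names
            "is_connected": True,
        }
    ]
    return new_spec
```

Replacing the whole NIC rather than editing it in place mirrors the remove-and-re-create approach described above, which is also why the MAC address changes.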

The script can be downloaded from the GitHub repository linked above. Before running it, export the Prism Central username and password as environment variables. Also update the Prism Central IP, the subnet name, the AWS region and the DynamoDB table name in the script to match your environment.
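Reading credentials from the environment keeps them out of the script and out of version control. A minimal sketch of that pattern is shown below. The variable names `PC_USERNAME` and `PC_PASSWORD` are hypothetical, so check the script itself for the names it actually expects.

```python
import os

# Hypothetical variable names; use the ones the script you run expects.
USERNAME_VAR = "PC_USERNAME"
PASSWORD_VAR = "PC_PASSWORD"


def load_credentials(env=None):
    """Return (username, password), failing early if either is missing."""
    env = os.environ if env is None else env
    missing = [name for name in (USERNAME_VAR, PASSWORD_VAR) if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return env[USERNAME_VAR], env[PASSWORD_VAR]
```

Failing before the first API call is deliberate: it avoids a half-finished run where some VMs have had their NIC replaced and others have not.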

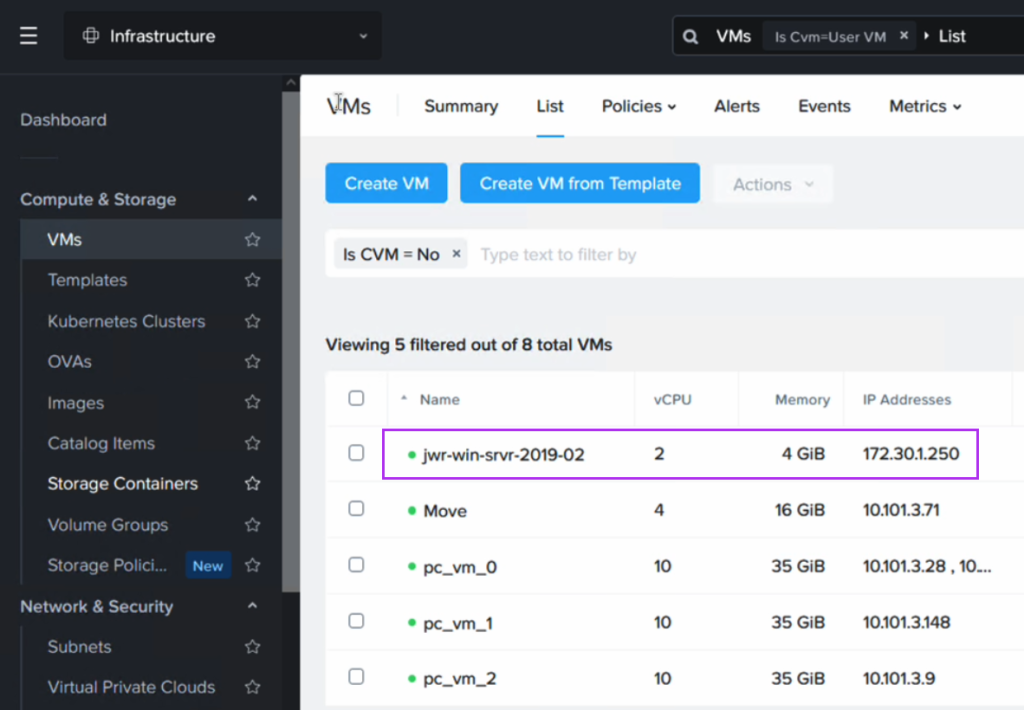

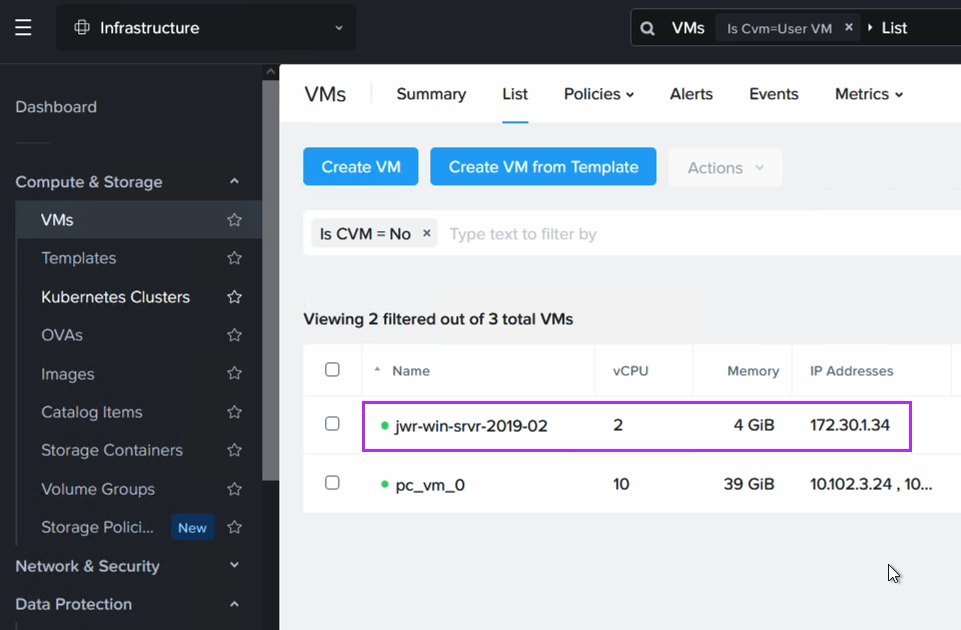

After running the script we can now verify that the VM has received its original IP address. Note that since we have replaced the NIC in this process, the IP is the same as before, but the MAC address will have changed.

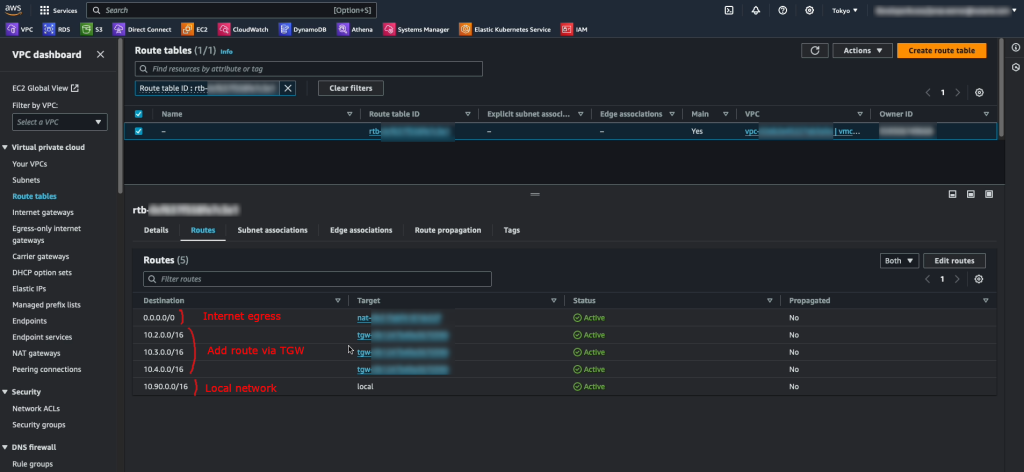

Routing after migration from EC2 to NC2 in Tokyo

Now that the VM exists on NC2 we need to update our routing to ensure that traffic is directed to this VM and not the original EC2 instance (which has now been shut down by Move after the migration).

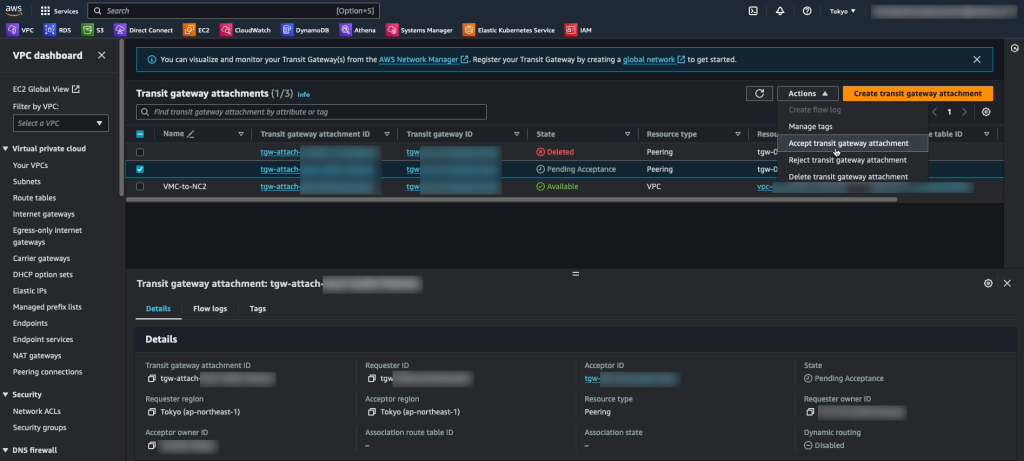

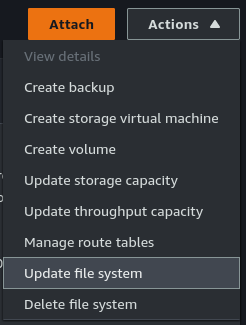

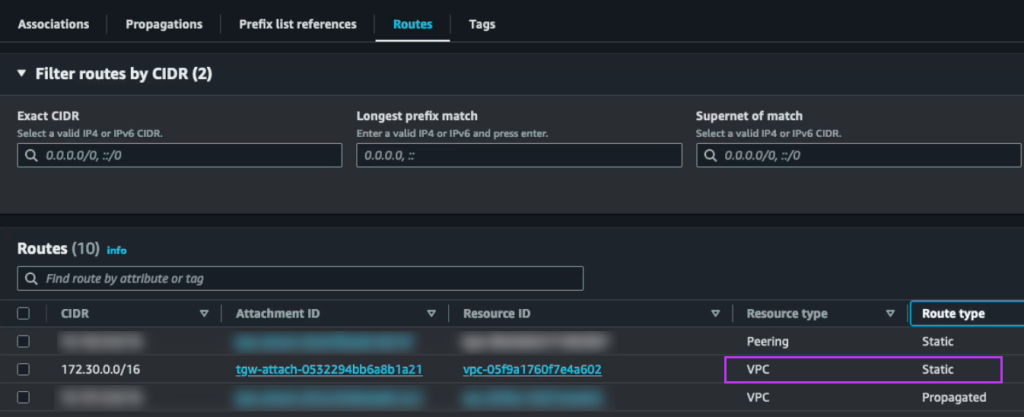

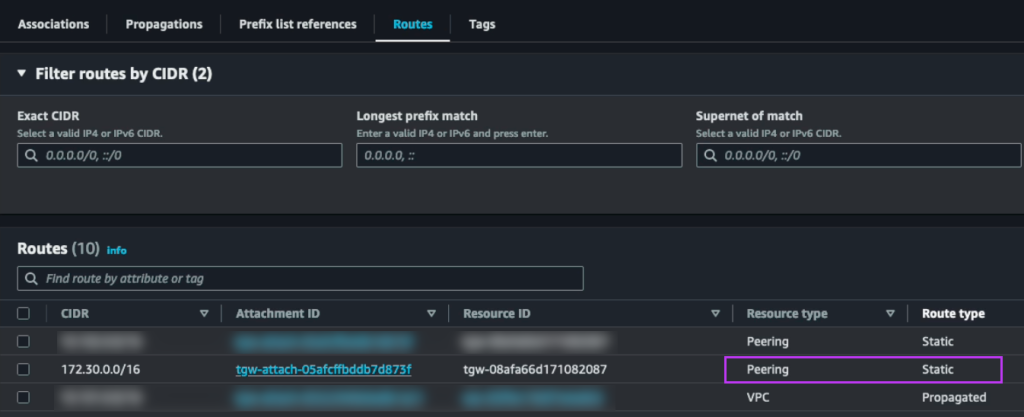

To do this we disconnect the EC2 VPC from the TGW and add the subnet as a static route in the TGW, this time pointing to the NC2 VPC rather than the EC2 VPC. The subnet should already exist as an “Allowed prefix” on the DXGW, so that part can be left as-is.

The attachments highlighted in red show the active route to the 172.30.1.0/24 subnet, which has now been changed to point to the NC2 VPC. Since the subnet is an FVN no-NAT subnet it will show up in the NC2 VPC route table.
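We made these changes in the AWS console, but the static route part can also be scripted. The sketch below uses boto3's EC2 client to create the static route, or to replace it if one already exists for the same CIDR. The route table and attachment IDs are placeholders you would look up in your own account, and detaching the EC2 VPC from the TGW is left as a separate manual step.

```python
def route_change(existing_routes, cidr, attachment_id):
    """Decide which call moves a static route for `cidr` to `attachment_id`.

    `existing_routes` is the "Routes" list returned by
    search_transit_gateway_routes. Returns "create", "replace" or "noop".
    """
    for route in existing_routes:
        if route.get("DestinationCidrBlock") != cidr or route.get("Type") != "static":
            continue
        targets = [
            a.get("TransitGatewayAttachmentId")
            for a in route.get("TransitGatewayAttachments", [])
        ]
        return "noop" if attachment_id in targets else "replace"
    return "create"


def point_route_at(ec2, route_table_id, cidr, attachment_id):
    """Make the TGW route table send `cidr` to `attachment_id`.

    `ec2` is a boto3 EC2 client for the region that owns the TGW.
    """
    found = ec2.search_transit_gateway_routes(
        TransitGatewayRouteTableId=route_table_id,
        Filters=[{"Name": "route-search.exact-match", "Values": [cidr]}],
    )["Routes"]
    action = route_change(found, cidr, attachment_id)
    kwargs = {
        "DestinationCidrBlock": cidr,
        "TransitGatewayRouteTableId": route_table_id,
        "TransitGatewayAttachmentId": attachment_id,
    }
    if action == "create":
        ec2.create_transit_gateway_route(**kwargs)
    elif action == "replace":
        ec2.replace_transit_gateway_route(**kwargs)
    return action
```

With `ec2 = boto3.client("ec2", region_name="ap-northeast-1")`, a call such as `point_route_at(ec2, "<tgw-route-table-id>", "172.30.1.0/24", "<nc2-vpc-attachment-id>")` would point the subnet at the NC2 VPC attachment.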

Wrapping up the migration part

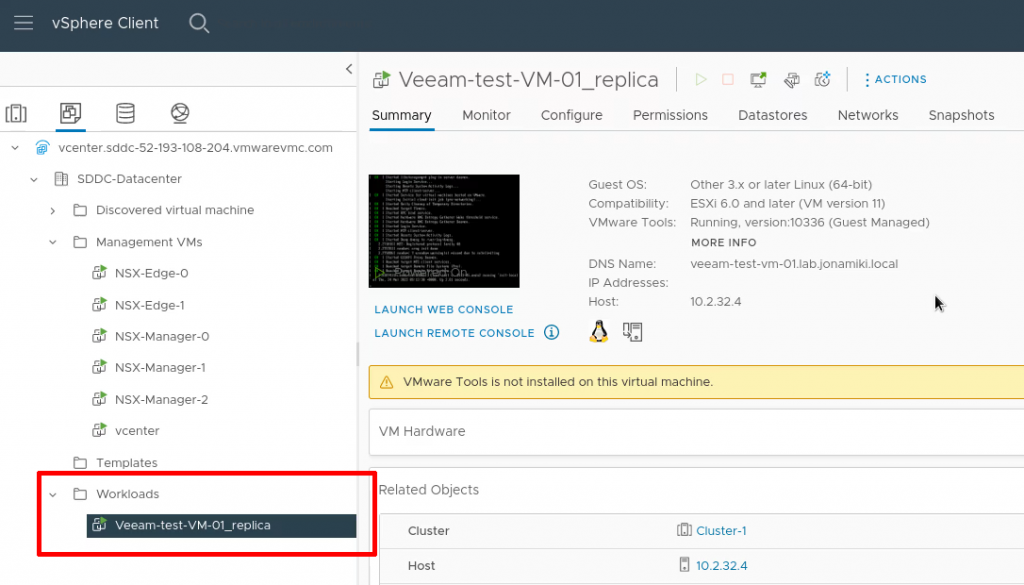

Now our EC2 instance has been migrated to NC2. Its IP address is intact and since we have updated the routing between AWS and the on-premises DC, the on-prem users can access the migrated instances just like they normally would. In fact, apart from the maintenance window for the migration, VM power-up on NC2 and the routing switch, they are unlikely to notice that their former EC2 instance is now running on another platform.

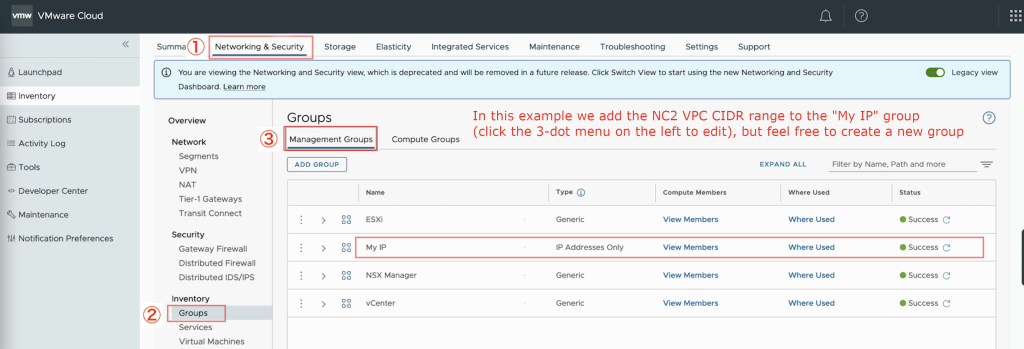

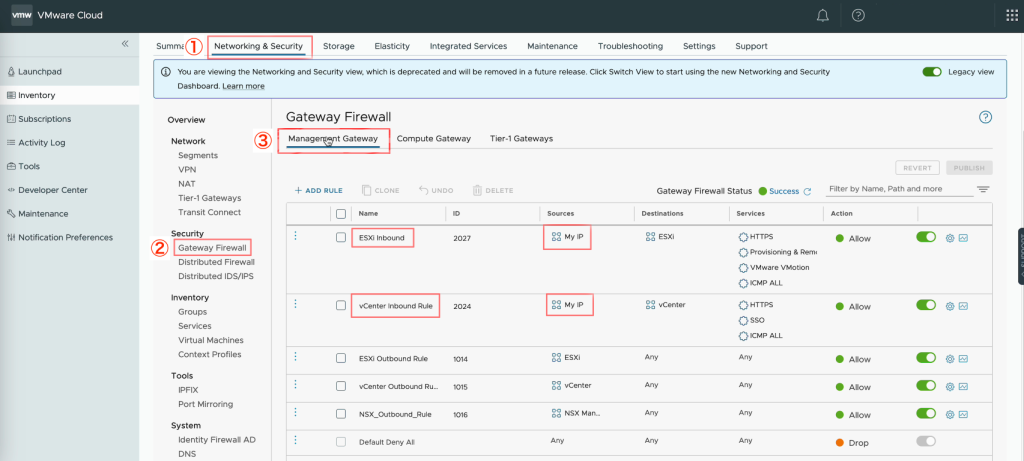

Configuring DR between the Tokyo and Osaka NC2 clusters

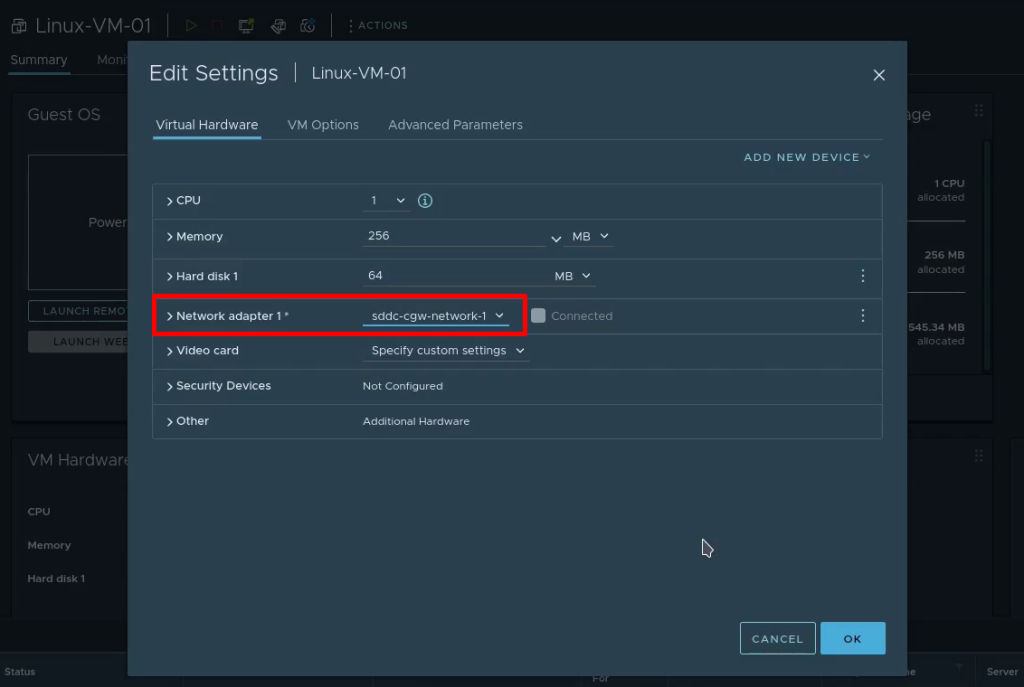

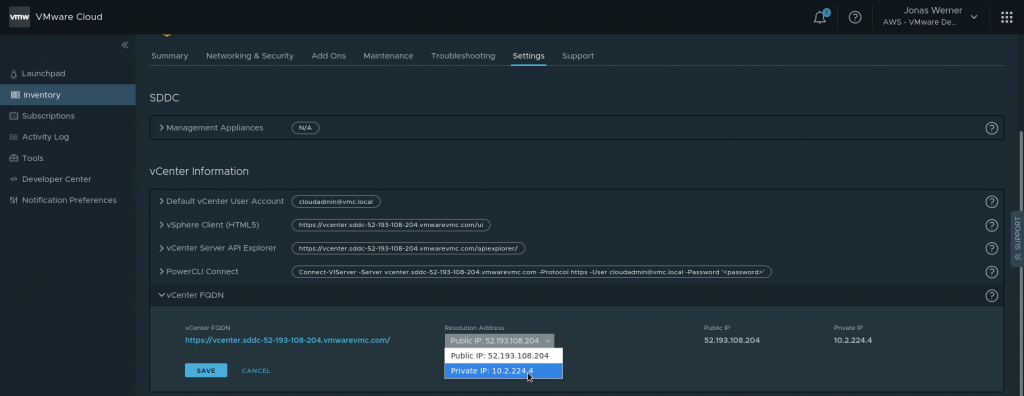

At this point all we have left to do is set up the DR configuration between the two Nutanix clusters in Tokyo and Osaka. Since DR is a built-in component, this is very straightforward. We link the two Prism Central instances and create the FVN overlay network on the Osaka side as well, so that the VMs keep the same CIDR range after failover.

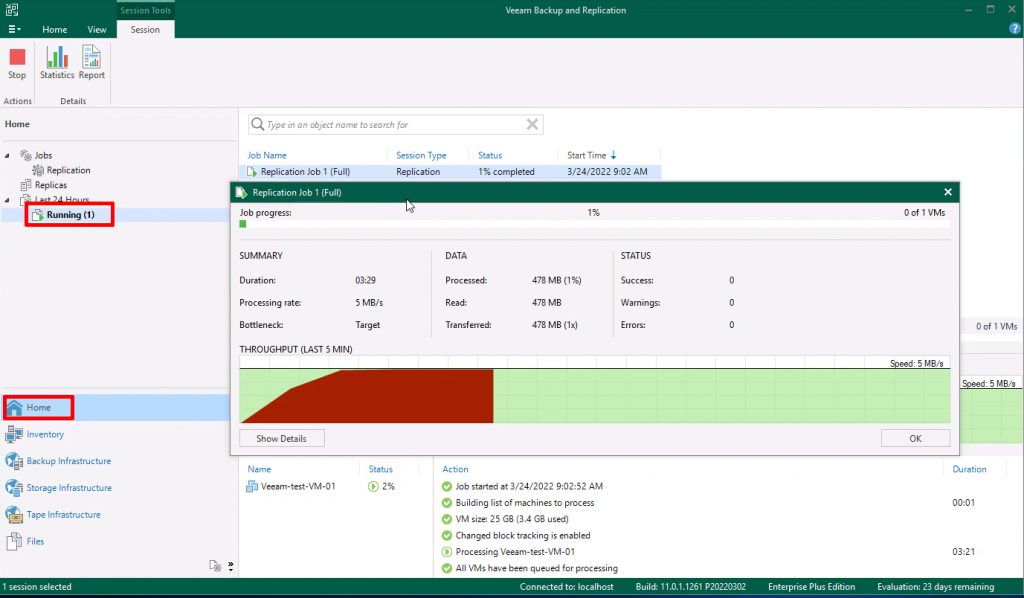

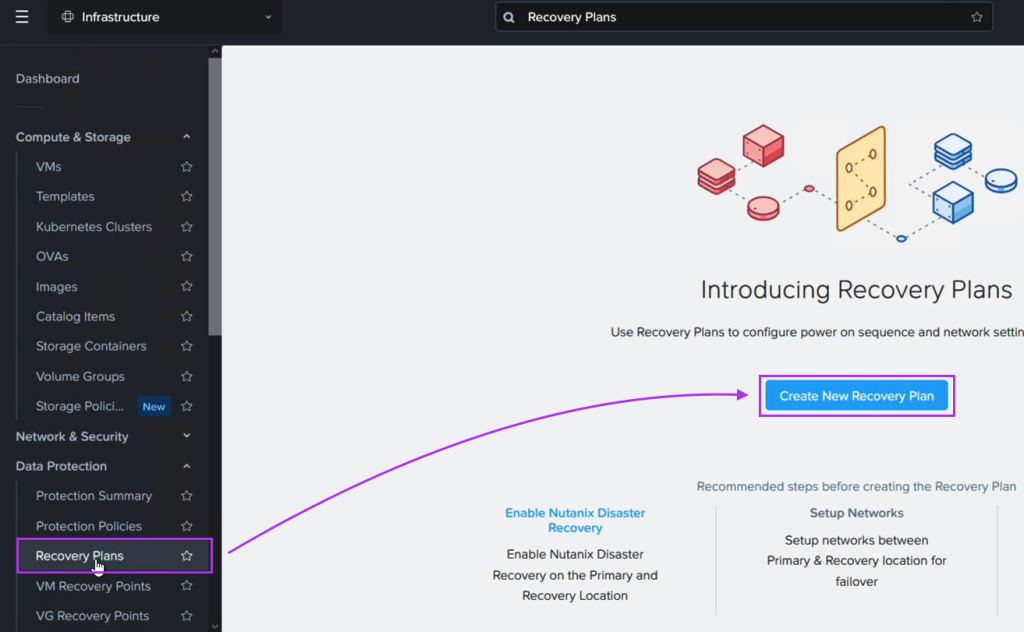

After enabling Disaster Recovery we can easily create the DR plan through Prism Central.

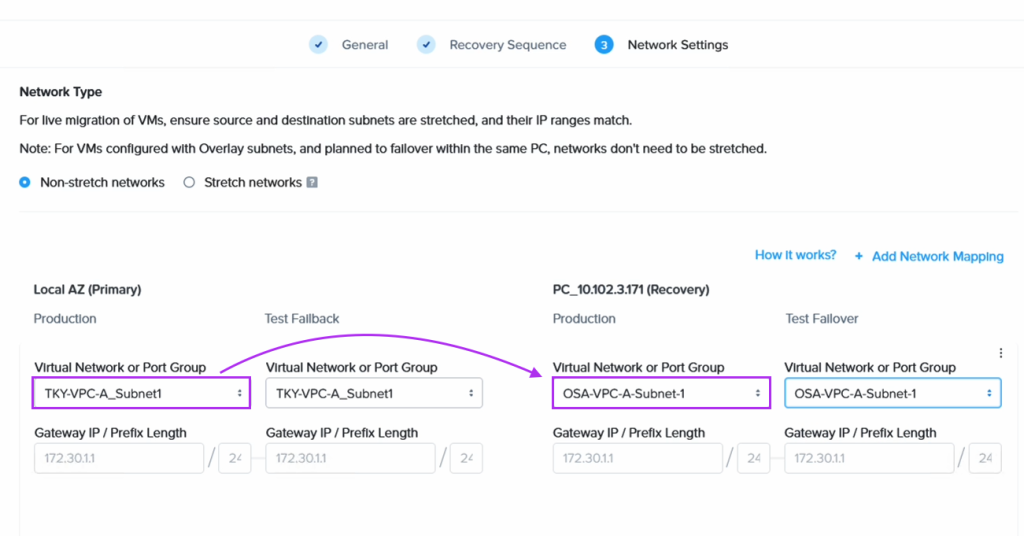

When we create the DR plan we map the Tokyo network to its equivalent on the Osaka DR site, so that the VMs fail over to a network with the same CIDR range.

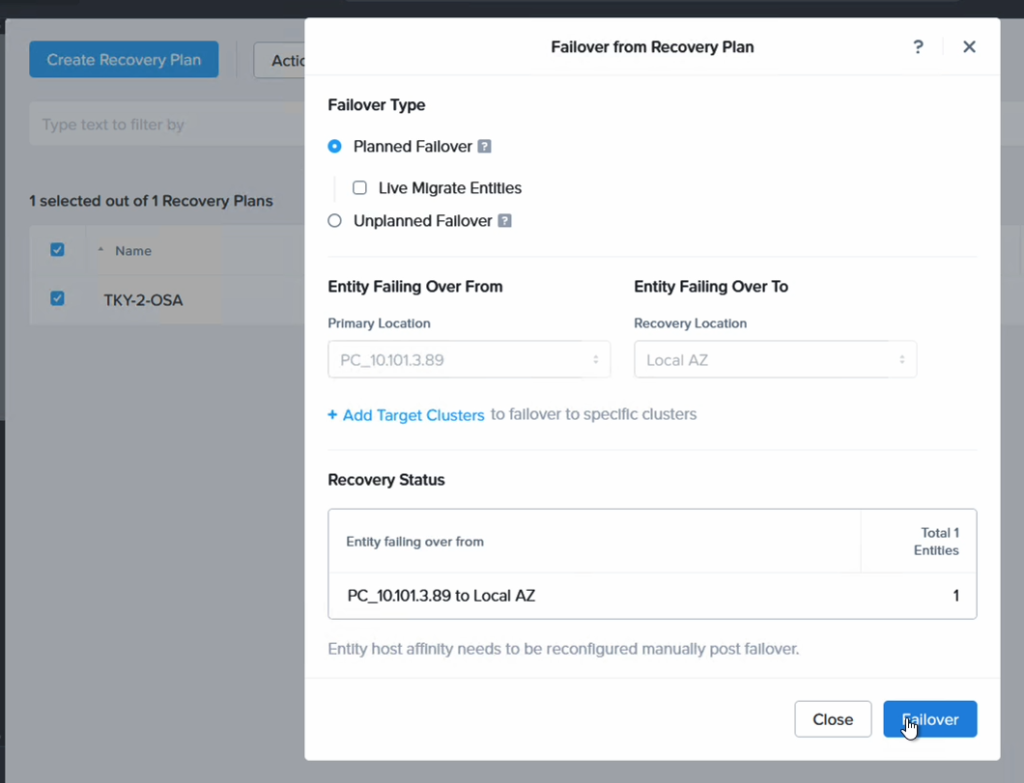

Finally we proceed to fail over our VM from NC2 in Tokyo to NC2 in Osaka.

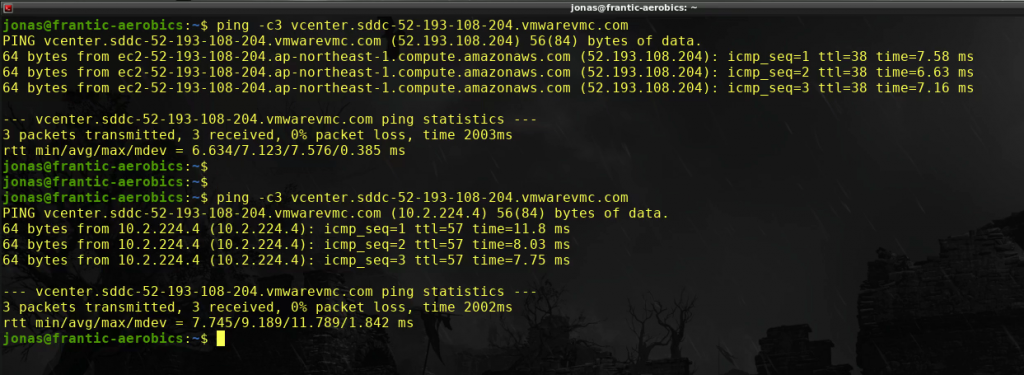

After failing over we can confirm that the VM is not only powered up in Osaka, but that it has also retained the IP address, as expected.

Updating the routing to point to Osaka rather than Tokyo

After failing over from Tokyo to Osaka we also need to update the routes pointing to the 172.30.1.0/24 network by modifying the TGW in Osaka. In diagram form it looks as follows.

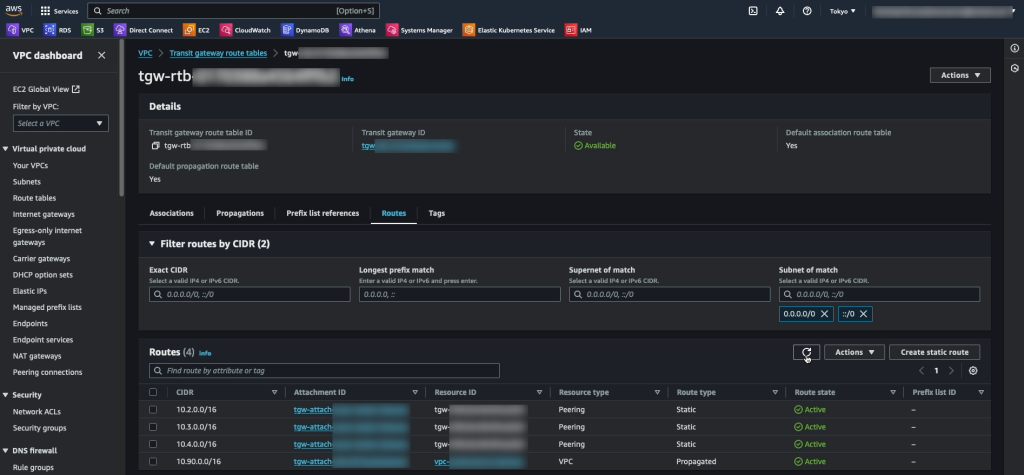

On the Osaka TGW we create a static route to the 172.30.0.0/16 network pointing to the Osaka NC2 VPC.

On the Tokyo TGW we update the static route that previously pointed to the local NC2 VPC so that it points to the peering connection to Osaka instead.
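To sanity-check the resulting path, the two route tables can be modelled in a few lines of Python and traced with a longest-prefix-match lookup. This is a toy model, not something that talks to AWS. The names `tokyo-tgw`, `osaka-tgw` and `osaka-nc2-vpc` are labels we made up for the hops, and the peering connection is simplified to "the next hop is the other TGW".

```python
import ipaddress


def next_hop(route_table, ip):
    """Longest-prefix match of `ip` against {cidr: target}, or None."""
    addr = ipaddress.ip_address(ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def trace(tables, start, ip, max_hops=8):
    """Follow next hops from `start` until we leave the modelled routers."""
    path = [start]
    current = start
    while current in tables and len(path) <= max_hops:
        hop = next_hop(tables[current], ip)
        if hop is None:
            break
        path.append(hop)
        current = hop
    return path


# Route tables after failover, as described above.
after_failover = {
    "tokyo-tgw": {"172.30.1.0/24": "osaka-tgw"},       # via the TGW peering
    "osaka-tgw": {"172.30.0.0/16": "osaka-nc2-vpc"},
}
```

Tracing `172.30.1.34` from `tokyo-tgw` with `trace(after_failover, "tokyo-tgw", "172.30.1.34")` yields `["tokyo-tgw", "osaka-tgw", "osaka-nc2-vpc"]`, which is the path traffic arriving in Tokyo should take to reach the failed-over VM.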

Results and wrap-up

With these routing changes implemented, users in the on-premises DC can continue to access the very same VMs on the very same IP addresses. This holds even after those workloads have been migrated from EC2 to NC2 in Tokyo and then failed over with a DR plan from the Tokyo region to the Osaka region.

Hope that was helpful! Please reach out to your local Nutanix representative for discussions if this type of solution is of interest. Thank you for reading!

Links

- Terraform / OpenTofu templates used to build the test environment

- PowerShell and Python code used

- Nutanix NC2 page

- Nutanix Move manual