This is a quick note to show a concept of using the ChatGPT API for translation. In this case for English to Japanese, but it could be changed into any language. Since we want to talk to it we use Amazon Transcribe to turn speech into text. That text is sent to ChatGPT for translation. When the translated text comes back we feed it into Amazon Polly to turn it into speech again.

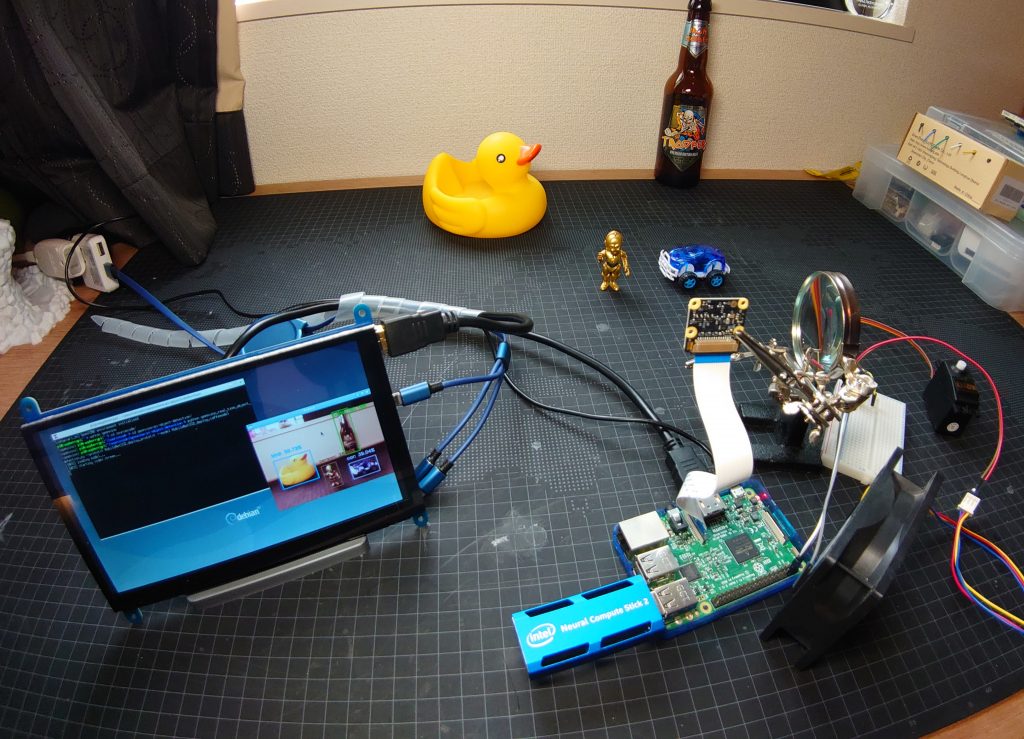

This is all running on a Raspberry Pi 3b+

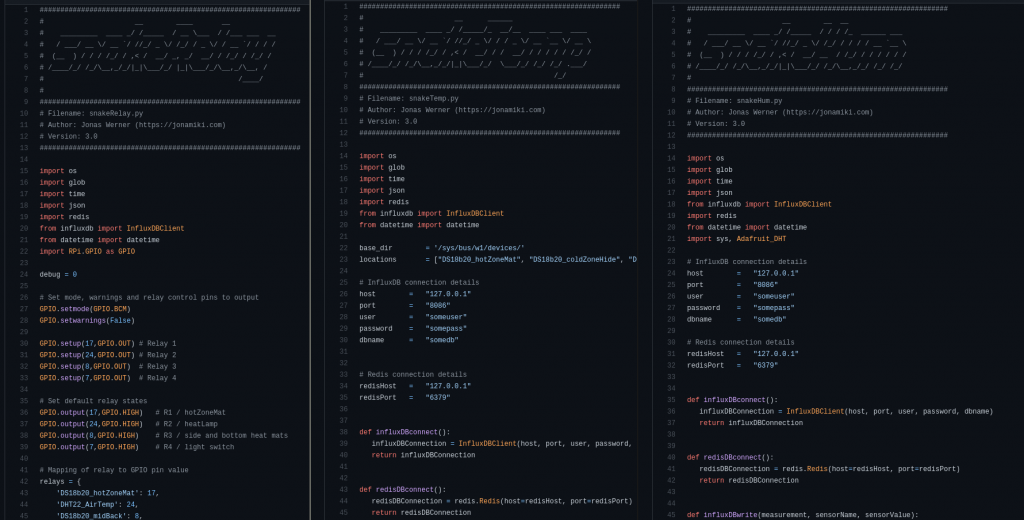

Source code

Source code can be found on GitHub:

https://github.com/jonas-werner/chatBot