Summary

In this blog post we explore two ways to use Nutanix Move 5.3 to migrate Virtual Machines from an existing VMware Cloud on AWS (VMC) environment to Nutanix Cloud Clusters on AWS (NC2). This is done while preserving both IP and MAC addresses of the VMs being migrated.

The most straightforward method is to deploy NC2 into the Connected VPC. This is a VPC which is attached at the time of deployment of the VMware Cloud on AWS environment and is owned by the customer. Alternatively, we can deploy NC2 into a completely separate VPC and connect to the VMware Cloud on AWS cluster through a VMware Transit Connect (VTGW).

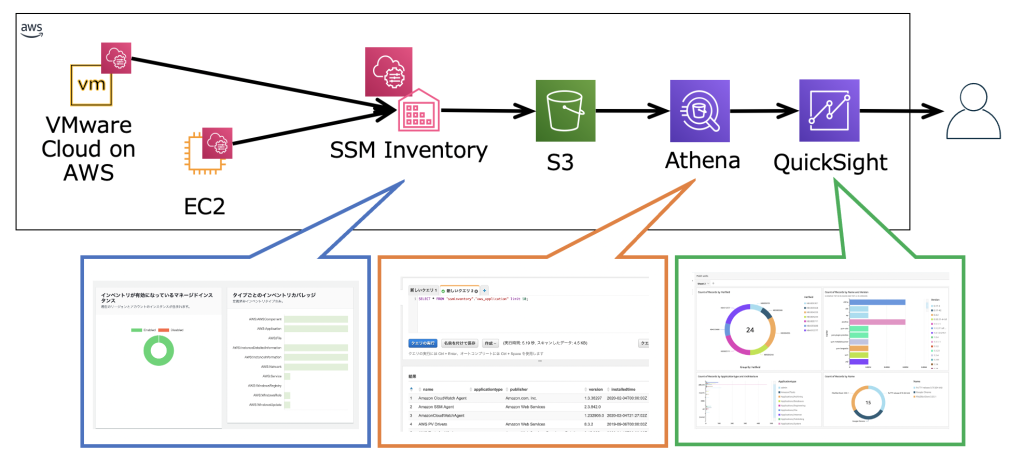

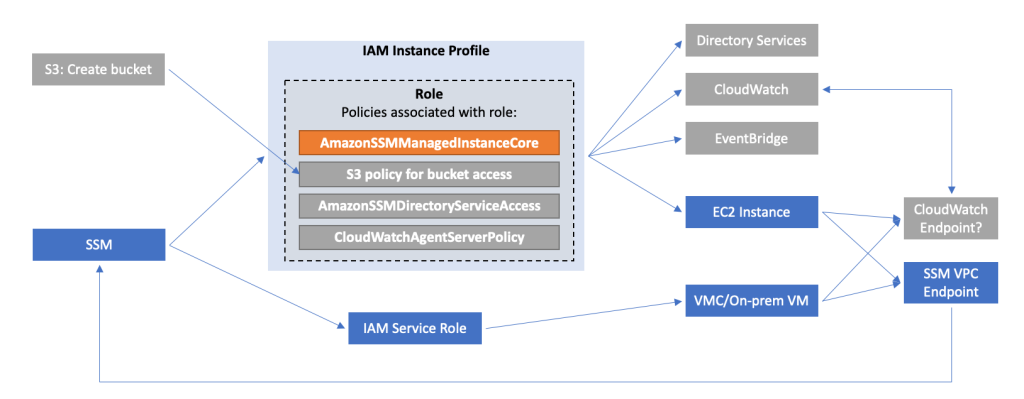

Architecture overview

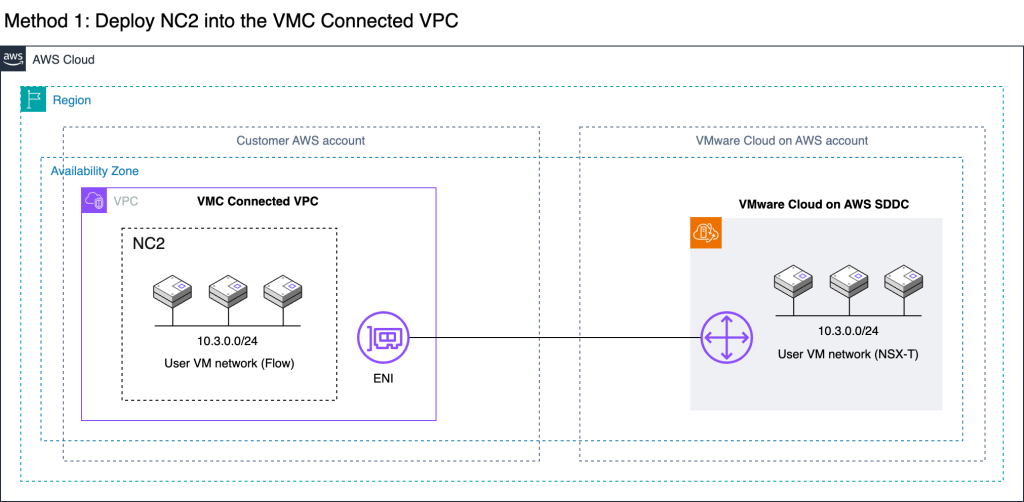

The two methods are illustrated below. Method 1 is recommended due to its ease of setup, simple networking and lack of data transfer charges. However, care needs to be taken to ensure there is no overlap with any existing resources deployed into the Connected VPC, for example by creating new private subnets in the Connected VPC specifically for the NC2 deployment.

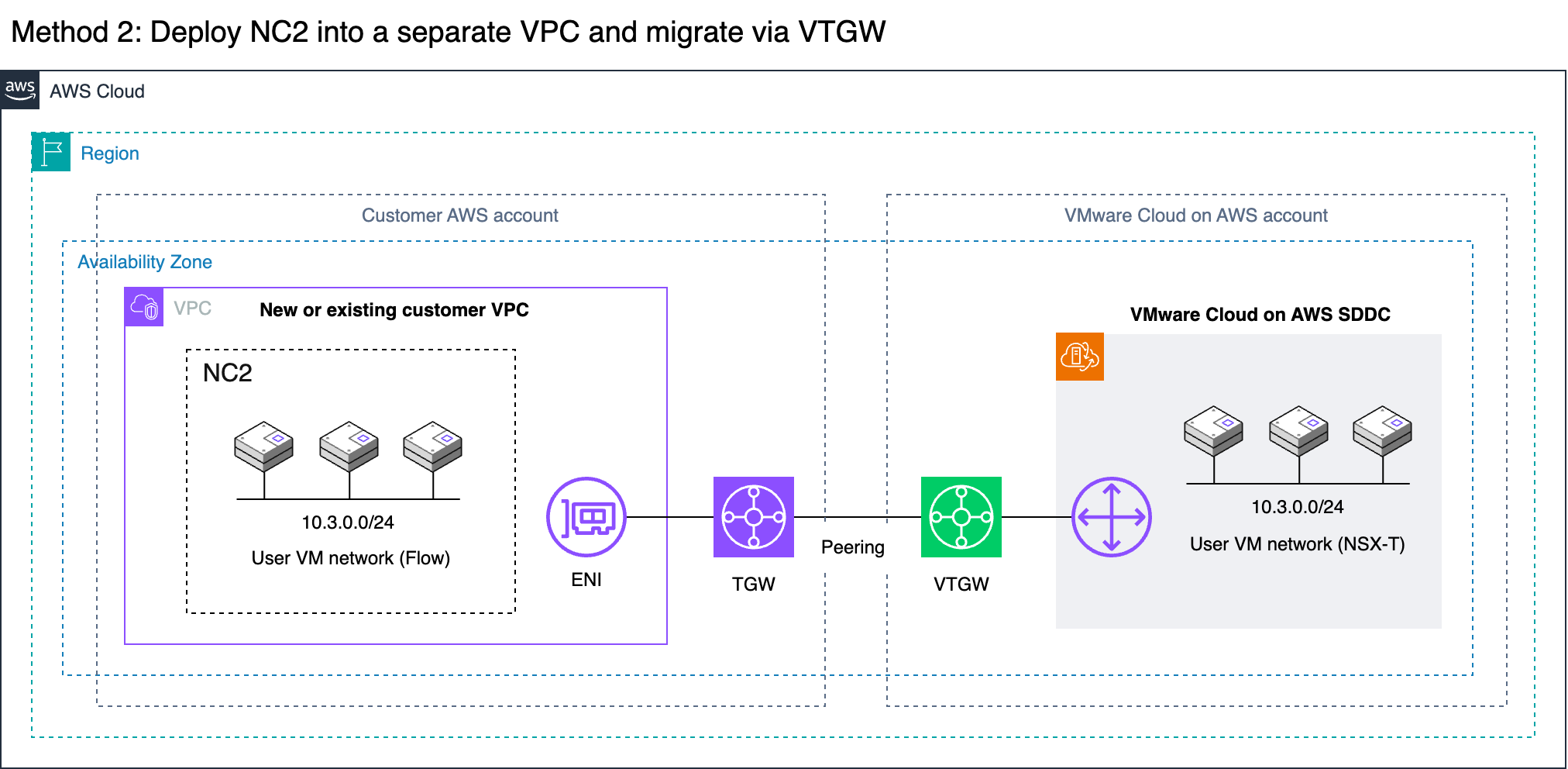

Method 2 covers migrating via a VMware Transit Connect (VTGW). Although it has additional routing considerations, this is also a fully viable option. In this example we peer the VTGW with a normal customer-controlled AWS Transit Gateway (TGW). Note that with Method 2 the VTGW can also connect directly to a VPC without the need for a TGW, but this will limit the routing options for the customer.

It’s important to keep in mind that both options can migrate VMs from VMC without changing IP or MAC addresses. Neither option requires L2 Extension of user VM networks. This underlines the ease with which a migration like this can be done. There are of course some caveats. Refer to the VM networking section below for more detail.

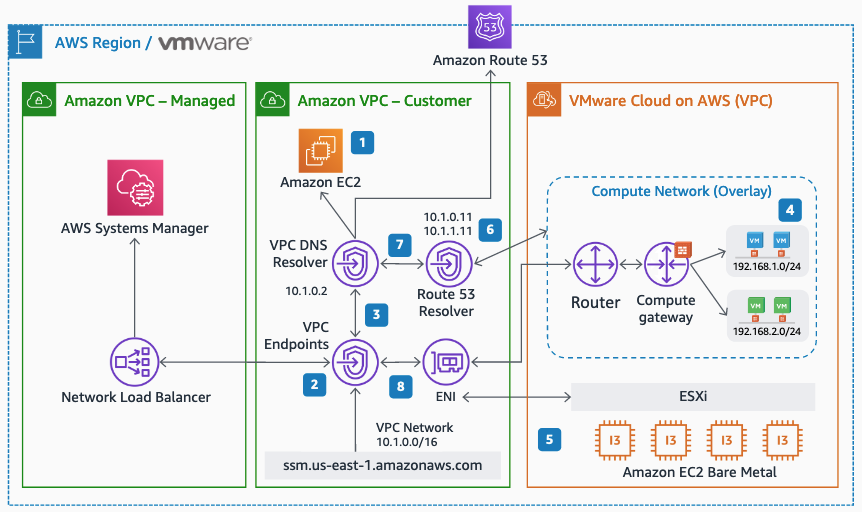

VM networking

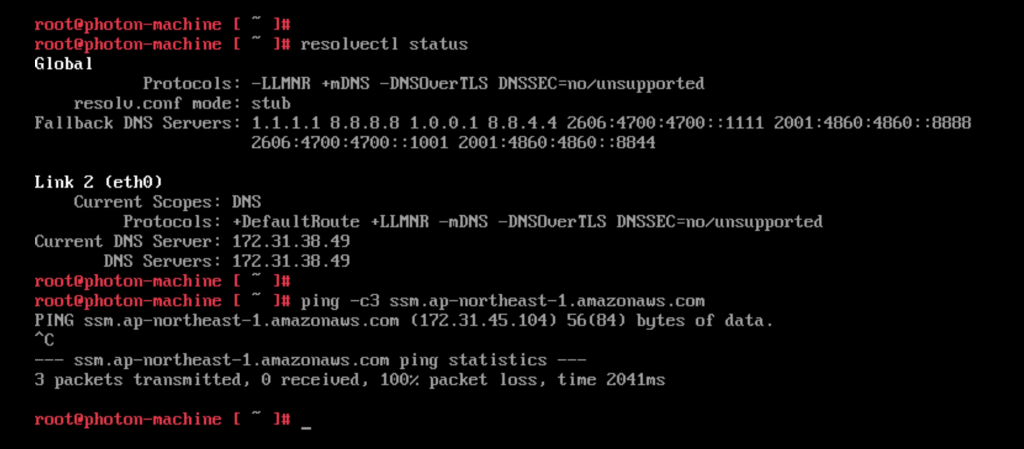

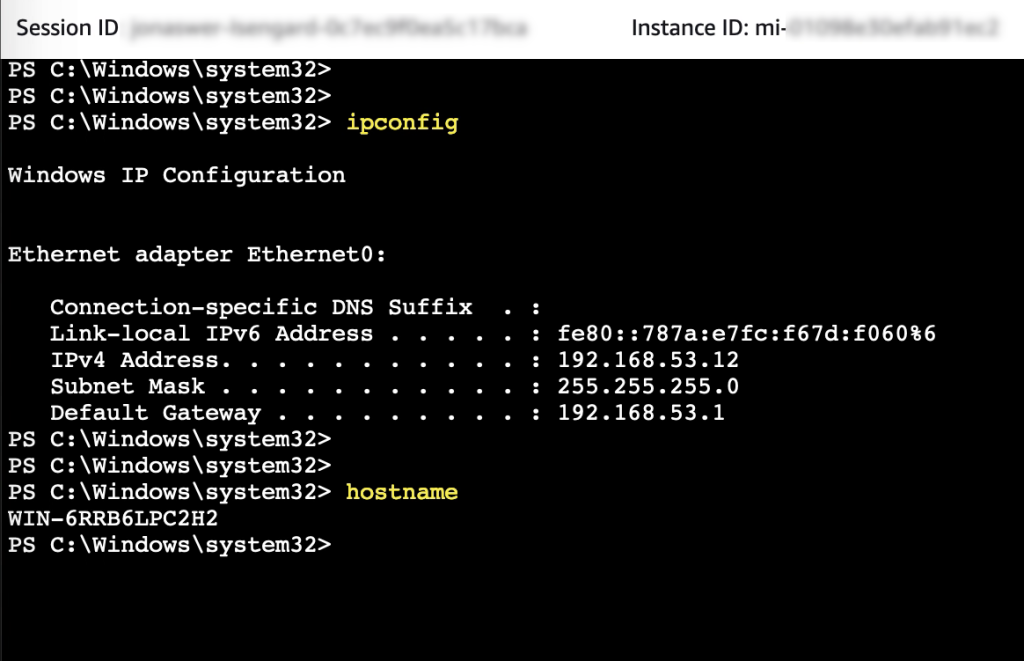

The whole migration can be done without L2 extension of VM networks. Virtual Machines in VMC are connected to overlay networks created with NSX-T using the VMC management console. These are represented by the “10.3.0.0/24” network in this example. Overlay networks with the same CIDR ranges can be created using Nutanix Flow on the NC2 cluster. As a result, VMs migrated from VMC to NC2 don’t need to change their IP or MAC addresses.

Note that if L2 Extension is not used, there is no communication between the overlay networks in NC2 and the overlay networks in VMC. Therefore, plan the migration so that VMs which need to communicate are moved together.
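One way to plan this is to treat the VMs and their communication dependencies as a graph and migrate each connected group as one wave. The following is a minimal sketch of that idea, not a Move feature. The VM names and the dependency list are hypothetical and would come from your own inventory or application documentation.

```python
from collections import defaultdict


def migration_waves(vms, dependencies):
    """Group VMs into waves so that VMs which communicate with each other,
    directly or through other VMs, land in the same wave."""
    graph = defaultdict(set)
    for a, b in dependencies:
        graph[a].add(b)
        graph[b].add(a)

    seen, waves = set(), []
    for vm in vms:
        if vm in seen:
            continue
        # Walk everything reachable from this VM and collect it as one wave.
        stack, wave = [vm], []
        seen.add(vm)
        while stack:
            current = stack.pop()
            wave.append(current)
            for neighbour in graph[current]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
        waves.append(sorted(wave))
    return waves


# Hypothetical inventory: a three-tier application plus an independent VM.
vms = ["web01", "app01", "db01", "batch01"]
dependencies = [("web01", "app01"), ("app01", "db01")]
waves = migration_waves(vms, dependencies)
print(waves)
```

Each inner list is a set of VMs that should be cut over together, since they would otherwise be split across overlay networks that cannot reach each other.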

Also note that Flow does not advertise the overlay networks into the VPC route table. As long as VMware Cloud on AWS is attached to the Connected VPC, the routes for the VMs will point to the active ENI created during the VMC cluster deployment. Destroying the VMC cluster will remove these ENIs and the corresponding routes from the Connected VPC route table.

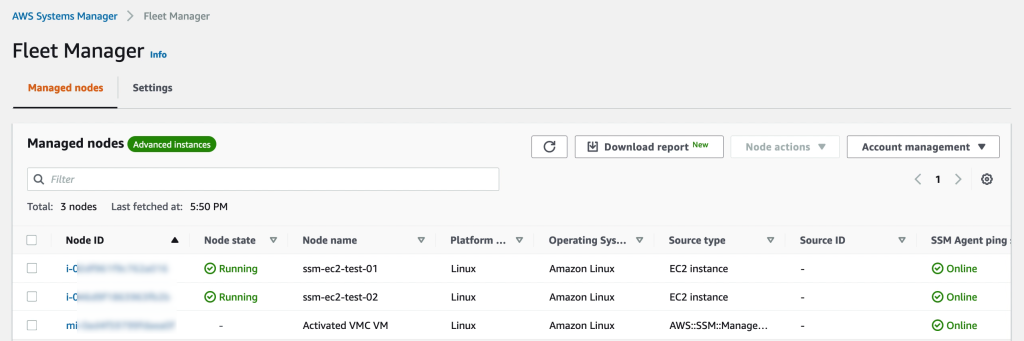

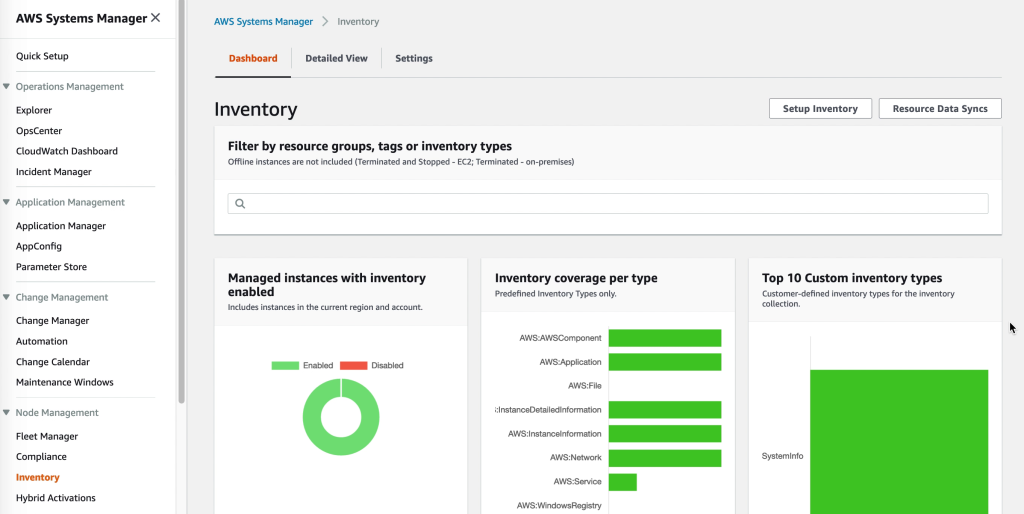

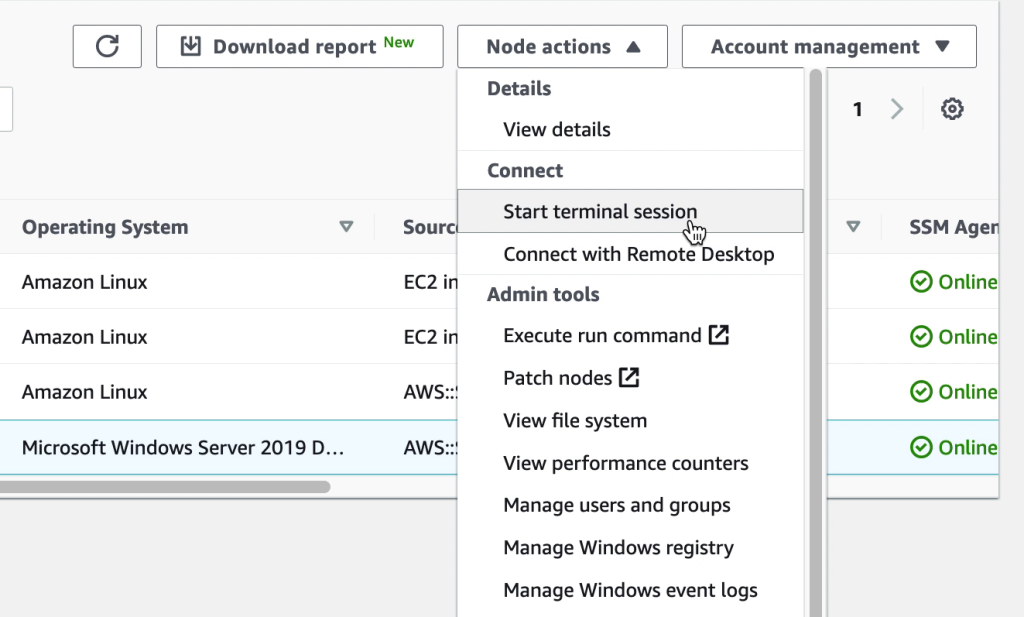

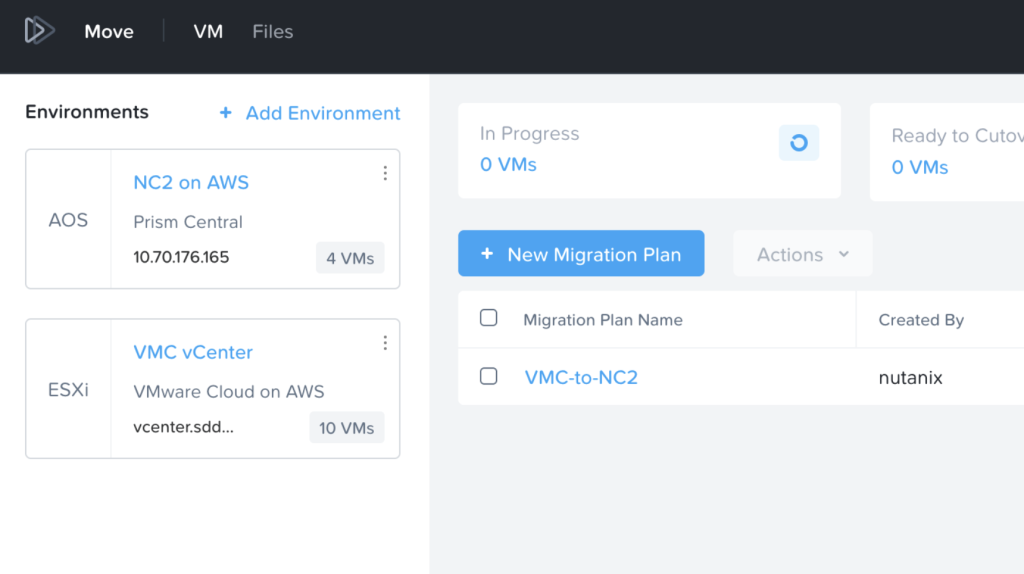

Migration tool: Move

With the recent 5.3 release, the Nutanix Move migration tool added support for migrations from VMware Cloud on AWS. In this example, Move is deployed into the NC2 cluster. Both the VMC and the NC2 environments have been registered with Move, and the inventories of both show up and are available for migration. More details can be found in the Move deployment section below.

Method 1: Deploy NC2 into the Connected VPC

If there is enough space to deploy NC2 into the already existing Connected VPC in the customer account, this is the easiest and most straightforward option. Connectivity and routing between the Connected VPC and the VMware Cloud on AWS environment are already configured as part of the VMC deployment. Do make sure that the CIDR ranges of any existing subnets are sufficient for deployment of NC2 and that there aren’t already resources deployed into those subnets which could interfere with the NC2 components. If the VPC CIDR range has space for new subnets, consider creating new private subnets to hold the NC2 deployment.

- Benefits
  - No need to create a new VPC and subnets
  - VPC is already connected to VMC and routing is configured
  - Data transfer is free of charge
  - High link / data transfer speed

- Drawbacks
  - VPC may already be fully populated with resources
  - VPC may not have the correct CIDR ranges for NC2
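To check whether the Connected VPC still has room for dedicated NC2 subnets, the CIDR arithmetic can be done with Python's standard `ipaddress` module. This is only a sketch: the VPC CIDR, the existing subnets and the /24 subnet size below are hypothetical values, not NC2 sizing guidance.

```python
import ipaddress


def free_subnets(vpc_cidr, existing_subnets, new_prefix):
    """Return subnets of size /new_prefix inside vpc_cidr that do not
    overlap any subnet already in use."""
    vpc = ipaddress.ip_network(vpc_cidr)
    used = [ipaddress.ip_network(s) for s in existing_subnets]
    return [
        candidate
        for candidate in vpc.subnets(new_prefix=new_prefix)
        if not any(candidate.overlaps(u) for u in used)
    ]


# Hypothetical Connected VPC with two subnets already populated.
candidates = free_subnets("10.70.0.0/16", ["10.70.0.0/24", "10.70.1.0/24"], 24)
print(candidates[:3])
```

If the list comes back empty, the VPC has no unused range of the requested size, which is a good indication that Method 2 should be considered instead.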

Method 1: Steps to deploy

Simply sign up for NC2 or start a trial and deploy through the NC2 deployment wizard. Select the latest version, 6.8, to get Flow overlay networking and centralized management through Prism Central included.

Note that NC2 can only be deployed into private AWS subnets and that internet connectivity needs to be present. If no direct internet connectivity is available, proxy support is also available through the deployment wizard.

VMware Cloud on AWS automatically updates the default route table in the Connected VPC with the routes to vCenter, ESXi and the user networks. However, if NC2 is deployed into a subnet which doesn’t use the default route table, those routes won’t be present. Ensure the route table used by the NC2 subnet is updated with the routes to the VMware Cloud on AWS environment, particularly the management subnet which holds vCenter and the ESXi hosts. Also, if necessary, update the security group on the active VMC ENI to allow access from the NC2 subnet.
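A quick way to spot missing routes is to compare the route table used by the NC2 subnet against the VMC networks that must be reachable. The sketch below works on a plain list of routes that you would export yourself. The dictionary layout, the ENI identifier and all CIDR values are hypothetical, and the check deliberately ignores more specific routes that might override a matching one.

```python
import ipaddress


def missing_routes(routes, required_cidrs, expected_target):
    """Return the required CIDRs that are not covered by any route
    pointing at expected_target (for example the active VMC ENI)."""
    via_target = [
        ipaddress.ip_network(r["destination"])
        for r in routes
        if r["target"] == expected_target
    ]
    return [
        cidr
        for cidr in required_cidrs
        if not any(ipaddress.ip_network(cidr).subnet_of(n) for n in via_target)
    ]


# Hypothetical custom route table for the NC2 subnet.
nc2_routes = [
    {"destination": "10.70.0.0/16", "target": "local"},
    {"destination": "0.0.0.0/0", "target": "nat-gateway"},
    {"destination": "10.3.0.0/24", "target": "eni-EXAMPLE"},
]
# Assumed VMC management network and one user network.
required = ["10.2.0.0/16", "10.3.0.0/24"]
missing = missing_routes(nc2_routes, required, "eni-EXAMPLE")
print(missing)
```

Note that the default route is not counted as coverage here, because it points to the NAT gateway rather than to the VMC ENI.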

After the NC2 cluster is deployed, follow the steps further down in this article to open the VMC firewall for vCenter and ESXi, deploy Move 5.3 on top of NC2 and register both the NC2 cluster and the VMC vCenter instance.

Method 2: Deploy NC2 into a separate VPC and migrate through a VTGW

If deploying NC2 into the Connected VPC is not possible or desirable, there is another option available. VMware Cloud on AWS supports creating a VMware Transit Connect (VTGW). The VTGW is a VMware-controlled Transit Gateway (TGW), which is basically a regional cloud router. The VTGW can in turn be attached either directly to another VPC or peered with a customer-controlled TGW. The TGW can then be attached to one or several VPCs of the customer’s choosing. Do keep cross-AZ and cross-region charges in mind when planning the architecture so that they can be minimized or avoided.

- Benefits
  - Once set up, migration is straightforward
  - The customer can use any VPC for the NC2 deployment, including a new one
  - High link / data transfer speed

- Drawbacks
  - Routing requires additional steps and knowledge
  - Data transfer is charged (roughly 2 cents/GB)
    - Although this example uses a TGW and a VTGW, data transfer charges do not end up being doubled. The peering attachment does not incur data transfer charges unless the traffic crosses AZs or regions.
  - (V)TGW attachments are charged (roughly 7 cents/h in ap-northeast-1)
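To get a feel for what these charges add up to, the rough rates above can be put into a small estimate. This is a back-of-the-envelope sketch only: the data volume, the number of charged attachments and the duration are hypothetical, and actual AWS pricing varies by region and over time.

```python
def estimate_tgw_cost(data_gb, attachments, hours,
                      per_gb=0.02, per_attachment_hour=0.07):
    """Rough cost in USD using the approximate rates quoted above:
    about 2 cents per GB transferred and about 7 cents per attachment-hour."""
    data_cost = data_gb * per_gb
    attachment_cost = attachments * hours * per_attachment_hour
    return round(data_cost + attachment_cost, 2)


# Hypothetical migration: 5,000 GB of VM data, 2 charged attachments,
# kept in place for 30 days.
total = estimate_tgw_cost(data_gb=5000, attachments=2, hours=30 * 24)
print(f"Estimated cost: ${total}")
```

The data transfer portion is counted once, in line with the note above that charges are not doubled by the peering between the VTGW and the TGW.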

Method 2: Steps to deploy: Create the VTGW

Unless already present, go to the AWS console and deploy a Transit Gateway (TGW) in the same region as VMC. Then, in the VMware Cloud on AWS management console, go to “SDDC groups” and deploy a new VTGW. Once deployed, navigate to the “External TGW” tab, click “Add TGW” and enter the account number and the ID of the customer TGW to connect to as well as the regions to use.

In the “Routes” box, enter the CIDR range of the VPC which NC2 is to be deployed into. In this example, that is “10.90.0.0/16”.

This will advertise the NC2 VPC CIDR to VMware Cloud on AWS and also create a peering attachment invitation from the VMware AWS account to the customer AWS account.

Method 2: Steps to deploy: Configure the TGW

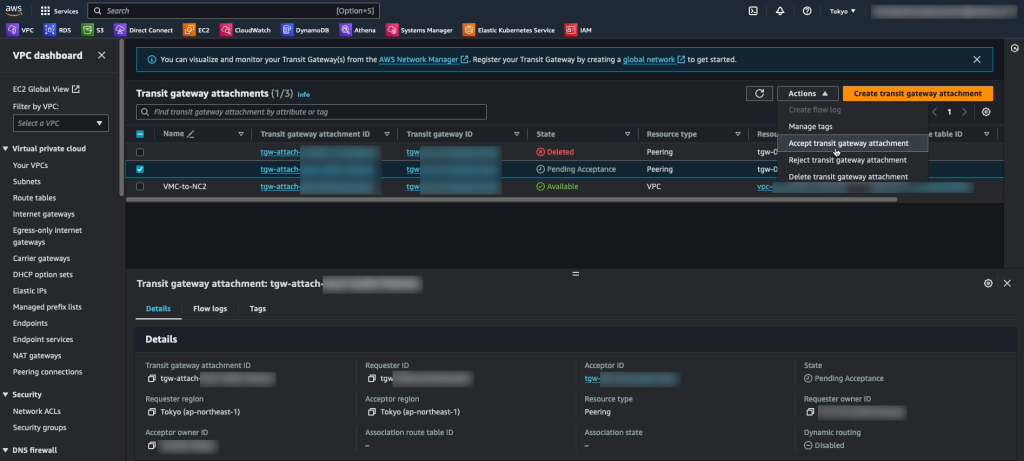

The invitation to add the peering attachment can be accepted through “Transit Gateway Attachments” in the AWS console in the customer account.

While here, take the opportunity to add an attachment to the VPC into which NC2 will be deployed.

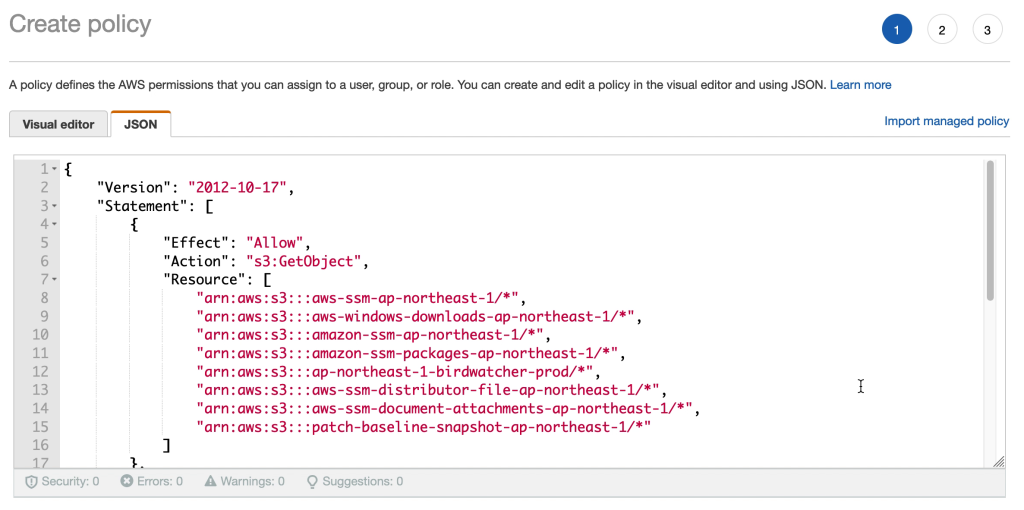

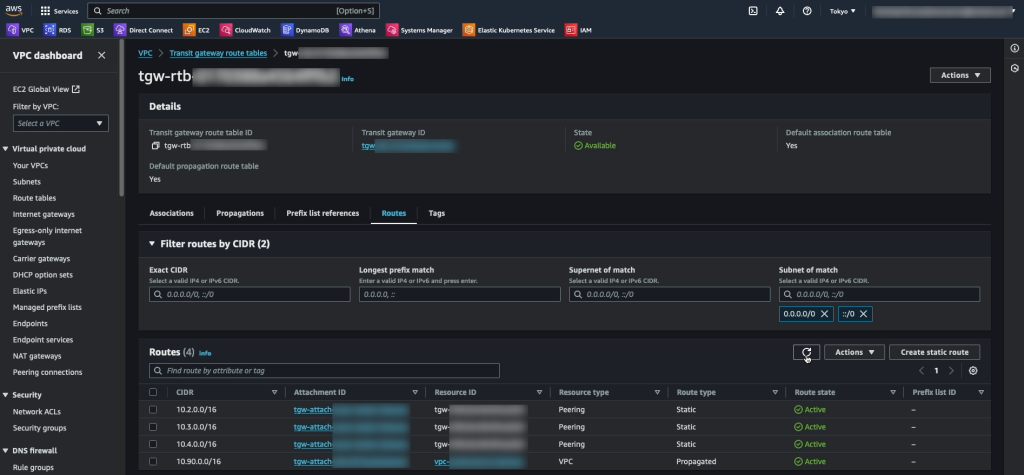

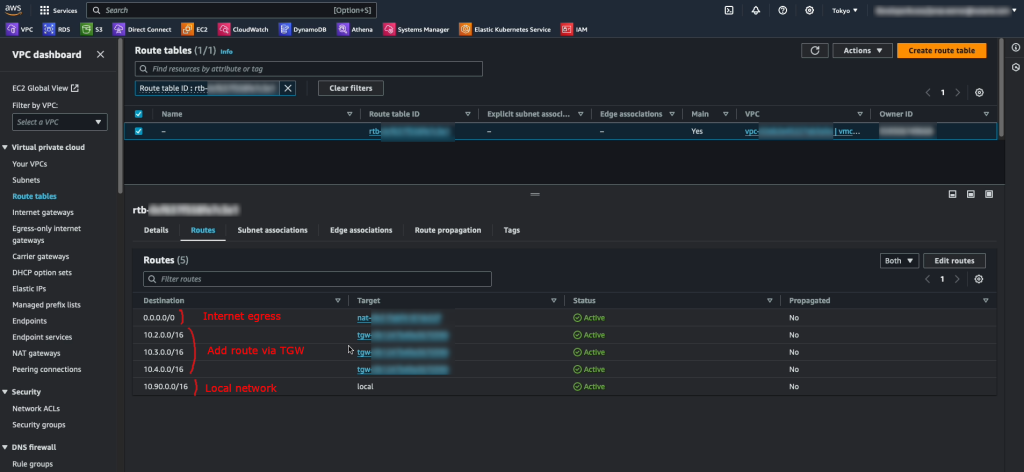

In the customer AWS account, navigate to the Transit Gateway route table section, select the route table for the TGW peered with VMC and add the routes for the VMC networks. In this case, these are “10.2.0.0/16”, “10.3.0.0/16” and “10.4.0.0/16”. Note that these are added as static routes.

In addition we have the “10.90.0.0/16” network added via the VPC attachment. There is no need to add static routes for this network as it is propagated automatically.
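The same static routes could also be added programmatically instead of through the console. The sketch below assumes an EC2 client from boto3, whose `create_transit_gateway_route` call takes the destination CIDR, the TGW route table ID and the attachment ID. To keep the example runnable without AWS credentials, it uses a small recording stand-in for the client, and the route table and attachment IDs are placeholders.

```python
class RecordingClient:
    """Stand-in for an EC2 client that only records the requested routes.
    Replace it with boto3.client("ec2", region_name=...) for real use."""

    def __init__(self):
        self.calls = []

    def create_transit_gateway_route(self, **kwargs):
        self.calls.append(kwargs)
        return {}


def add_static_tgw_routes(ec2_client, route_table_id, attachment_id, cidrs):
    """Create one static route per CIDR in a Transit Gateway route table,
    all pointing at the same attachment (here: the peering to the VTGW)."""
    for cidr in cidrs:
        ec2_client.create_transit_gateway_route(
            DestinationCidrBlock=cidr,
            TransitGatewayRouteTableId=route_table_id,
            TransitGatewayAttachmentId=attachment_id,
        )
    return list(cidrs)


# VMC networks from this example; the IDs are placeholders.
VMC_CIDRS = ["10.2.0.0/16", "10.3.0.0/16", "10.4.0.0/16"]
dry_run = RecordingClient()
added = add_static_tgw_routes(
    dry_run, "tgw-rtb-EXAMPLE", "tgw-attach-EXAMPLE", VMC_CIDRS
)
for call in dry_run.calls:
    print(call)
```

Running it against the recording client first makes it easy to review exactly which routes would be created before pointing the function at a real account.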

Method 2: Steps to deploy: Configure the routes to the VMC cluster in the NC2 VPC

The final step for the routing is to add the routes for VMware Cloud on AWS into the route table(s) of the VPC which NC2 is to be deployed into. In our example, “10.2.0.0/16”, “10.3.0.0/16” and “10.4.0.0/16” are added as routes via the TGW attachment. “10.90.0.0/16” is our local network and there is a quad-zero route to the internet via a NAT GW.
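To make it clearer how the VPC route table above resolves traffic, here is a small sketch that mimics the longest-prefix-match selection for a destination address. The CIDR ranges are the ones from this example, while the target names are descriptive placeholders rather than real AWS resource IDs.

```python
import ipaddress

# Route table of the NC2 VPC as described above (targets are placeholders).
ROUTES = {
    "10.90.0.0/16": "local",
    "10.2.0.0/16": "tgw-attachment",
    "10.3.0.0/16": "tgw-attachment",
    "10.4.0.0/16": "tgw-attachment",
    "0.0.0.0/0": "nat-gateway",
}


def next_hop(destination_ip, routes=ROUTES):
    """Return the target of the most specific route matching the address,
    or None if no route matches."""
    ip = ipaddress.ip_address(destination_ip)
    matches = [
        ipaddress.ip_network(cidr)
        for cidr in routes
        if ip in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    best = max(matches, key=lambda network: network.prefixlen)
    return routes[str(best)]


for address in ("10.3.0.15", "10.90.1.5", "8.8.8.8"):
    print(address, "->", next_hop(address))
```

Addresses in the VMC networks resolve to the TGW attachment, addresses inside the NC2 VPC stay local, and everything else follows the quad-zero route to the NAT gateway.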

This concludes the setup steps specific to Method 2. Please continue with the firewall settings, NC2 cluster deployment and Move installation below.

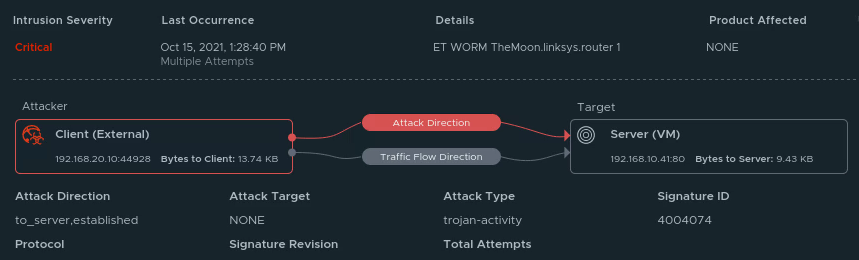

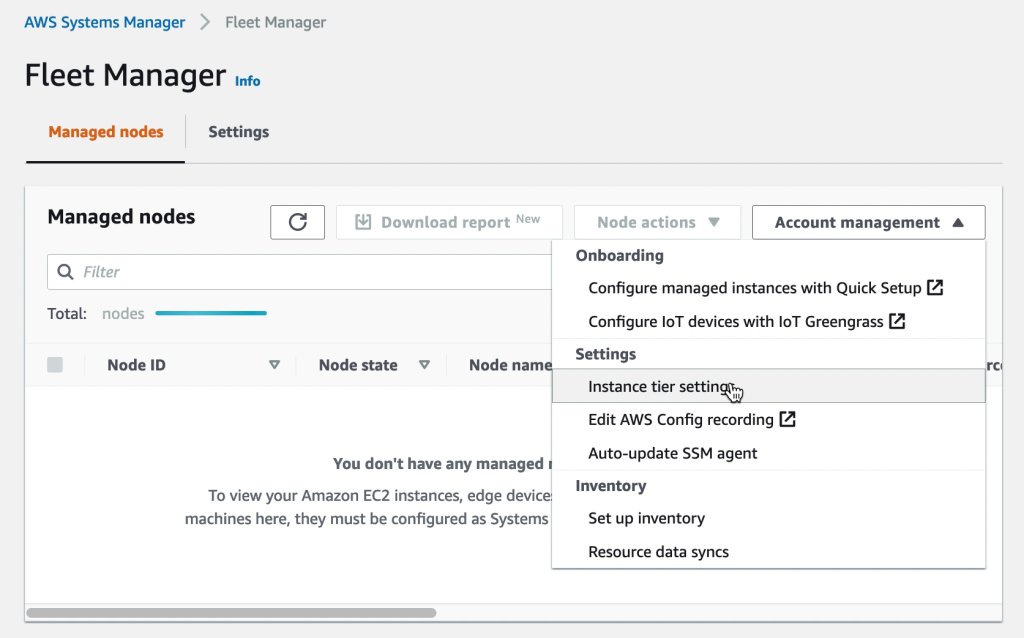

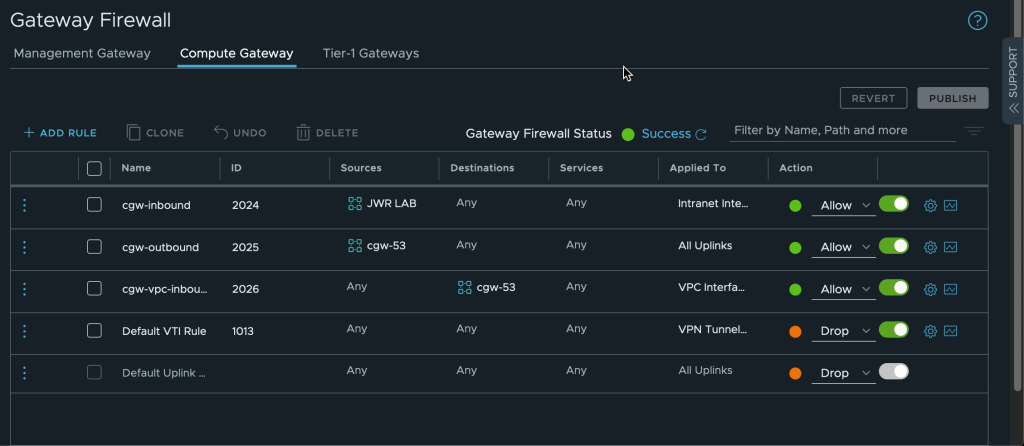

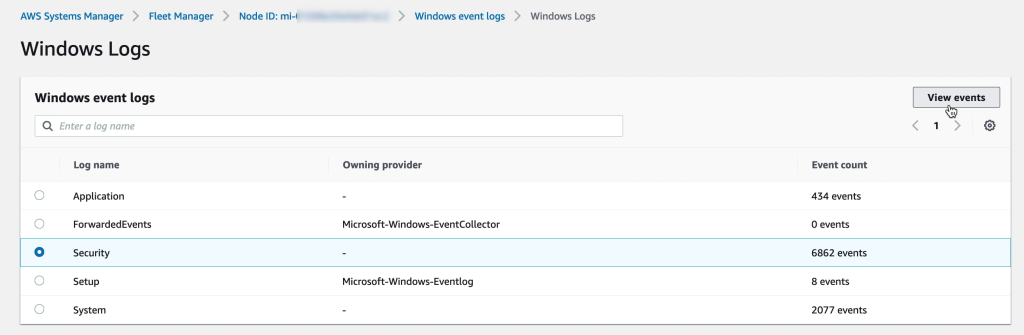

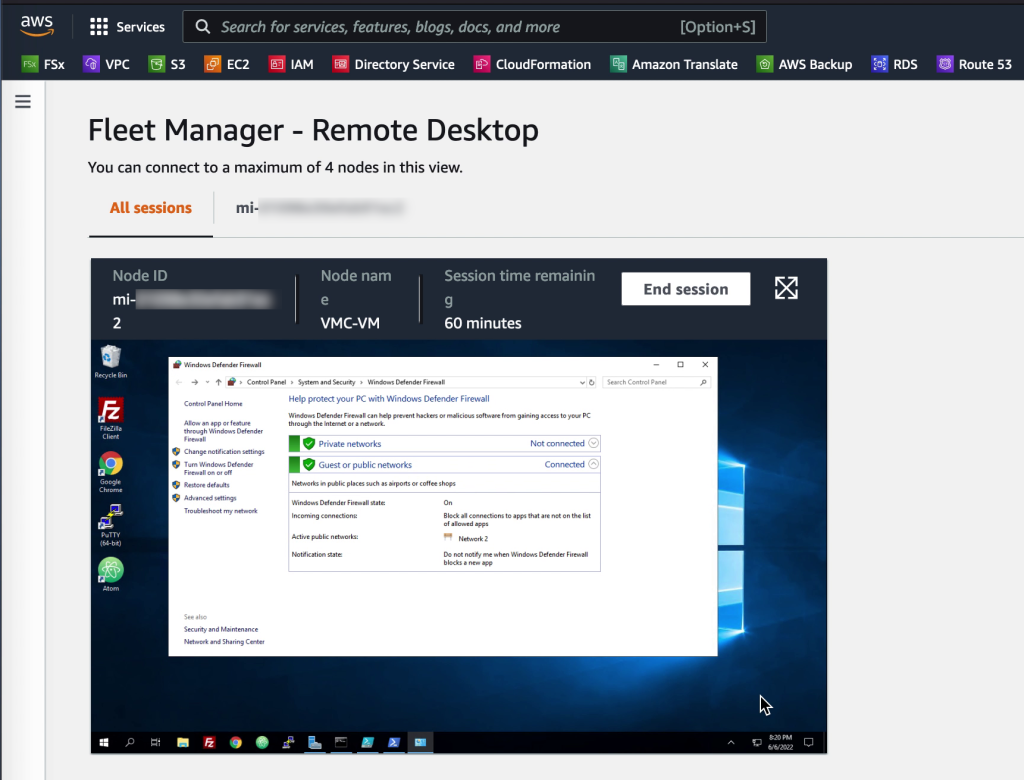

Firewall settings: Allow Move to access the VMC vCenter and ESXi hosts

Move requires access to the VMC vCenter instance and the ESXi hosts in order to migrate virtual machines. Through the VMware Cloud on AWS console, add a Management Gateway firewall rule to allow the NC2 VPC to access these resources.

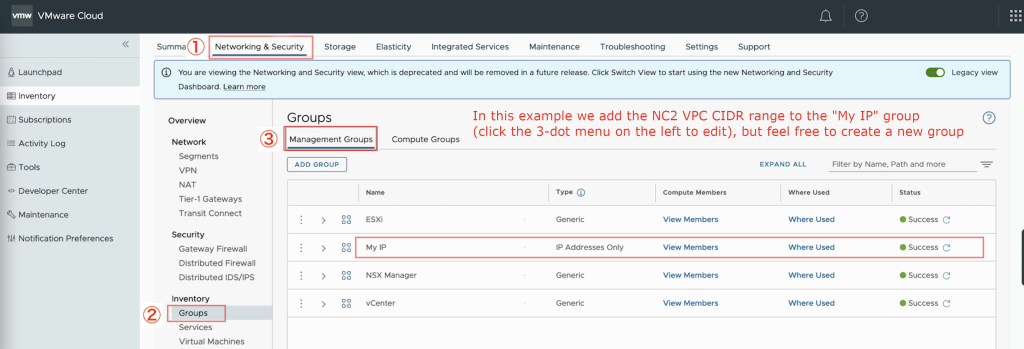

- Add the NC2 VPC CIDR range to an MGW inventory group
  - Navigate to “Networking & Security”
  - Click “Groups” under “Inventory”
  - Click “Management Groups” to edit the groups pertaining to the MGW
  - Add or modify a group and add the CIDR range of the VPC which NC2 is deployed into
    - To modify an existing group, click the 3-dot menu on the left of the group and select “Edit”

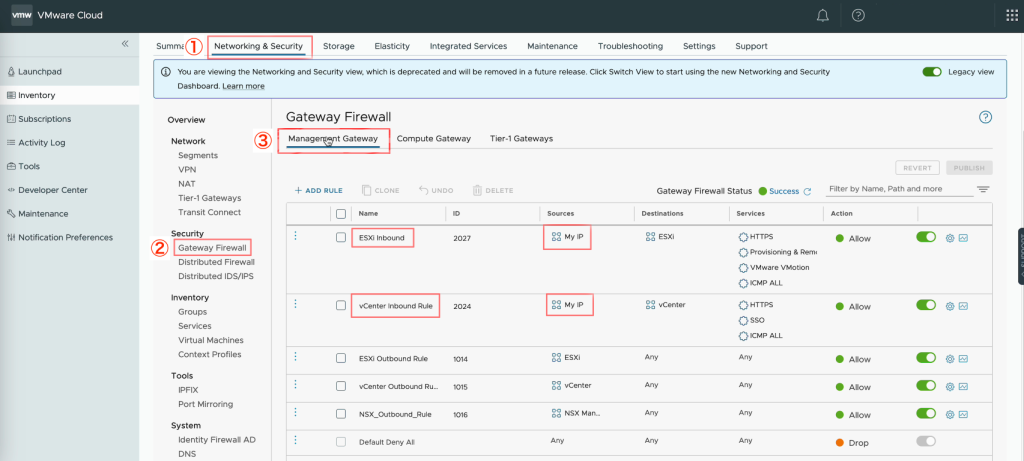

- Add the MGW inventory group to the MGW firewall rules
  - Navigate to “Networking & Security”
  - Click “Gateway Firewall” under “Security”
  - Click “Management Gateway” to edit rules for the MGW
  - Update the rules for ESXi and vCenter by adding the MGW inventory group containing the NC2 VPC CIDR range with the action “Allow”
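Once the rules are in place, a simple TCP connection test from a machine in the NC2 VPC can confirm that the firewall and routing let traffic through. The sketch below uses only Python's standard `socket` module. Which hosts and ports to test is an assumption on our part: port 443 (HTTPS) is a reasonable starting point for vCenter, but check the Move documentation for the full list of required ports.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_targets(targets, timeout=3.0):
    """Test a list of (host, port) pairs and return a result per target."""
    return {
        f"{host}:{port}": can_connect(host, port, timeout)
        for host, port in targets
    }
```

For example, `check_targets([("vcenter.example.internal", 443)])` would test a hypothetical vCenter address. Substitute the private addresses of your own vCenter instance and ESXi hosts.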

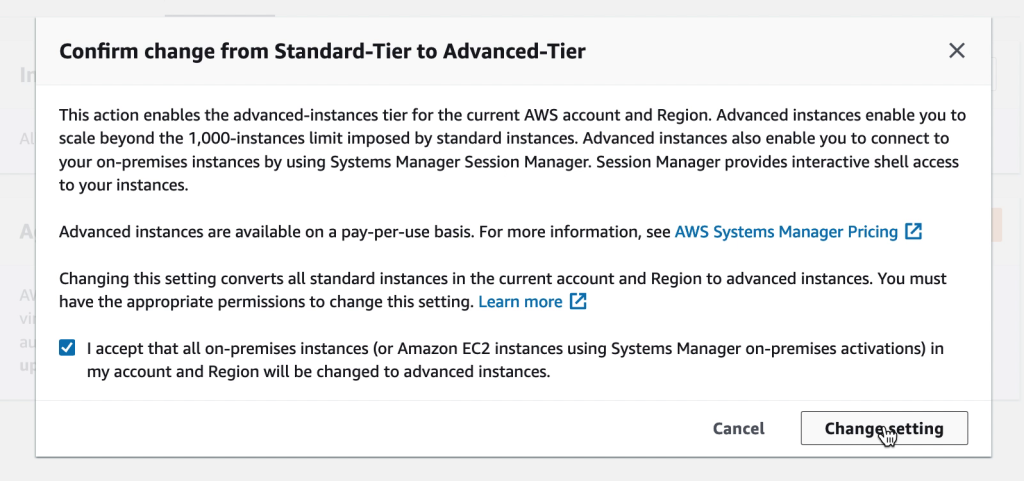

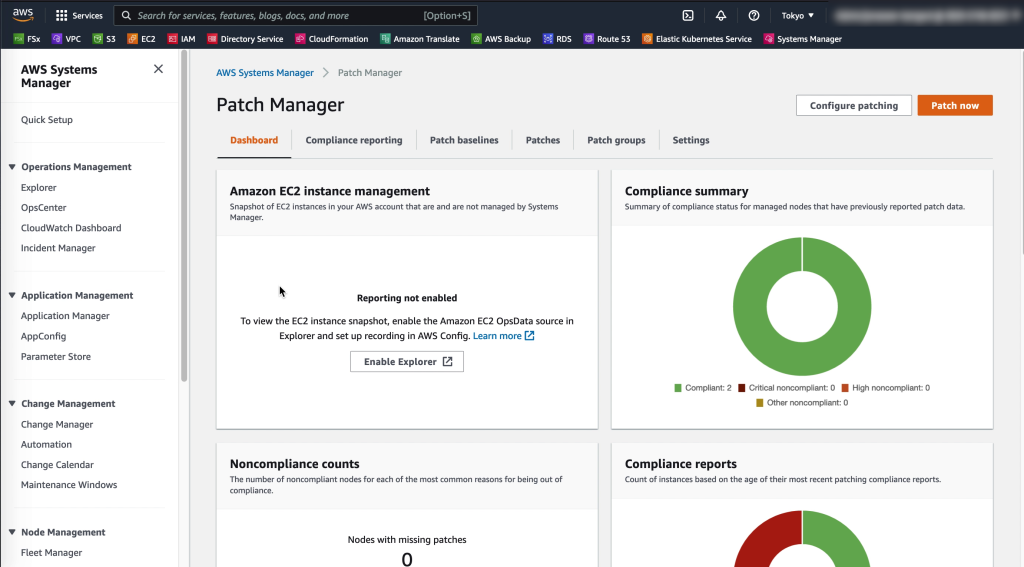

Deploy the NC2 cluster

Sign up for NC2 or start a trial and deploy through the NC2 deployment wizard. Select the latest version, 6.8, to get Flow overlay networking and centralized management through Prism Central included. When asked, select the VPC and the private subnets desired. In this case, these are subnets in the “10.90.0.0/16” VPC.

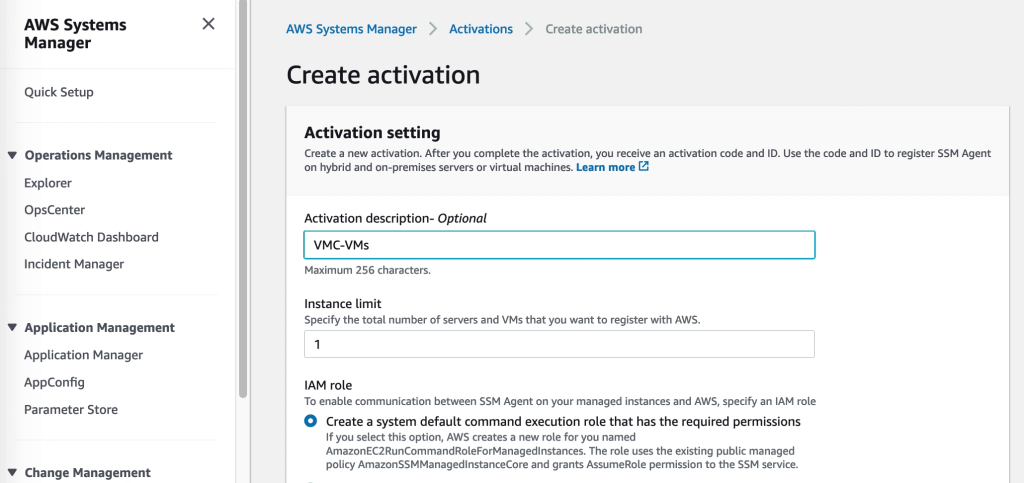

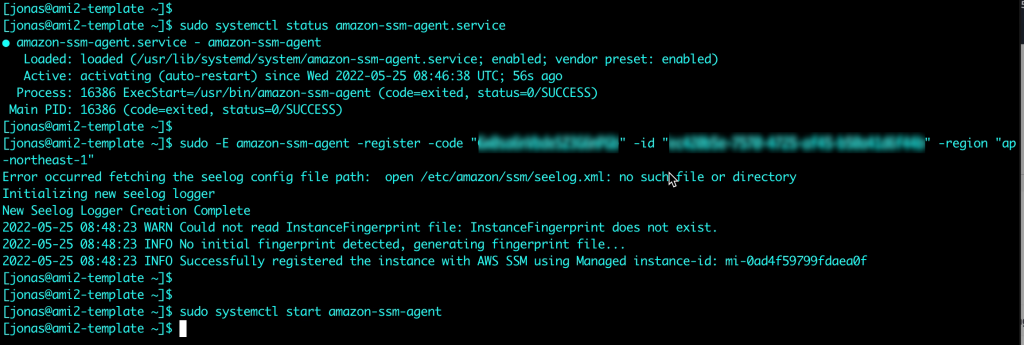

After the NC2 cluster is deployed, deploy Move 5.3 on top of NC2 and register both the NC2 cluster and vCenter from the VMC environment.

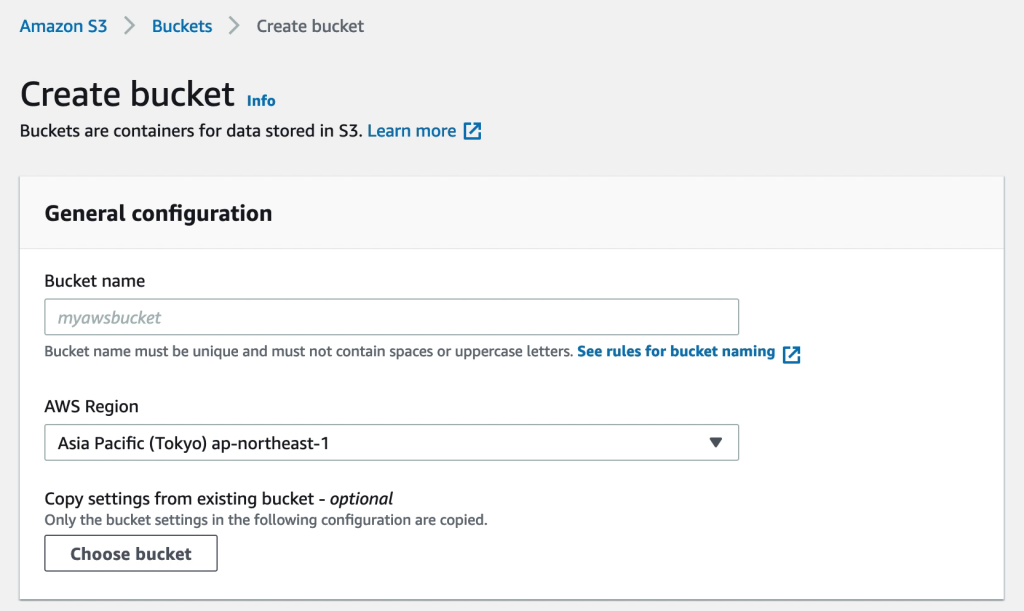

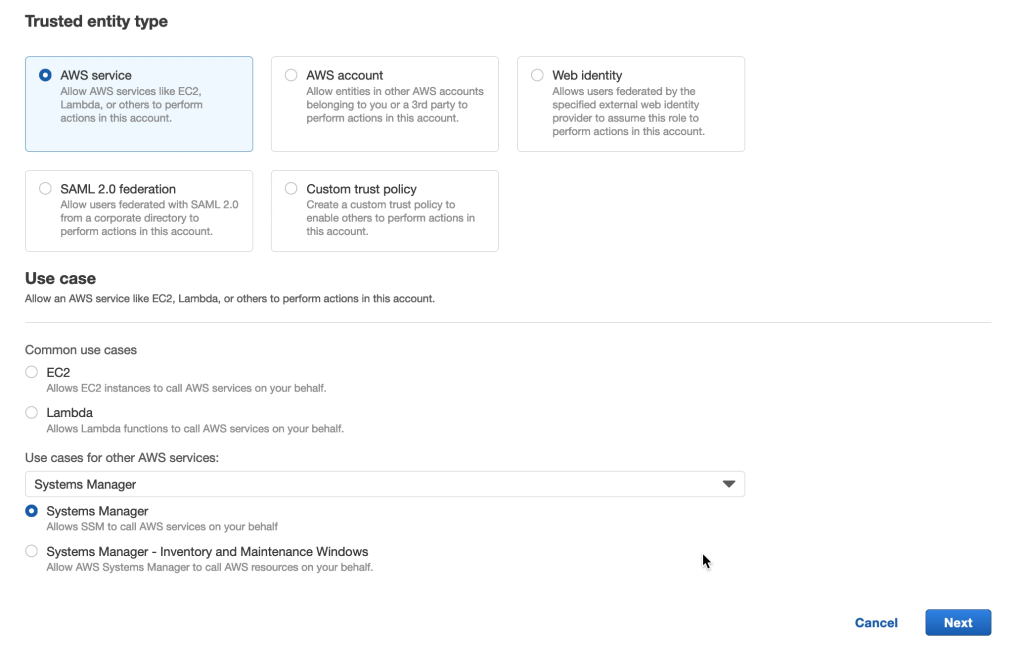

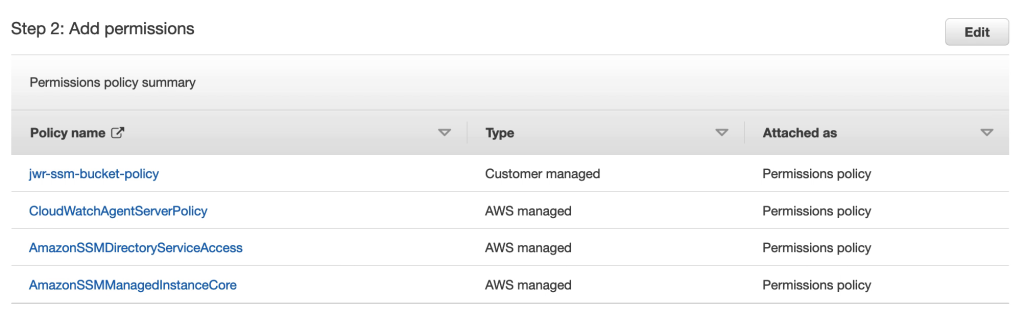

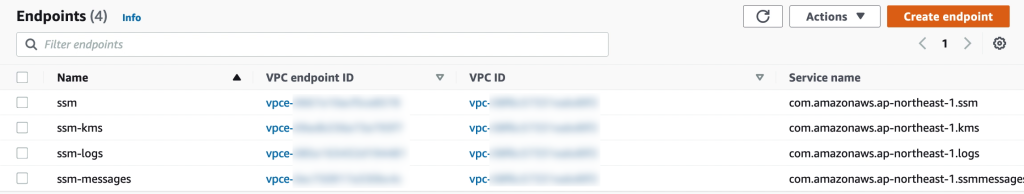

Install Move and register NC2 and VMC

Download Move 5.3 from the download page: https://portal.nutanix.com/page/downloads?product=move

Follow the Move manual to deploy Move 5.3 on the NC2 cluster in AWS: https://portal.nutanix.com/page/documents/list?type=software&filterKey=software&filterVal=Move

VDDK upload: After Move is deployed, add the NC2 and VMC environments. After adding the VMC environment it will prompt for a VDDK file. This file can be downloaded from the VMware support site. The version used in this example is: “VMware-vix-disklib-7.0.3-19513565.x86_64”. Please use the Linux version.

Migrate the VMs to NC2

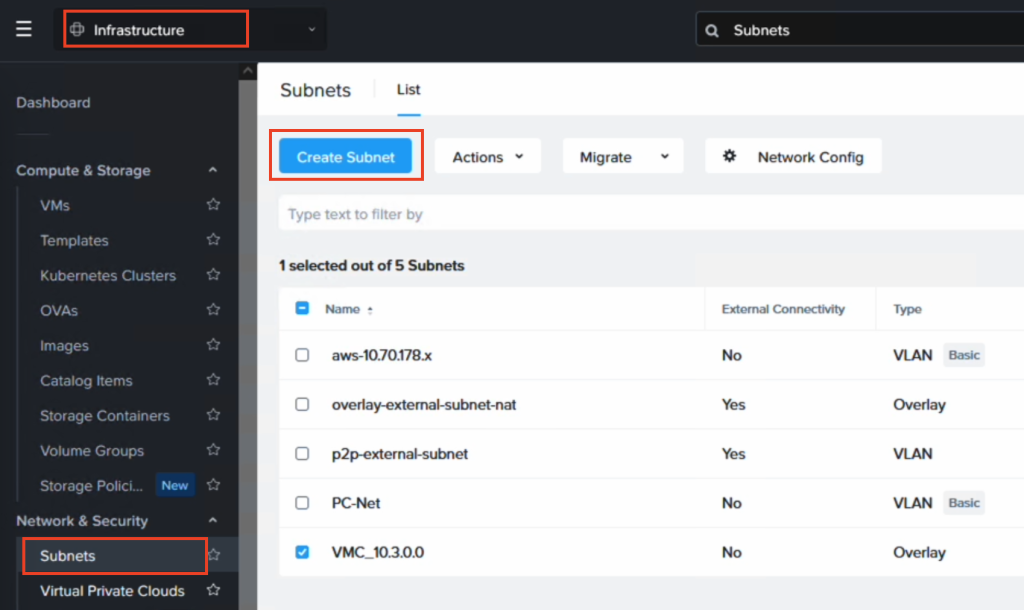

If IP retention is desired, use Flow in Prism Central to create an overlay VPC and subnet which matches the CIDR range of the NSX-T subnet in VMC from which the VMs will be migrated. In this example “10.3.0.0/24” is used.
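Before starting the migration, it can be worth verifying that every VM to be migrated actually has an address inside the overlay subnet created in Flow. A minimal sketch of such a check follows. The VM names and addresses are hypothetical and would come from your own vCenter inventory export.

```python
import ipaddress


def outside_subnet(vm_ips, overlay_cidr):
    """Return the VMs whose IP address does not fall inside overlay_cidr
    and which therefore could not keep their address on that subnet."""
    subnet = ipaddress.ip_network(overlay_cidr)
    return {
        name: ip
        for name, ip in vm_ips.items()
        if ipaddress.ip_address(ip) not in subnet
    }


# Hypothetical source VMs checked against the overlay subnet in this example.
vm_ips = {"web01": "10.3.0.10", "app01": "10.3.0.11", "db01": "10.3.1.20"}
mismatched = outside_subnet(vm_ips, "10.3.0.0/24")
print(mismatched)
```

Any VM reported here would need either a different target subnet or a wider overlay CIDR for IP retention to work.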

Now the only thing remaining is to create a Migration Plan in Move where VMC is the source and NC2 is the target. Be sure to select the correct target network so that IP retention works as expected.

Wrap up

This has been an example of the steps required for migrating Virtual Machines from VMware Cloud on AWS (VMC) to Nutanix Cloud Clusters (NC2) without changing the IP or MAC addresses of the migrated VMs. For more information or for a demo, please reach out to your Nutanix representative or partner.