After seeing this awesome Arduino RADAR project by Dejan Nedelkovski, I simply had to build one myself. It’s actually a SONAR, though, since it uses sound rather than radio waves for detection. It was a fairly quick and easy build, but it needs both the Arduino sketch and a separate Processing sketch to draw the GUI. Here it is in action:

Logitech / Logicool G13 cleaning / washing

After knocking a full pint of beer over my Belkin ergonomic keyboard and my much-loved Logitech/Logicool G13 programmable gaming keyboard, I had to find a way to save them from the garbage bin.

Unfortunately, the Belkin was beyond repair: pressing any key produced gibberish, and rinsing the beer out with water didn’t improve things. The G13, however, was easier to take apart, and I was happy to see that it separates into two parts. This makes the keys very easy to clean without affecting the underlying circuit boards.

Note that although the dried beer had fused most of the G13’s keys together, it still seemed to work better than the Belkin. Its circuit boards appeared unaffected.

If you want to try this, start by gathering a few tools. I used a razor knife, a small pair of scissors and a Phillips screwdriver. You’ll also need a sponge, dishwashing liquid and a hair dryer.

Carefully peel off the protective rubber feet so they don’t break. The scissors were useful here, as the razor knife risks cutting the feet while you remove them. They don’t have to come all the way off, but I removed them anyway to get full access to the screws underneath.

There are six screws to remove in total, each hidden behind a rubber foot or, in the case of the middle one, a thin plastic seal. Once the rubber covers are off, unscrew the six screws that hold the keyboard together:

Once the screws are out, use the razor knife to gently split the keyboard apart at the seams. I started at the joystick side and worked my way down and around from there. Finally, the upper part came loose as well, although the dried beer held it together fairly firmly.

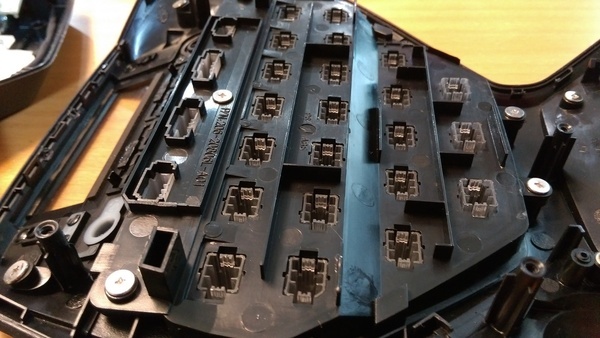

After the key part has been removed from the base it’ll look like this:

Now the upper part with the keys can be washed with dishwashing liquid and a sponge to remove the beer, sugar or whatever else was spilled into it.

Rinse and dry it thoroughly with the hair dryer to make sure no water is left between the keys. After that, it’s just a matter of snapping the key section back onto the base, putting the screws back in and reattaching the rubber covers and feet on the bottom of the keyboard. After the procedure the keyboard is as good as new and works just fine when connected to the PC again.

Pepper meets Microsoft Azure

Redfish Whitepaper

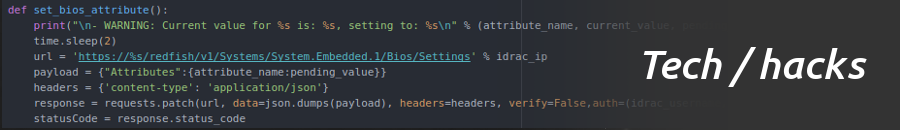

Finally, there is a modern replacement for IPMI: a new systems management standard called Redfish, which is now available on Dell servers with the 2.30.30.30 firmware update for iDRAC and LC (Lifecycle Controller). Redfish exposes a RESTful API and uses JSON as its data format. This makes it possible for anyone to control servers programmatically from Python or Java without having to care which brand the server is; yes, it’s vendor neutral. Other vendors will release (or already have released) their own implementations, but since Redfish is a standard they should all work the same way.
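
As a rough illustration of what REST plus JSON means in practice, here is a minimal Python sketch using only the standard library. It assumes the controller accepts HTTP Basic authentication and serves the standard `/redfish/v1/` service root; the helper names (`redfish_url`, `get_json`, `member_paths`) are hypothetical, and the option to skip TLS verification exists only because management controllers often ship with self-signed certificates.

```python
from __future__ import annotations

import base64
import json
import ssl
import urllib.request

# Redfish services expose their service root at this path.
SERVICE_ROOT = "/redfish/v1/"


def redfish_url(host: str, path: str = SERVICE_ROOT) -> str:
    """Build an HTTPS URL for a Redfish resource path on the given host."""
    return f"https://{host}{path}"


def member_paths(collection: dict) -> list[str]:
    """Return the @odata.id link of every member in a Redfish collection."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


def get_json(host: str, path: str, user: str, password: str,
             verify_tls: bool = True) -> dict:
    """GET a Redfish resource with HTTP Basic auth and decode the JSON body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        redfish_url(host, path),
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # Assumption: only acceptable on a trusted management network.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=30) as response:
        return json.load(response)
```

For example, `member_paths(get_json("192.0.2.10", "/redfish/v1/Systems", user, password))` should return the resource paths of the systems the controller manages (the address is a placeholder). Because the URL layout and JSON structure come from the standard rather than the vendor, the same code should in principle work against other vendors’ implementations too.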

I co-authored a whitepaper on the Redfish API, and anyone interested can download it here.

OpenStack Neutron – Expand and / or update floating IP range

Sometimes you run out of public IP addresses and need to expand the floating IP range. If the addresses directly following the current range are free, so the range can be extended without a gap, simply use:

`neutron subnet-update <subnet-id> --allocation-pool start=<original-start-ip>,end=<new-end-ip>`

This replaces the existing allocation pool with a single pool that runs from the original start IP to the new end IP.
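
The rule above (same start, later end, everything inside the subnet) can be checked before touching the cloud. The Python sketch below is a hypothetical helper, not part of the neutron client: it validates the addresses with the standard `ipaddress` module and returns the argument list for the command. It cannot tell whether the addresses between the old and new end are actually unused; that still has to be confirmed in OpenStack.

```python
import ipaddress


def extend_pool_args(subnet_id, cidr, start, old_end, new_end, gateway=None):
    """Return the `neutron subnet-update` argv that grows a pool to new_end.

    Hypothetical helper: it only validates the addresses locally.
    """
    net = ipaddress.ip_network(cidr)
    start_ip = ipaddress.ip_address(start)
    old_end_ip = ipaddress.ip_address(old_end)
    new_end_ip = ipaddress.ip_address(new_end)
    if start_ip not in net or new_end_ip not in net:
        raise ValueError(f"{start}-{new_end} is not inside {cidr}")
    if new_end_ip <= old_end_ip:
        raise ValueError("the new end IP must come after the current end IP")
    # The gateway address should never be handed out as a floating IP.
    if gateway is not None and start_ip <= ipaddress.ip_address(gateway) <= new_end_ip:
        raise ValueError(f"gateway {gateway} would fall inside the pool")
    # One --allocation-pool replaces the old pool, so the original start is reused.
    return ["neutron", "subnet-update", subnet_id,
            "--allocation-pool", f"start={start_ip},end={new_end_ip}"]
```

The returned list could then be passed to `subprocess.run(args, check=True)` on a host where the neutron client is installed and your OpenStack credentials are loaded; the subnet name and addresses you use with it are of course your own.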

To add an extra, separate IP range while keeping the original one, pass one `--allocation-pool` option per range, including the original range, since the command replaces all existing pools:

`neutron subnet-update <subnet-id> --allocation-pool start=<original-start-ip>,end=<original-end-ip> --allocation-pool start=<additional-start-ip>,end=<additional-end-ip>`
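
With several pools it is easy to forget the original range or let two ranges overlap. A similar hypothetical Python helper can sort the ranges, reject overlaps and addresses outside the subnet, and emit one `--allocation-pool` option per range. As before, this is a local sanity check under those assumptions, not something the neutron client provides.

```python
import ipaddress


def multi_pool_args(subnet_id, cidr, pools):
    """Return the `neutron subnet-update` argv that sets several pools at once.

    pools is a list of (start, end) strings and must include the original
    range, because the command replaces every existing pool.
    """
    net = ipaddress.ip_network(cidr)
    parsed = []
    for start, end in pools:
        s, e = ipaddress.ip_address(start), ipaddress.ip_address(end)
        if s not in net or e not in net:
            raise ValueError(f"{start}-{end} is not inside {cidr}")
        if s > e:
            raise ValueError(f"{start}-{end}: start comes after end")
        parsed.append((s, e))
    # Sorted by start address, any overlap shows up between neighbouring pools.
    parsed.sort()
    for (_, prev_end), (next_start, _) in zip(parsed, parsed[1:]):
        if next_start <= prev_end:
            raise ValueError(f"pools overlap at {next_start}")
    args = ["neutron", "subnet-update", subnet_id]
    for s, e in parsed:
        args += ["--allocation-pool", f"start={s},end={e}"]
    return args
```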

Example of extending a contiguous IP range:

View the subnet details with `neutron subnet-show <subnet-id>`:

Update the subnet:

Example of adding an additional range to an already existing range: