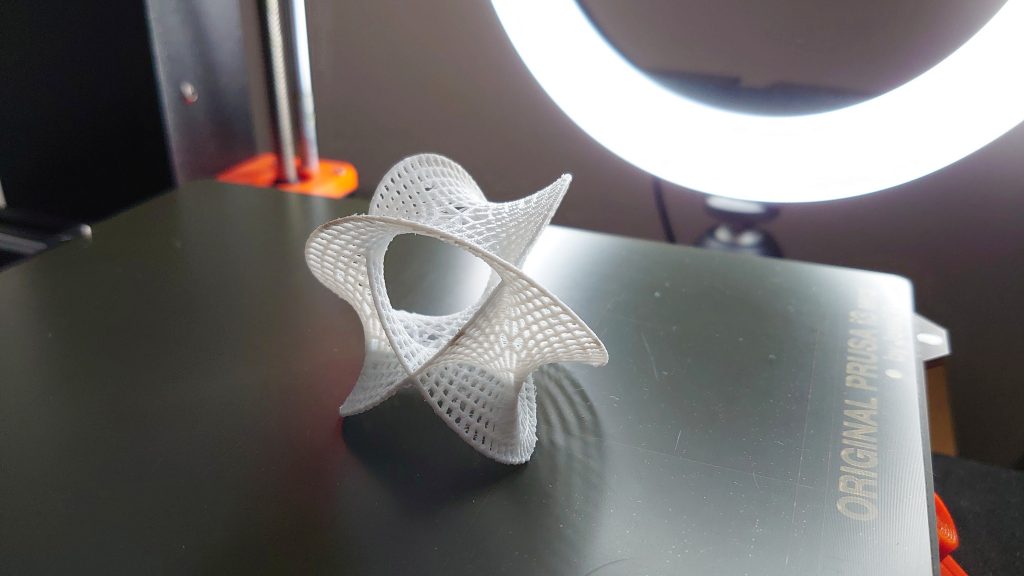

After seeing the 3D print designs from Akyelle on Thingiverse, I wanted to see if I could make a version that could be controlled from a smartphone over Bluetooth.

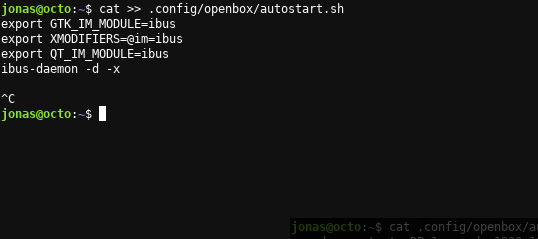

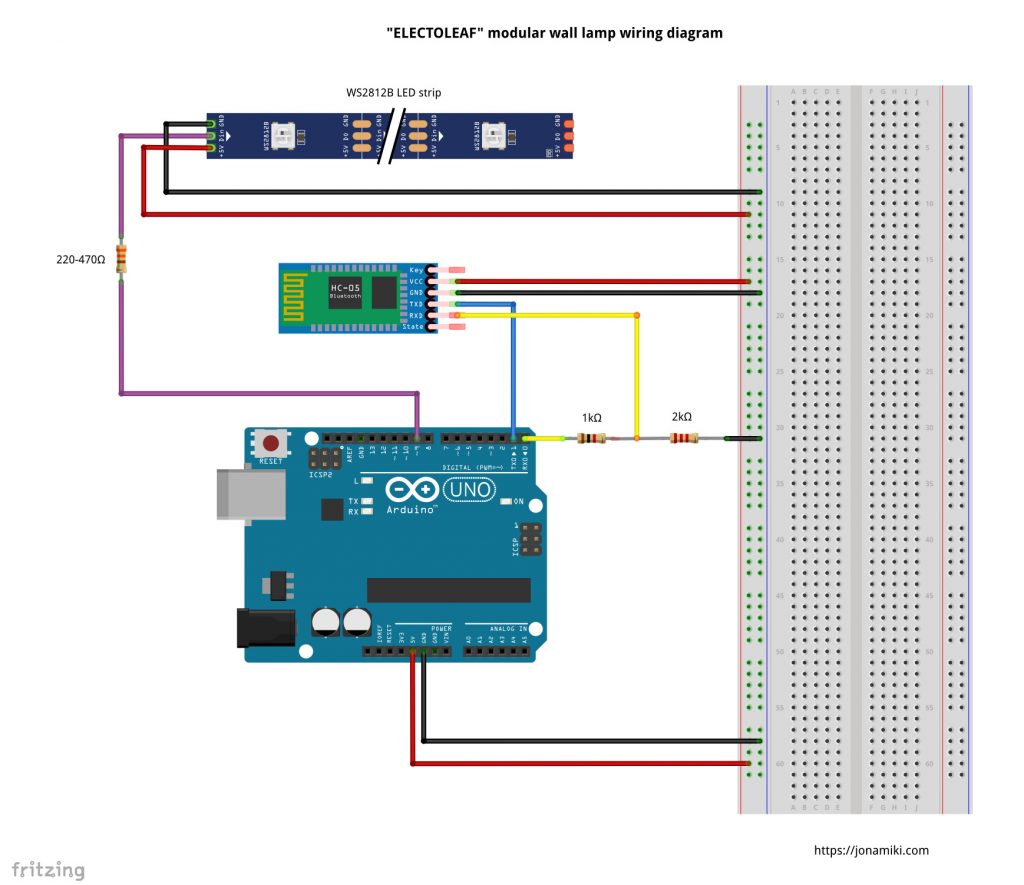

I already had code for controlling addressable WS2812B LEDs (NeoPixels) from previous lamp projects, but in this case that wasn’t enough. I also needed code for controlling the LEDs over Bluetooth (using an HC-05 Bluetooth module), as well as some info on how to make an app that could talk to it. In the end I used HowtoMechatronics’ excellent guide on Bluetooth and Arduino apps for that part and combined it with the lamp code to create the project below.
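To give an idea of what the Arduino side has to do with the data arriving from the app, here is a minimal sketch in plain C++. It is not the code from the repository: the command format (`C<r>,<g>,<b>` for color, `B<level>` for brightness, one command per line) and the names `LampState`, `applyCommand` and `feedByte` are made up for illustration. On the real hardware the bytes would come from the serial connection to the HC-05, and the resulting values would be handed to whichever LED library the sketch uses.

```cpp
#include <cstdint>
#include <cstdlib>
#include <string>

// Hypothetical lamp state; the real sketch may track different fields.
struct LampState {
    uint8_t r = 0, g = 0, b = 0;
    uint8_t brightness = 255;
};

// Clamp a parsed number into the 0..255 range used by the LEDs.
static uint8_t clampByte(long v) {
    if (v < 0) return 0;
    if (v > 255) return 255;
    return static_cast<uint8_t>(v);
}

// Apply one command line (without the trailing newline).
// Assumed, illustrative format:
//   "C<r>,<g>,<b>"  sets the color
//   "B<level>"      sets the brightness
// Returns true if the line was recognized and applied.
bool applyCommand(const std::string& line, LampState& state) {
    if (line.size() < 2) return false;
    const char* p = line.c_str() + 1;  // skip the command letter
    char* end = nullptr;

    if (line[0] == 'B') {
        long v = std::strtol(p, &end, 10);
        if (end == p || *end != '\0') return false;
        state.brightness = clampByte(v);
        return true;
    }

    if (line[0] == 'C') {
        long vals[3];
        for (int i = 0; i < 3; ++i) {
            vals[i] = std::strtol(p, &end, 10);
            if (end == p) return false;         // no digits found
            if (i < 2) {
                if (*end != ',') return false;  // expect a separator
                p = end + 1;
            }
        }
        if (*end != '\0') return false;         // trailing junk
        state.r = clampByte(vals[0]);
        state.g = clampByte(vals[1]);
        state.b = clampByte(vals[2]);
        return true;
    }

    return false;  // unknown command letter
}

// Feed bytes one at a time, as they would arrive over the Bluetooth link.
// Returns true when a complete line was received and applied.
bool feedByte(char c, std::string& buffer, LampState& state) {
    if (c == '\r') return false;                // ignore carriage returns
    if (c != '\n') {
        if (buffer.size() < 32) buffer.push_back(c);  // cap the line length
        return false;
    }
    bool ok = applyCommand(buffer, state);
    buffer.clear();
    return ok;
}
```

Buffering until a newline keeps the parser independent of how the Bluetooth module happens to chunk the data, and rejecting malformed lines means a garbled transmission leaves the lamp in its previous state.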

The modular lamp parts are printed on a Prusa i3 MK3S in black and white PLA. Each LED is soldered to its neighbor, which is laborious work as each LED has 6 solder pads (5V, GND and signal, on both the input and the output end). Currently 6 panels with 9 LEDs each means 54 LEDs and 324 soldering points just for this small size version 🙂
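If you are planning a bigger lamp, the soldering workload is easy to estimate up front. The helper below is a small illustrative sketch (the name `solderPoints` is my own); it counts every pad on every LED, so treat the result as an upper bound, since the pads at the very start and end of the chain may not all need a joint.

```cpp
// Upper-bound estimate of solder joints: every pad on every LED is counted.
constexpr int solderPoints(int panels, int ledsPerPanel, int padsPerLed) {
    return panels * ledsPerPanel * padsPerLed;
}

// The build described here: 6 panels, 9 LEDs per panel, 6 pads per LED.
constexpr int thisLamp = solderPoints(6, 9, 6);
```

Doubling the number of panels doubles the count, which is worth keeping in mind before printing a wall-sized version.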

Wiring diagram

Breadboard schematic showing the wiring and components

Links to resources

- STL for 3D printing: https://www.thingiverse.com/thing:4686921

- Code for Arduino: https://github.com/jonas-werner/electroleaf

- HowtoMechatronics’ excellent guide on Bluetooth with Arduino and Android apps for sending / receiving data

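A note on programming the Arduino: please disconnect the TX and RX leads from the Arduino board while uploading a sketch, or the upload will fail, since the Bluetooth module otherwise interferes with the serial connection used for the upload. Reconnect the leads once the upload is done.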

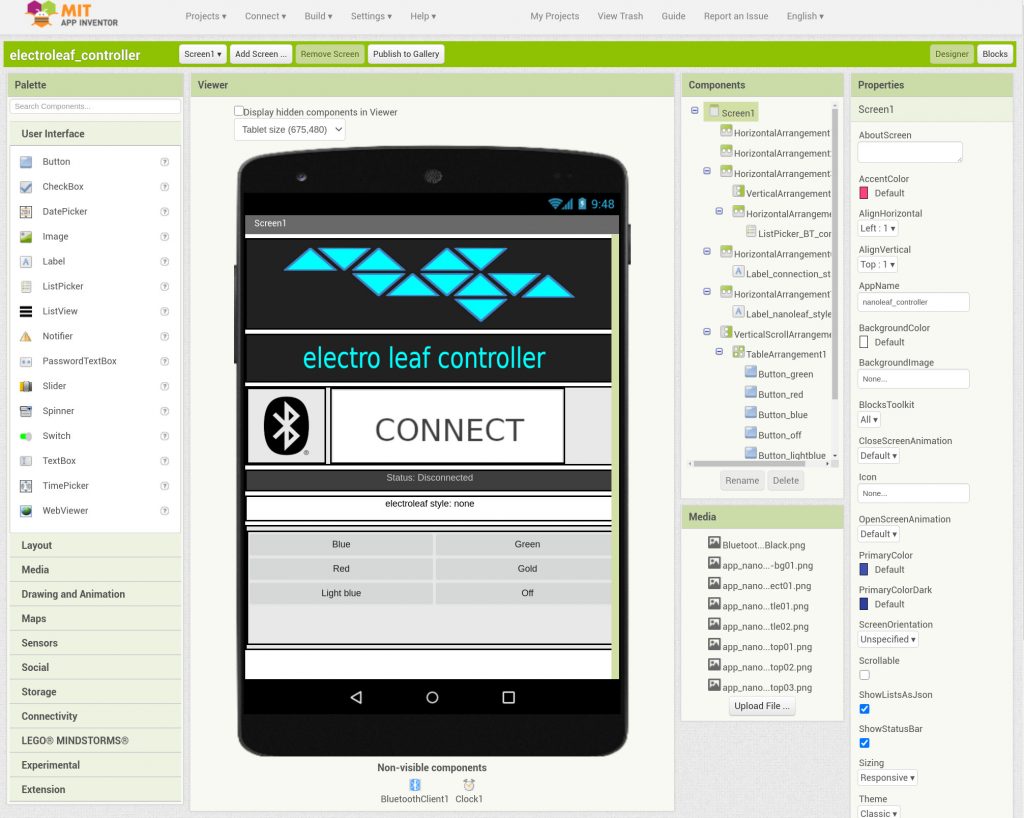

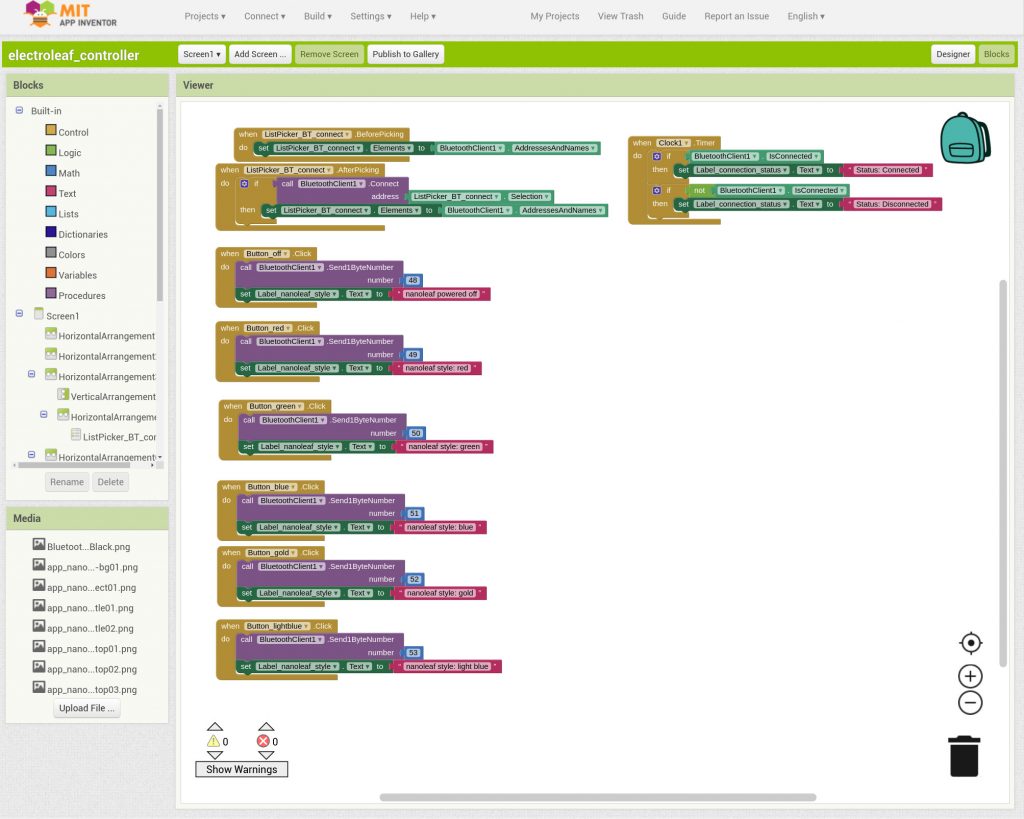

Android app

The app follows HowtoMechatronics’ guide almost exactly, with some minor changes. If the code for the Arduino is used without any major modifications, it’s possible to simply download and use the app as-is from here: link

However, I’d highly recommend creating your own using MIT App Inventor, as it’s pretty easy when following the guide. A couple of screenshots of the process are included below for reference, but I won’t duplicate the work done in the HowtoMechatronics guide.

The UI layout

The app logic

That’s it 🙂 Have fun creating your own lamps. As someone said: “Why buy something when you can build it for three times the cost?”