Exporting the details from a vSphere installation can be extremely useful prior to a migration to VMware Cloud on AWS. Alternatively it can be a useful way to gather a point-in-time snapshot of a VMware environment for documentation or planning purposes. It may even provide details and insights you didn’t even know were there 🙂

Tutorial for deploying and configuring VMware HCX in both on-premises and VMware Cloud on AWS with service mesh creation and L2 extension

Deploying HCX (VMware Hybrid Cloud Extension) is widely considered complex and difficult. It doesn’t help that it’s usually something you only do once, so it rarely pays to spend much effort learning it. However, as with most things, it’s not hard once you know how. This video shows how to deploy HCX both in VMC (VMware Cloud on AWS) and in the on-premises DC or lab.

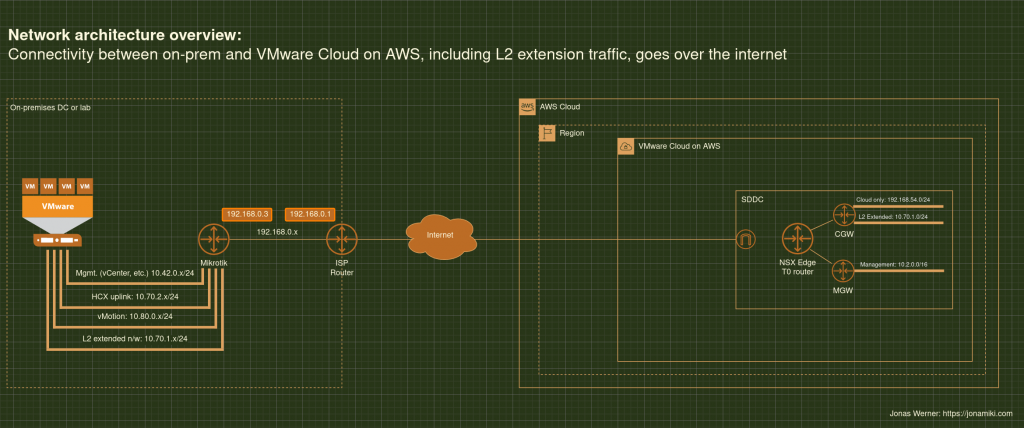

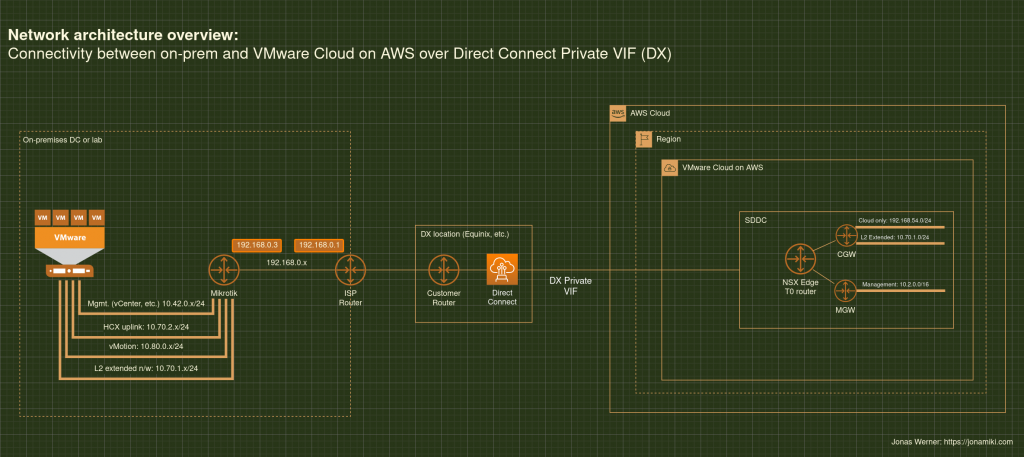

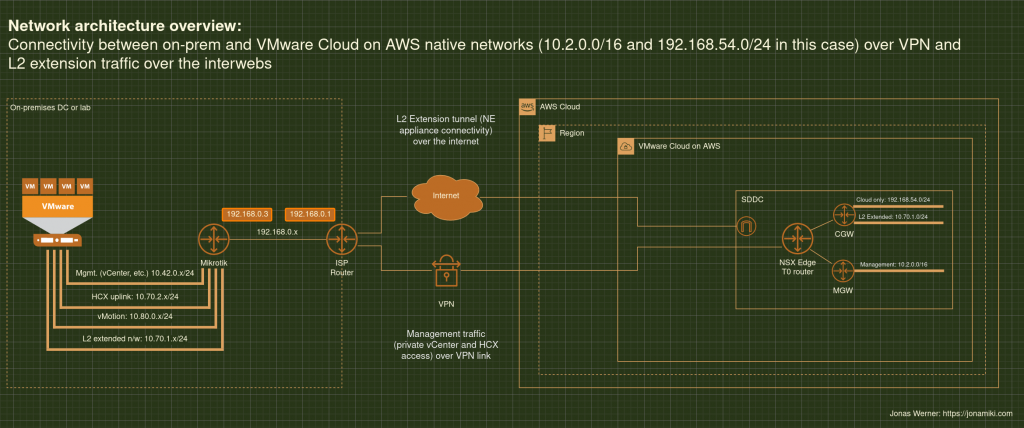

It covers creating the service mesh both over the internet and over a private connection, such as DX (AWS Direct Connect) or a VPN.

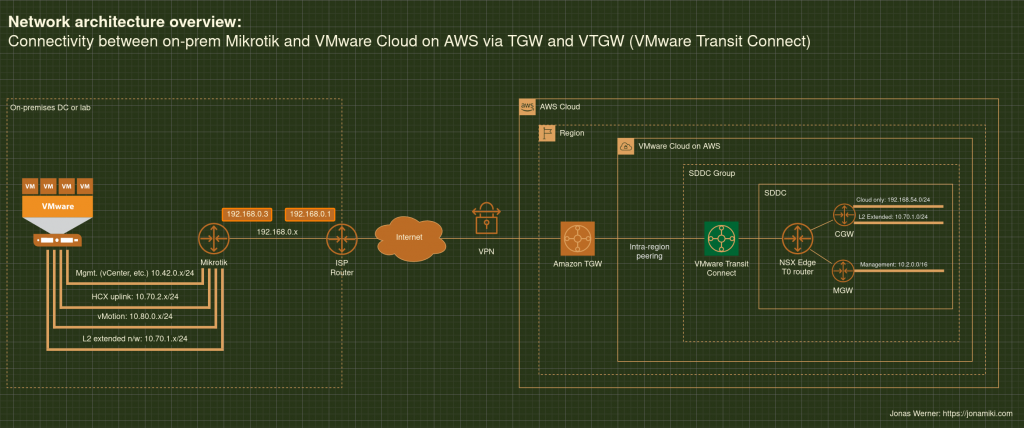

A VPN cannot be used for L2 extension if it is terminated on the VMC SDDC. In this tutorial I’ll use a VPN terminated on an AWS Transit Gateway (TGW), which in turn is peered with a VMware Transit Connect (VTGW) attached to the target SDDC.

Video chapters

- Switching vCenter to private IP and deploying HCX Cloud in VMC: https://youtu.be/ho2DY-TP-SA?t=43

- Initial SDDC firewall configuration: https://youtu.be/ho2DY-TP-SA?t=97

- Switching HCX to private IP and adding HCX firewall rules: https://youtu.be/ho2DY-TP-SA?t=405

- Downloading and deploying HCX for the on-prem DC side: https://youtu.be/ho2DY-TP-SA?t=585

- Adding HCX license, linking on-prem HCX with vCenter: https://youtu.be/ho2DY-TP-SA?t=740

- HCX site pairing between HCX Connector and HCX Cloud: https://youtu.be/ho2DY-TP-SA?t=959

- Creating HCX Network and Compute profiles: https://youtu.be/ho2DY-TP-SA?t=1011

- Choice: Deploy service mesh over public IP or private IP: https://youtu.be/ho2DY-TP-SA?t=1374

- Deploy service mesh over public IP: https://youtu.be/ho2DY-TP-SA?t=1399

- Live migrating a VM to AWS: https://youtu.be/ho2DY-TP-SA?t=1679

- Deploy service mesh over private IP (DX, VPN to TGW): https://youtu.be/ho2DY-TP-SA?t=1789

Some architecture diagrams for reference

Migrate VMware VMs from an on-prem DC to VMware Cloud on AWS (VMC) using Veeam Backup and Replication

When migrating from an on-premises DC to VMware Cloud on AWS it is usually recommended to use Hybrid Cloud Extension (HCX) from VMware. However, in some cases the IT team managing the on-prem DC is already using Veeam for backup and wants to use the same solution for the migration.

They may also prefer Veeam over HCX as HCX often requires professional services assistance for setup and migration planning. In addition, since HCX is primarily a tool for migrations, the customer is unlikely to have had experience setting it up in the past and while it is an excellent tool there is a learning curve to get started.

Migrating with Veeam vs. Migrating with HCX

| Veeam Backup & Replication | VMware Hybrid Cloud Extension (HCX) |
|---|---|
| Licensed (non-free) solution | Free with VMware Cloud on AWS |
| Arguably easy to set up and configure | Arguably challenging to set up and configure |
| Can do offline migrations of VMs, single or in bulk | Can do online migrations (no downtime), offline migrations, bulk migrations and online migrations in bulk (RAV), etc. |
| Cannot do L2 extension | Can do L2 extension of VLANs if they are connected to a vDS |
| Can be used for backup of VMs after they have been migrated | Is primarily used for migration. Does not have backup functionality |
| Support for migrating from older on-prem vSphere environments | At time of writing, full support for on-prem vSphere 6.5 or newer. Limited support for vSphere 6.0 up to March 12th 2023 |

What we are building

This guide covers installing and configuring a single Veeam Backup & Replication installation in the on-prem VMware environment and linking it to both the on-prem vCenter and the vCenter in VMware Cloud on AWS. Finally we do an offline migration of a VM to the cloud to prove that it works.

Prerequisites

The guide assumes the following is already set up and available

- On-premises vSphere environment with admin access (7.0 used in this example)

- Windows Server VM to be used for Veeam install

- Min spec here

- Windows Server 2019 was used for this guide

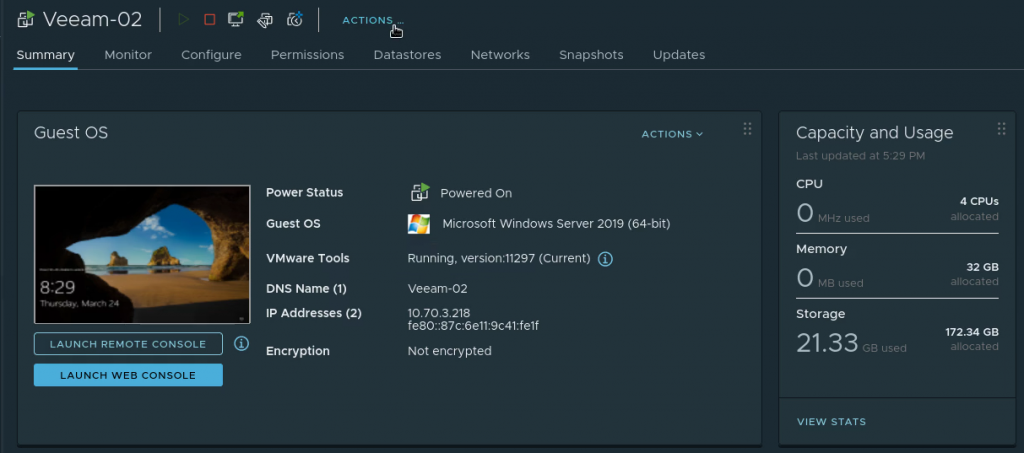

- Note: I initially used 2 vCPU, 4 GB RAM and a 60 GB HDD for my Veeam VM, but during the first migration the entire thing stalled and wouldn’t finish. After changing to 4 vCPU, 32 GB RAM and a 170 GB HDD the migration finished quickly and with no errors. I recommend assigning as many resources as is practical to the Veeam VM to speed up the migration

- One VMware Cloud on AWS (VMC) Software Defined Datacenter (SDDC)

- Private IP connectivity to the VMC SDDC

- Use Direct Connect (DX) or VPN but it must be private IP connectivity or it won’t work

- For this setup I used a VPN to a TGW, then a peering to a VMware Transit Connect (VTGW) which had an attachment to the SDDC, but any private connectivity setup will be OK

- A test VM to use for migration

Downloading and installing Veeam

Unless you already have a licensed copy, sign up for a trial license and then download Veeam Backup & Replication from here. Version 11.0.1.1216 was used in this guide.

In your on-premises vSphere environment, create or select a Windows Server VM to use for the Veeam installation. The VM spec used for this install is as follows:

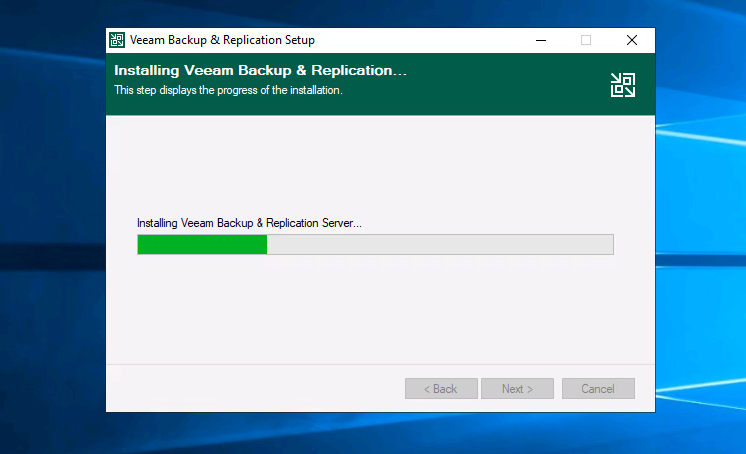

Run the install with default settings (next, next, next, etc.)

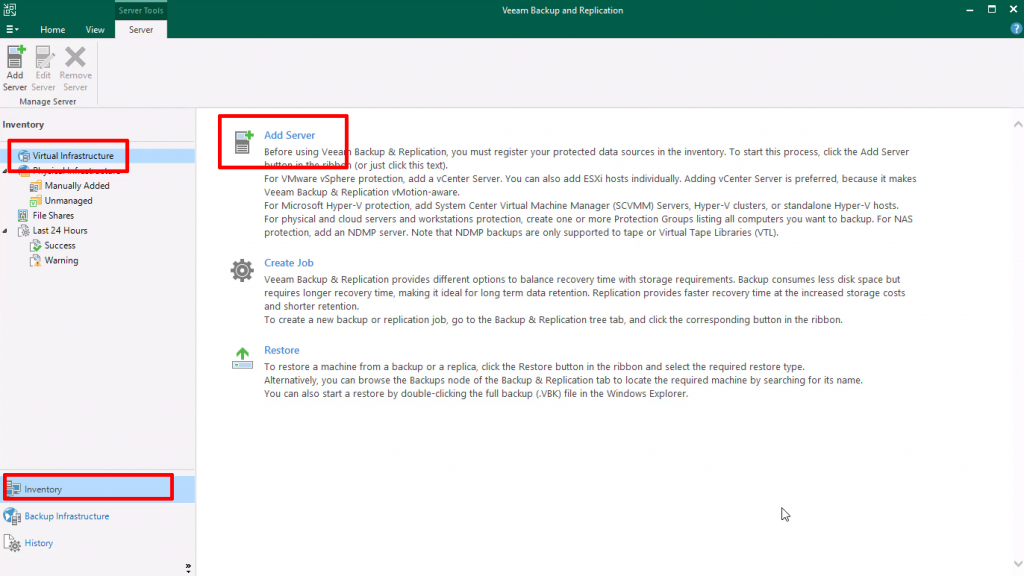

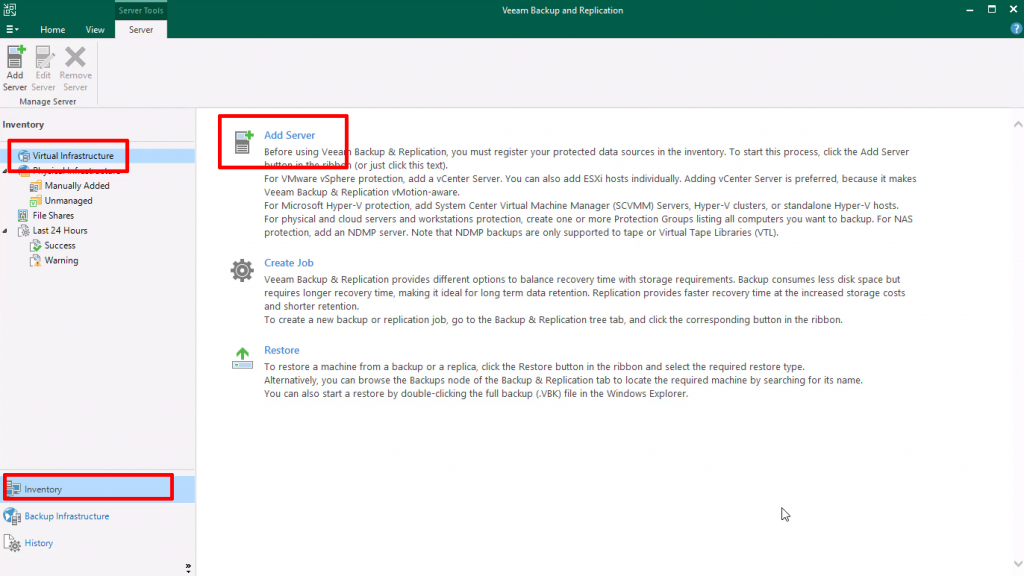

Register the on-prem vCenter in Veeam

Navigate to “Inventory” at the bottom left, then “Virtual Infrastructure” and click “Add Server” to register the on-prem vCenter server

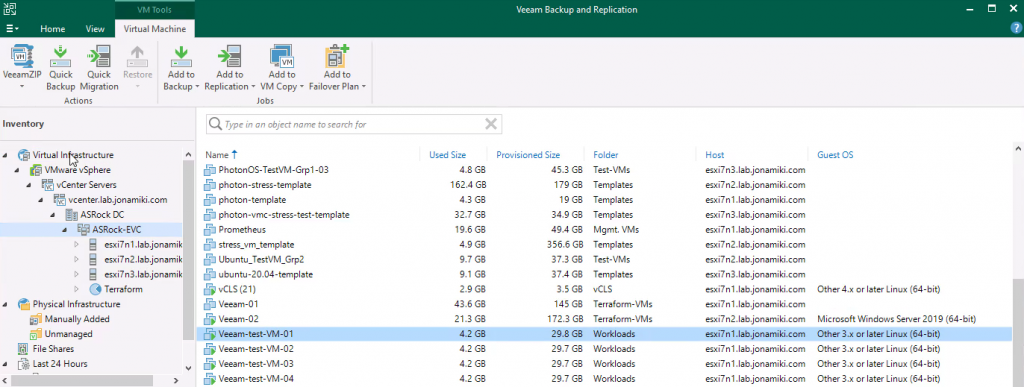

Listing VMs in the on-prem vSphere environment after the vCenter server has been registered in the Veeam Backup & Replication console

Switching on-prem connectivity to VMware Cloud on AWS SDDC to use private IP addresses

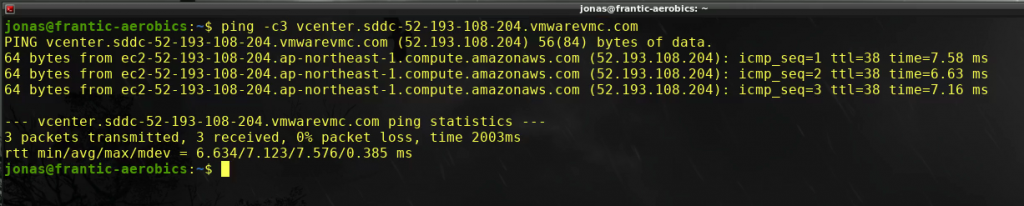

For this setup there is a VPN from the on-premises DC to the SDDC (via a TGW and VTGW in this case) but the SDDC FQDN is still configured to return the public IP address. Let’s verify by pinging the FQDN

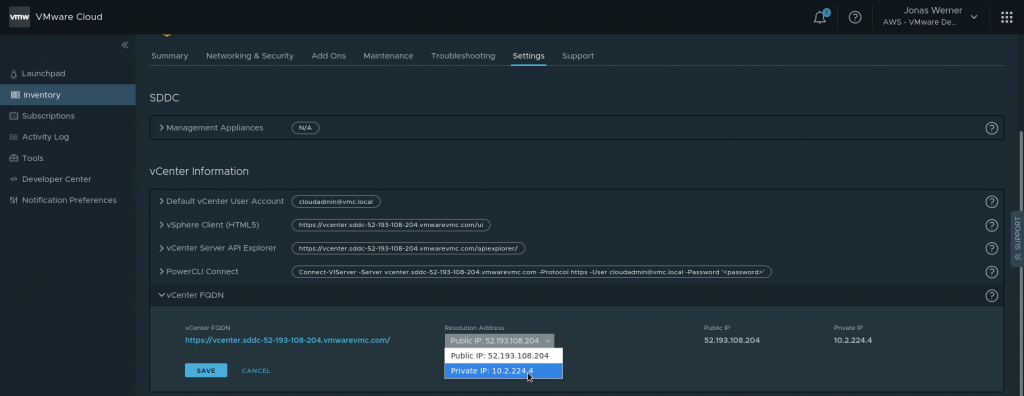

Switching the SDDC to return the private IP is easy. In the VMware Cloud on AWS web console, navigate to “Settings” and flip the IP to return from public to private

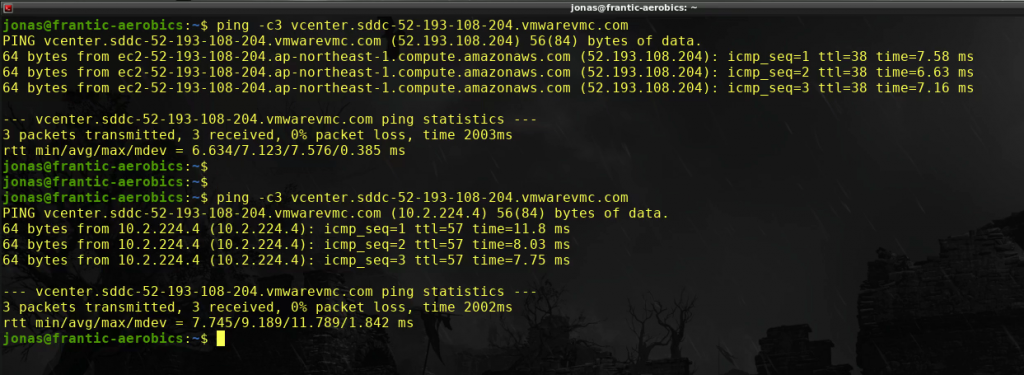

Ping the vCenter FQDN again to verify that private IP is returned by DNS and that we can ping it successfully over the VPN

All looks good. The private IP is returned. Time to register the VMware Cloud on AWS vCenter instance in the Veeam console
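The DNS check above can also be scripted, which is handy if you switch between public and private resolution more than once. Below is a minimal sketch using only the Python standard library. The function names are my own, and the FQDN in the usage note is a hypothetical placeholder, not a real SDDC.

```python
import ipaddress
import socket


def is_private_address(address: str) -> bool:
    """Return True if the address is in a private (non-public) range."""
    return ipaddress.ip_address(address).is_private


def resolve_ipv4(fqdn: str) -> list[str]:
    """Return the IPv4 addresses that DNS currently hands out for fqdn."""
    infos = socket.getaddrinfo(fqdn, 443, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def report(fqdn: str) -> None:
    """Print each resolved address and whether it is private or public."""
    try:
        for address in resolve_ipv4(fqdn):
            kind = "private" if is_private_address(address) else "public"
            print(f"{fqdn} -> {address} ({kind})")
    except socket.gaierror as err:
        print(f"Could not resolve {fqdn}: {err}")
```

Call `report("vcenter.sddc-example.vmwarevmc.com")` with your own vCenter FQDN before and after flipping the setting. This only confirms what DNS returns; a ping over the VPN is still needed to confirm reachability.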

Registering the VMC vCenter instance with Veeam

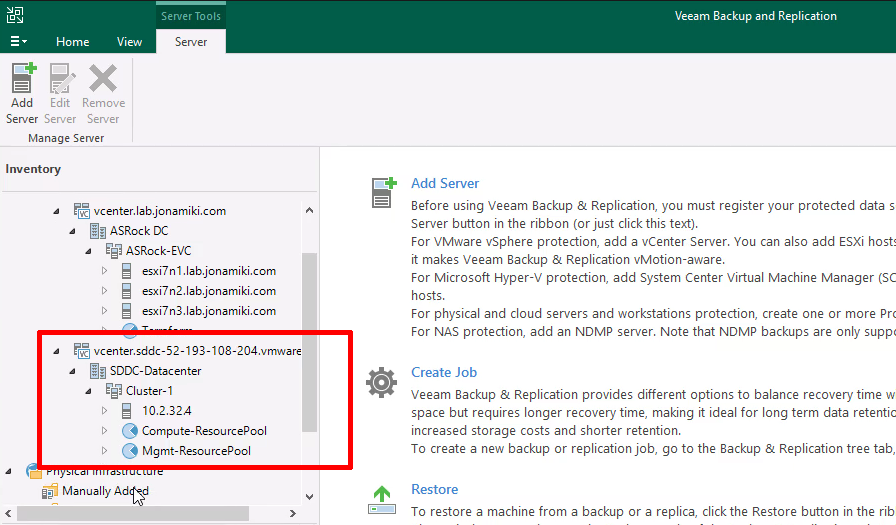

Just use the same method as when adding the on-premises vCenter server: Navigate to “Inventory” at the bottom left, then “Virtual Infrastructure” and click “Add Server”, this time to register the VMware Cloud on AWS vCenter server with Veeam

After adding the VMware Cloud on AWS SDDC vCenter the resource pools will be visible in the Veeam console

Now both vSphere environments are registered. Time to migrate a VM to the cloud!

Migrating a VM to VMware Cloud on AWS

Below is both a video and a series of screenshots describing the migration / replication job creation for the VM.

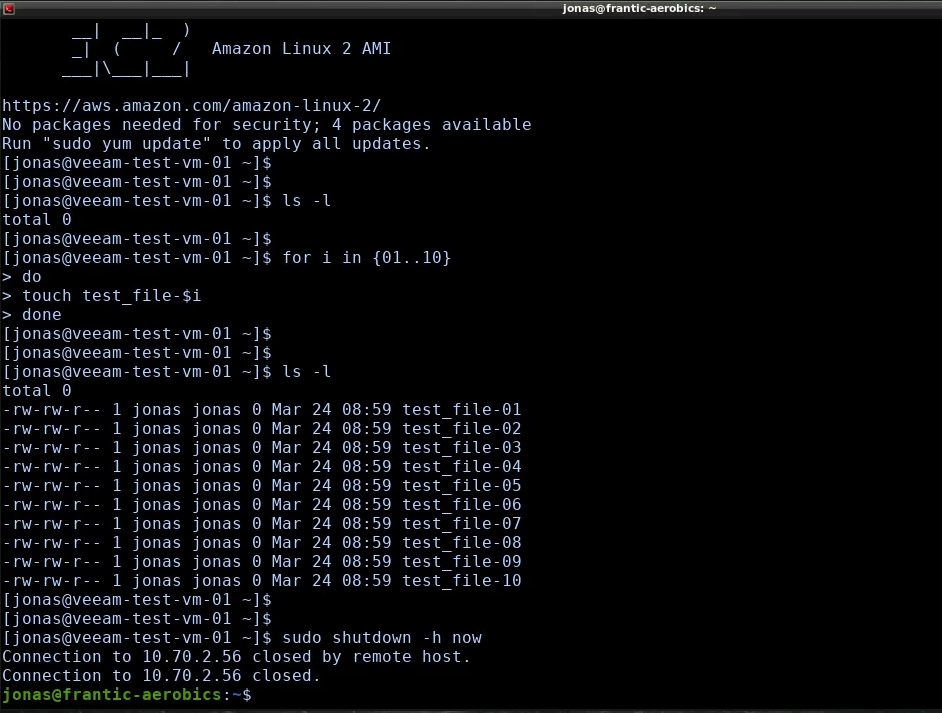

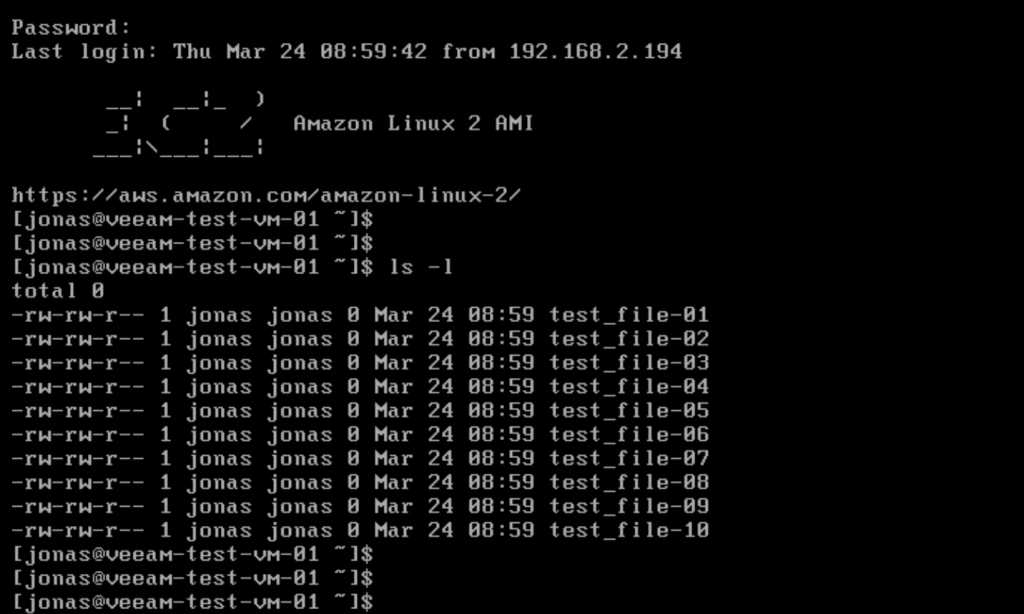

Creating some test files on the source VM to be migrated

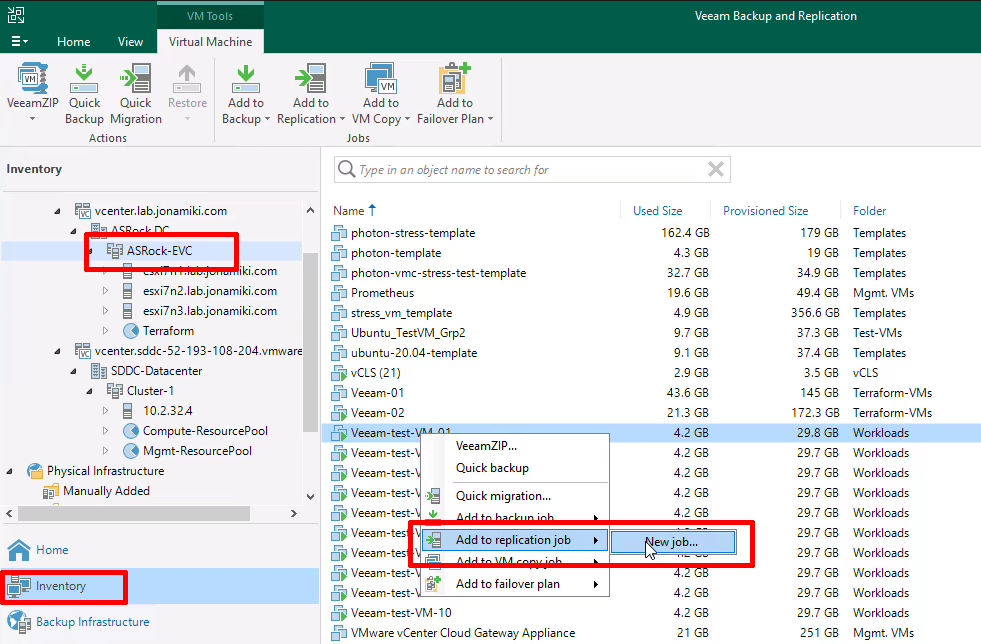

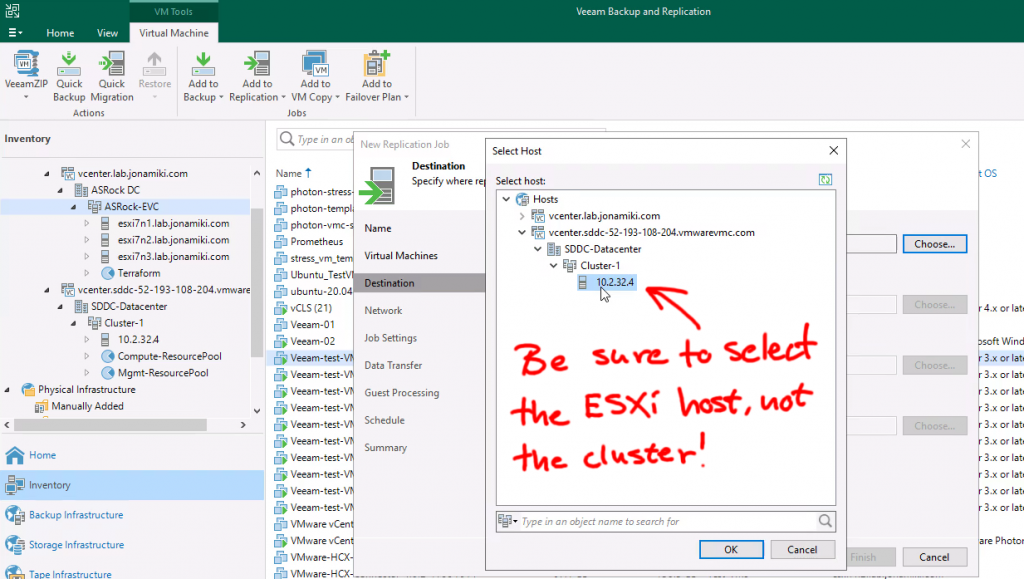

Navigate to “Inventory” using the bottom left menu, click the on-premises vCenter server / Cluster and locate a VM to migrate in the on-premises DC VM inventory. Right-click the VM to migrate and create a replication job

When selecting the target for the replication, be sure to expand the VMware Cloud on AWS cluster and select one of the ESXi servers. Selecting the cluster is not enough to list the required resources, such as storage volumes

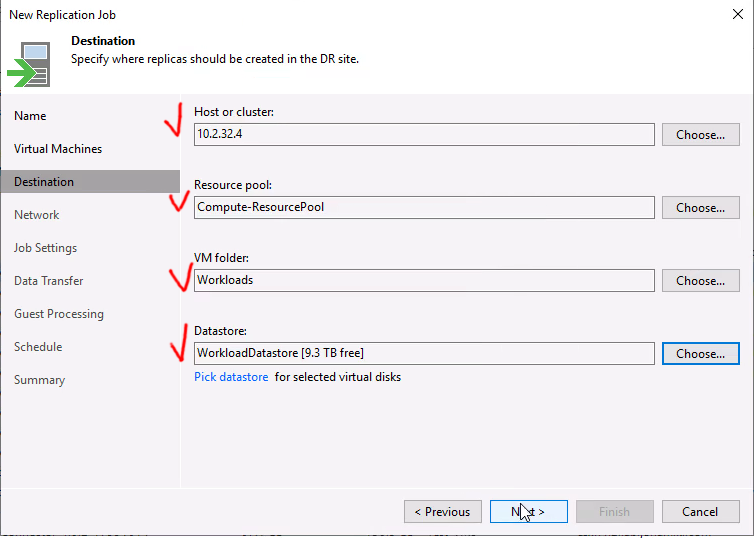

Since VMC is a managed environment we want to select the customer-side of the storage, folder and resource pools

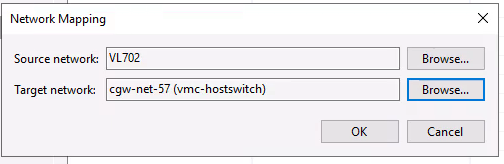

If you checked the box for remapping the network, it is even possible to select a target VLAN for the VM to be connected to on the cloud side!

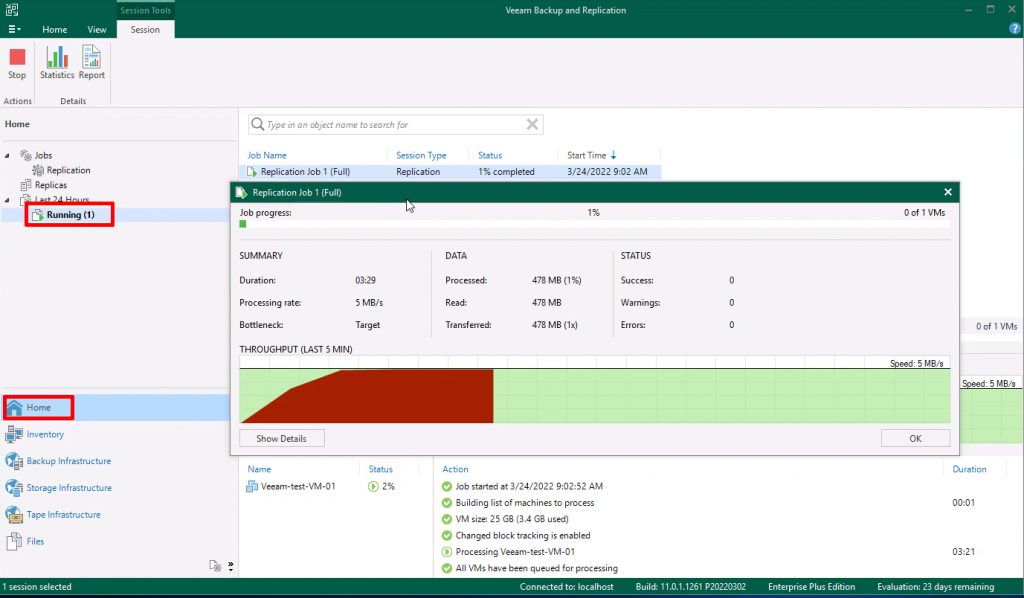

Check “Run the job when I click finish” and move to the “Home” tab to view the “Running jobs”

The migration of the test VM finished in less than 9 minutes

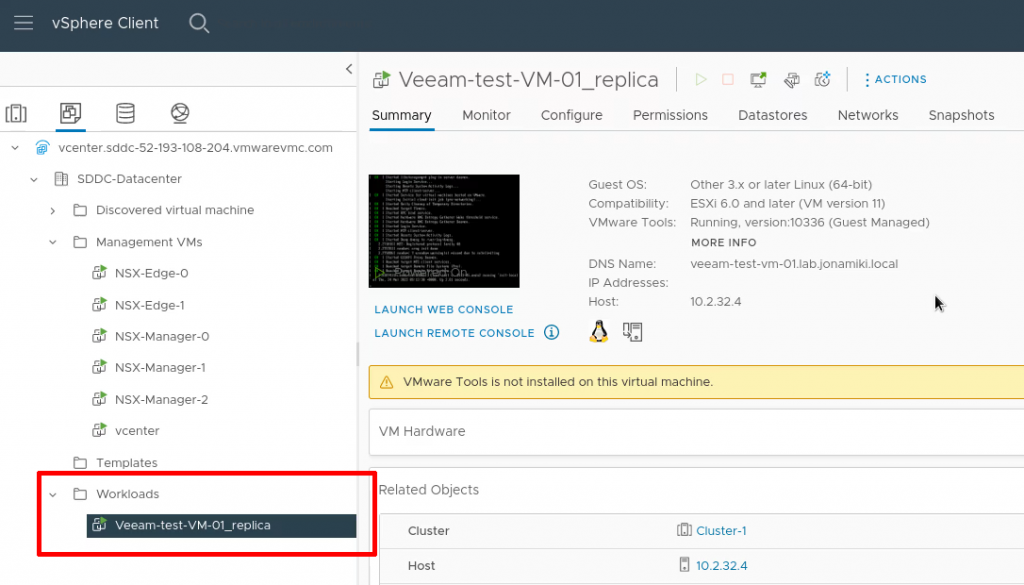

In the vCenter client for VMware Cloud on AWS we can verify that the replicated VM is present

After logging in and listing the files we can verify that the VM is not only working but also has the test files present in the home directory

Thank you for reading! Hopefully this has provided an easy-to-understand summary of the steps required for a successful migration / replication of VMs to VMC using Veeam

Using NSX Autonomous Edge to extend L2 networks from on-prem to VMware Cloud on AWS

This is a quick, practical and unofficial guide showing how to use NSX Autonomous Edge to do L2 extension / stretching of VLANs from on-prem to VMware Cloud on AWS.

Note: The guide covers how to do L2 network extension using NSX Autonomous Edge. It doesn’t cover the deployment or use of HCX or HLM for migrations.

Why do L2 extension?

One use case for L2 extension is for live migration of workloads to the cloud. If the on-prem network is L2 extended / stretched there will be no interruption to service while migrating and no need to change IP or MAC address on the VM being migrated.

Why NSX Autonomous Edge?

VMware offers a very powerful tool – HCX (Hybrid Cloud Extension) to make both L2 extension and migrations of workloads a breeze. It is also provided free of charge when purchasing VMware Cloud on AWS. Why would one use another solution?

- No need to have a vSphere Enterprise Plus license

L2 extension with HCX requires Distributed vSwitches, which in turn are only available with the top-level vSphere Enterprise Plus license. Many customers only have the Standard vSphere license and therefore can’t use HCX for L2 extension (although they can use it for the migration itself, which will be shown later in this post). NSX Autonomous Edge works just fine with standard vSwitches, so the Standard vSphere license is enough

- Active / standby HA capabilities

HCX doesn’t include active / standby redundancy. Sure, you can enable HA and even FT on the cluster, but FT maxes out at 4 VMs / cluster and HA might not be enough if your VMs are completely reliant on HCX for connectivity. NSX Autonomous Edge allows two appliances to be deployed in an HA configuration.

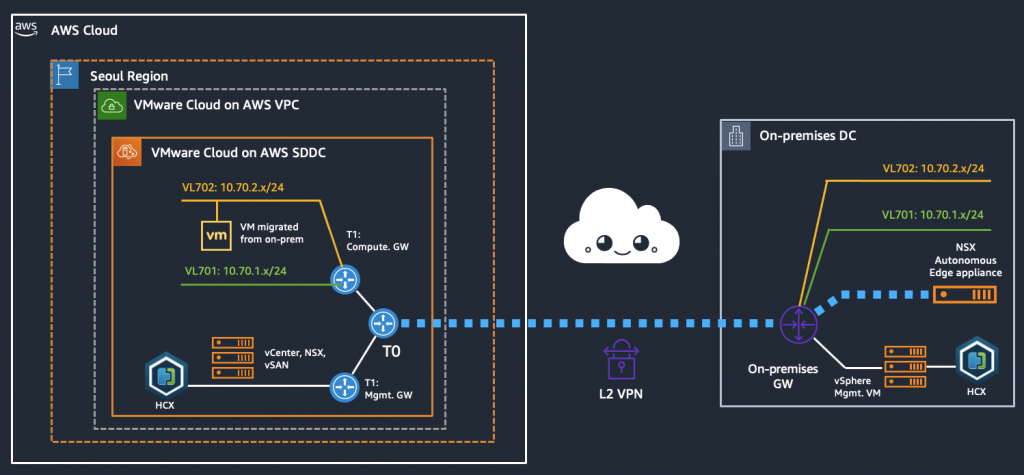

Configuration diagram (what are we creating?)

We have an on-prem environment with multiple VLANs, two of which we want to stretch to VMware Cloud on AWS and then migrate a VM across, verifying that it can be used throughout the migration. In this case we use NSX Autonomous Edge for the L2 extension of the networks while using HCX for the actual migration.

Prerequisites

- A deployed VMware Cloud on AWS SDDC environment

- Open firewall rules on your SDDC to allow traffic from your on-prem DC network (create a management GW firewall rule and add your IP as allowed to access vCenter, HCX, etc.)

- If HCX is used for vMotion: A deployed HCX environment and service mesh (configuration of HCX is out of scope for this guide)

Summary of configuration steps

- Enable L2 VPN on your VMC on AWS SDDC

- Download the NSX Autonomous Edge appliance from VMware

- Download the L2 VPN Peer code from the VMC on AWS console

- Create two new port groups for the NSX Autonomous Edge appliance

- Deploy the NSX Autonomous Edge appliance

- L2 VPN link-up

- Add the extended network segments in the VMC on AWS console

- VM migration using HCX (HCX deployment not shown in this guide)

Video of the setup process

As an alternative or addition to the written guide, feel free to refer to the video below. It covers the same steps, but quicker and in a slightly different order. The outcome is the same.

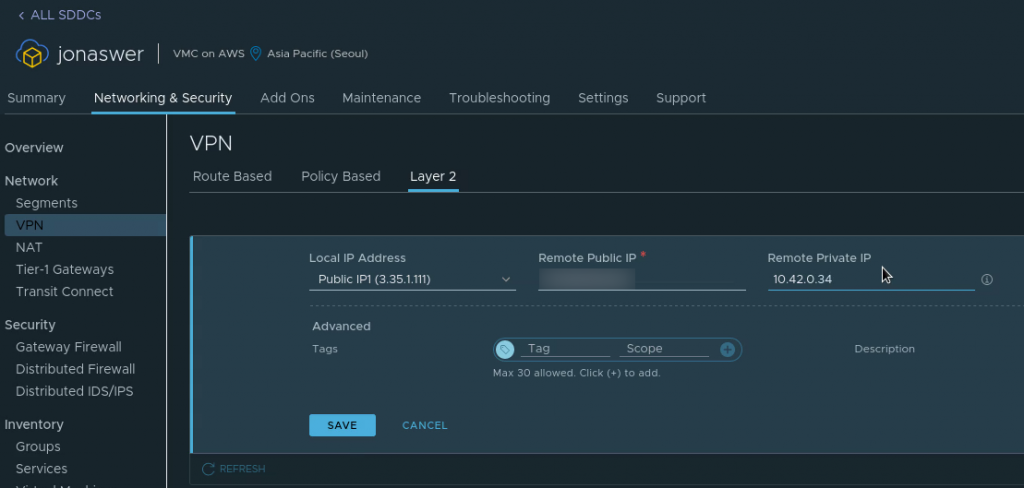

Enable L2 VPN on your VMC on AWS SDDC

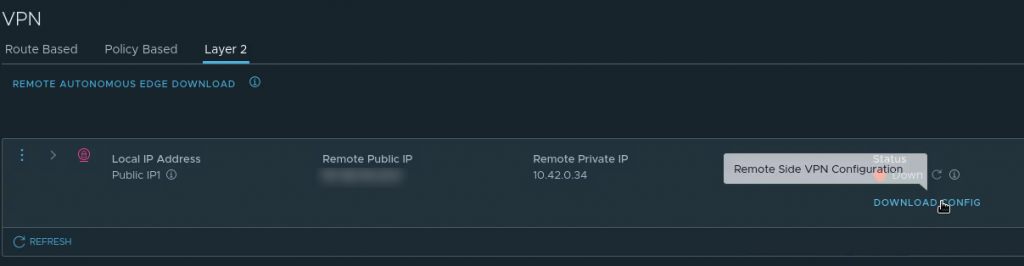

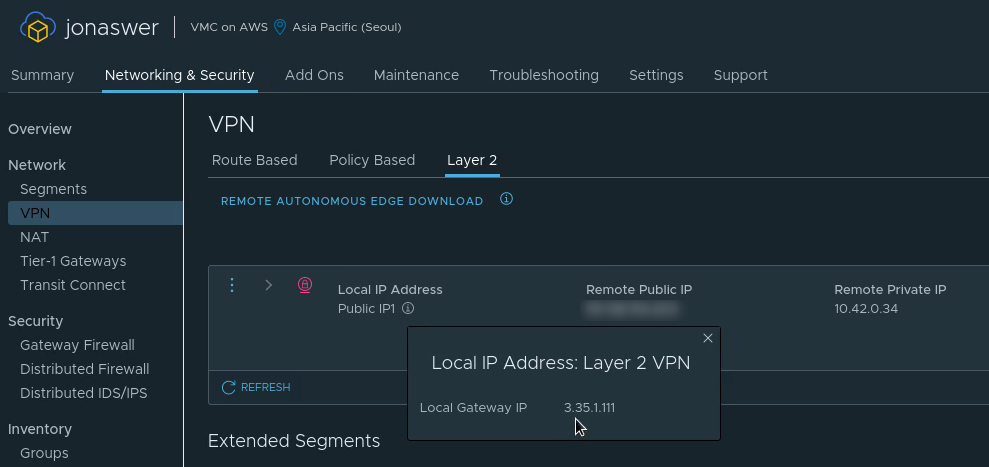

- Navigate to “Networking & Security”, Click “VPN” and go to the “Layer 2” tab

- Click “Add VPN tunnel”

- Set the “Local IP address” to be the VMC on AWS public IP

- Set the “Remote public IP” to be the public IP address of your on-prem network

- Set the “Remote private IP” to be the internal IP you intend to assign the NSX Autonomous Edge appliance when deploying it in a later step
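The three addresses above are easy to mix up, so a quick sanity check before clicking save can help. The following is a minimal sketch, assuming the field meanings described in the list. The class name, field names and messages are hypothetical and not part of any VMware API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class L2VpnTunnel:
    local_ip: str           # "Local IP address": the SDDC's public IP
    remote_public_ip: str   # "Remote public IP": public IP of the on-prem network
    remote_private_ip: str  # "Remote private IP": IP planned for the edge appliance


def check_tunnel(tunnel: L2VpnTunnel, edge_uplink_ip: str) -> list[str]:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if ipaddress.ip_address(tunnel.local_ip).is_private:
        problems.append("Local IP address should be the SDDC public IP")
    if ipaddress.ip_address(tunnel.remote_public_ip).is_private:
        problems.append("Remote public IP should be a public address")
    if not ipaddress.ip_address(tunnel.remote_private_ip).is_private:
        problems.append("Remote private IP should be an internal address")
    # The appliance uplink IP set at deployment time has to match this value.
    if ipaddress.ip_address(tunnel.remote_private_ip) != ipaddress.ip_address(edge_uplink_ip):
        problems.append("Remote private IP must match the edge appliance uplink IP")
    return problems
```

Here `edge_uplink_ip` stands for the address you plan to enter as the uplink interface IP when deploying the appliance later on.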

Download the NSX Autonomous Edge appliance

After deploying the L2 VPN in VMC on AWS there will be a pop-up with links for downloading the virtual appliance files as well as a link to a deployment guide

- Download link for NSX Autonomous Edge (Choose NSX Edge for VMware ESXi)

- Link to deployment guide (Please use instead if you find my guide to be a bit rubbish)

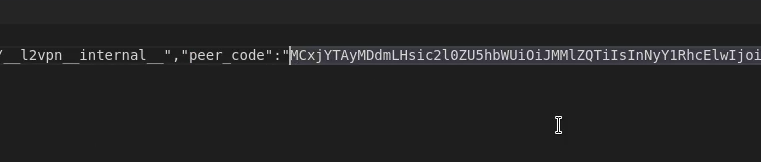

Download the L2 VPN Peer code from the VMC on AWS console

Download the Peer code for your new L2 VPN from the “Download config” link on the L2 VPN page in the VMware Cloud on AWS console. It will be available after creating the VPN in the previous step and can be saved as a text file

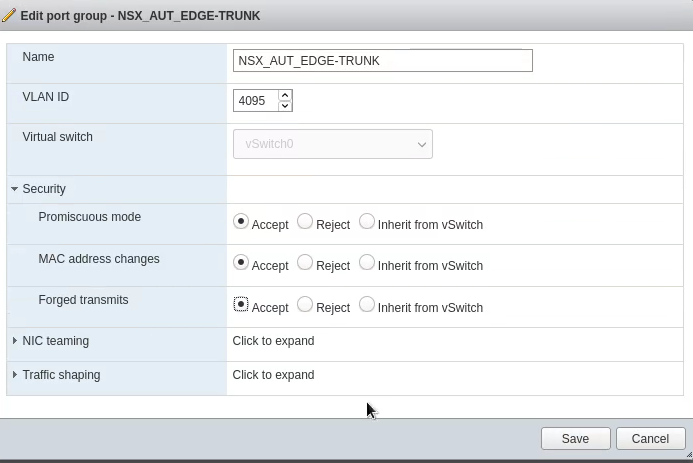

Create two new port groups for the NSX Autonomous Edge appliance

This is for your on-prem vSphere environment. The official VMware deployment guide suggests creating a port group for the “uplink” and another for the “trunk”. The uplink provides internet access through which the L2 VPN is created. The “trunk” port connects to all VLANs you wish to extend.

In this case I used an existing port group with internet access for the uplink and only created a new one for the trunk.

For the “trunk” port group: Since this PG needs to talk to all VLANs you wish to extend, please create it under a vSwitch whose uplinks have those VLANs tagged.

A port group would normally only have a single VLAN set. How do we “catch them all”? Simply set “4095” as the VLAN number. Also set the port group to “Accept” the following:

- Promiscuous mode

- MAC Address changes

- Forged transmits
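As a checklist in code form, the trunk port group requirements above could be expressed like this. It is only a sketch: the dictionary keys are names I made up for illustration and do not correspond to vSphere API properties, and the values would have to be read off the port group settings by hand or by your own tooling.

```python
# VLAN ID 4095 on a standard vSwitch port group passes all tagged VLANs through.
TRUNK_VLAN_ID = 4095

# Hypothetical setting names; all three must be set to "Accept".
REQUIRED_SECURITY = {
    "promiscuous_mode": "Accept",
    "mac_address_changes": "Accept",
    "forged_transmits": "Accept",
}


def trunk_port_group_issues(vlan_id: int, security: dict[str, str]) -> list[str]:
    """Return a list of settings that differ from what the trunk port group needs."""
    issues = []
    if vlan_id != TRUNK_VLAN_ID:
        issues.append(f"VLAN ID is {vlan_id}, expected {TRUNK_VLAN_ID}")
    for setting, expected in REQUIRED_SECURITY.items():
        # A setting that is missing is treated as "Reject".
        actual = security.get(setting, "Reject")
        if actual != expected:
            issues.append(f"{setting} is {actual}, expected {expected}")
    return issues
```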

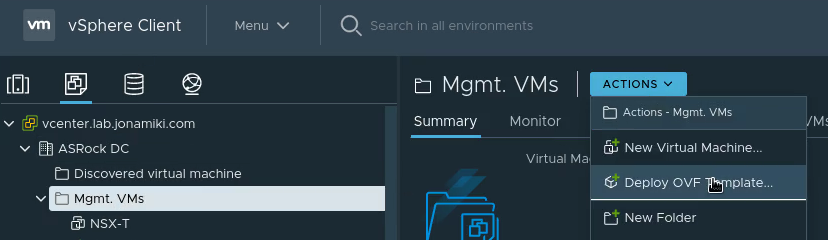

Deploy the NSX Autonomous Edge appliance

The NSX Autonomous Edge can be deployed as an OVF template. In the on-prem vSphere environment, select deploy OVF template

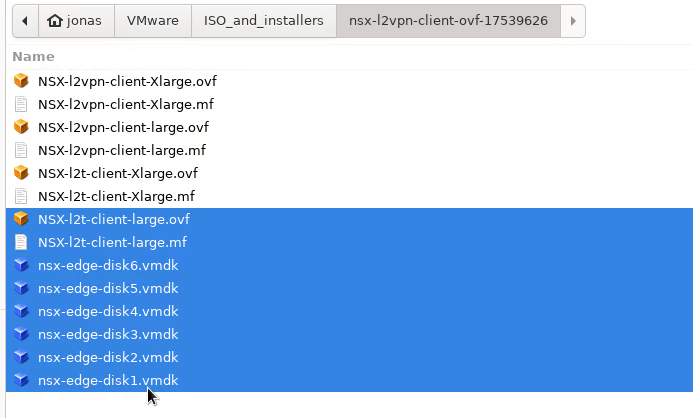

Browse to where you downloaded the NSX Autonomous Edge appliance from the VMware support page. The downloaded appliance files will likely contain several appliance types using the same base disks. I used the “NSX-l2t-client-large” appliance:

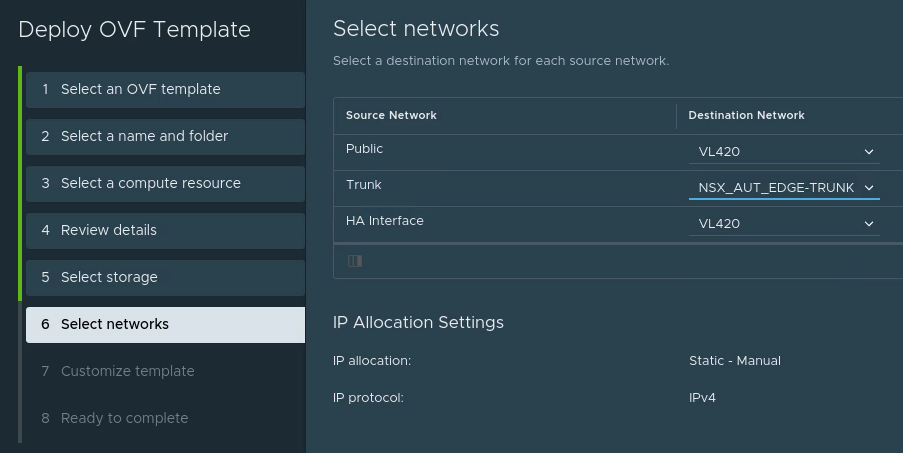

For the network settings:

- Use any network with a route to the internet and good throughput as the “Public” network.

- The “Trunk” network should be the port group with VLAN 4095 and the changed security settings we created earlier.

- The “HA Interface” should be whatever network you wish to use for HA. In this case HA wasn’t used as it was a test deployment, so the same network as “Public” was selected.

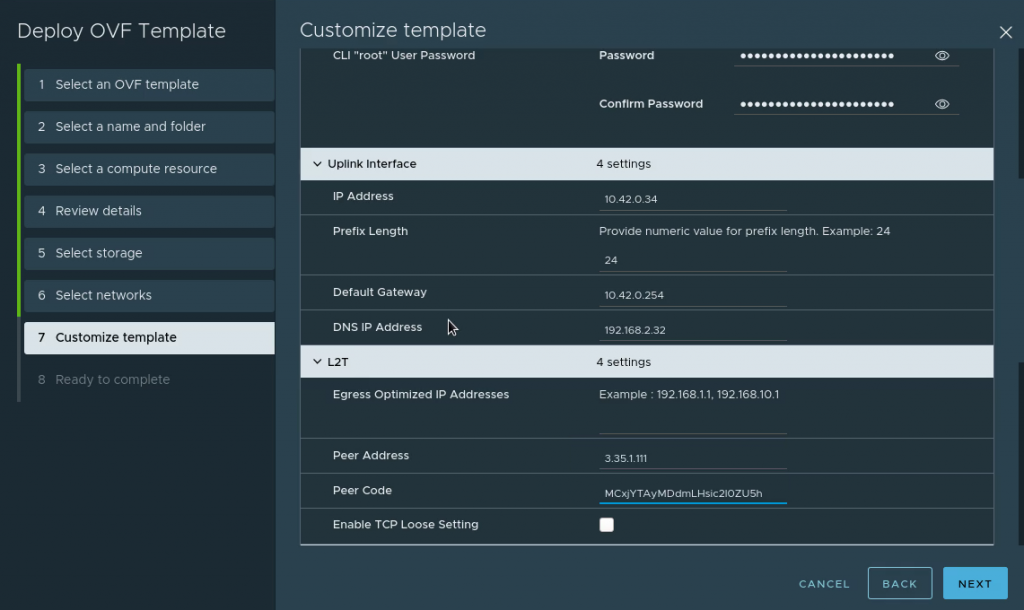

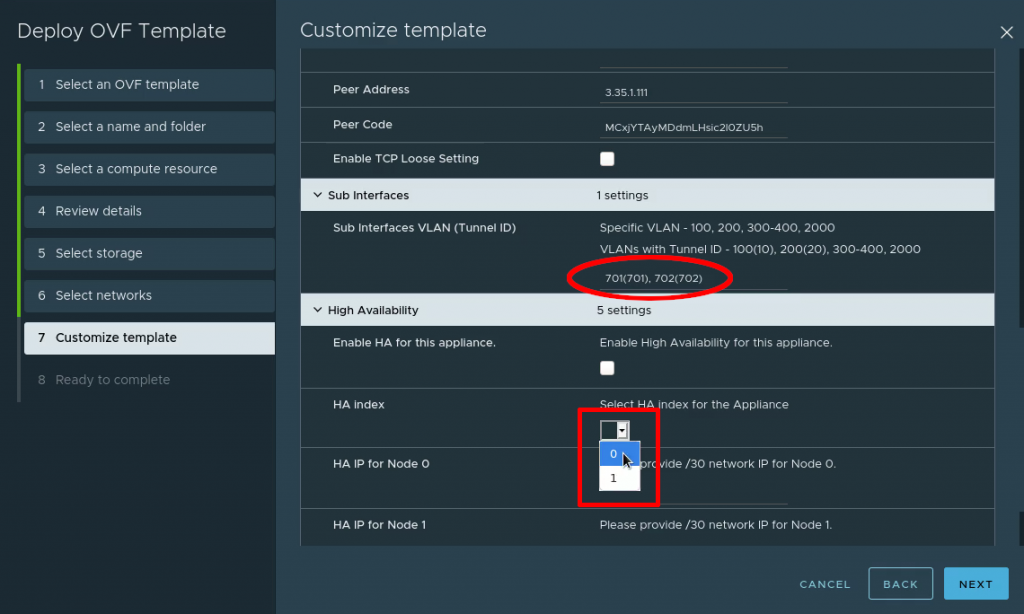

For the Customize template part, enter the following:

- Passwords: Desired passwords

- Uplink interface: Set the IP you wish the appliance to have on your local network (match with what you set for the “Remote Private IP” in the L2 VPN settings in VMC on AWS at the beginning)

- L2T: Set the public IP address shown in VMC on AWS console for your L2 VPN and use the Peering code downloaded when creating the L2 VPN at the start.

Enable TCP Loose Setting: To keep any existing connections alive during migration, check this box. For example, if you have an SSH session to the VM you want to migrate which you wish to keep alive.

The Sub interfaces: This is the most vital part and the easiest place to make mistakes. For the Sub interfaces, add your VLAN number followed by the tunnel ID in brackets. This will assign each VLAN a tunnel ID and we will use it on the other end (the cloud side) to separate out the VLANs.

They should be written as: VLAN(tunnel-ID). Example for VLAN 100 and tunnel ID 22 would look like this: 100(22). For our lab we extend VLANs 701 and 702 and will also assign them tunnel IDs which match the VLAN number. For multiple VLANs, use comma followed by space to separate them. Don’t use ranges. Enter each VLAN with its respective tunnel ID individually.
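Since this field is the easiest place to make a typo, it can be worth generating the string rather than typing it. Here is a small sketch that builds the value in the format described above. The function name is my own, and the check for duplicate tunnel IDs is an assumption on my part, based on the tunnel ID being used to tell the VLANs apart on the cloud side.

```python
def format_sub_interfaces(vlan_to_tunnel: dict[int, int]) -> str:
    """Build the sub-interface string, one 'VLAN(tunnel-ID)' entry per VLAN."""
    tunnel_ids = list(vlan_to_tunnel.values())
    if len(set(tunnel_ids)) != len(tunnel_ids):
        # Assumption: each VLAN needs its own tunnel ID.
        raise ValueError("Each VLAN needs its own tunnel ID")
    entries = []
    for vlan, tunnel_id in sorted(vlan_to_tunnel.items()):
        if not 1 <= vlan <= 4094:
            raise ValueError(f"VLAN {vlan} is outside the valid range 1-4094")
        entries.append(f"{vlan}({tunnel_id})")
    # Entries are separated by a comma followed by a space; no ranges.
    return ", ".join(entries)
```

For the lab in this guide, `format_sub_interfaces({701: 701, 702: 702})` gives `701(701), 702(702)`.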

HA index: Funny detail: HA is optional, but if you don’t set the HA index on your initial appliance it won’t boot. Even if you don’t intend to use HA, please set this to “0”. The HA section is not marked as “required” by the deployment wizard, so it is entirely possible to deploy a non-functioning appliance.

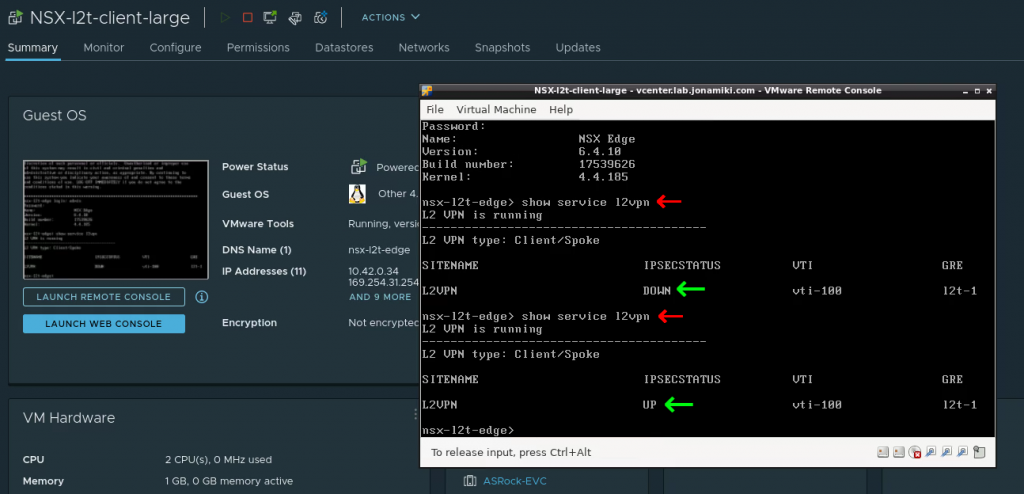

L2 VPN link-up

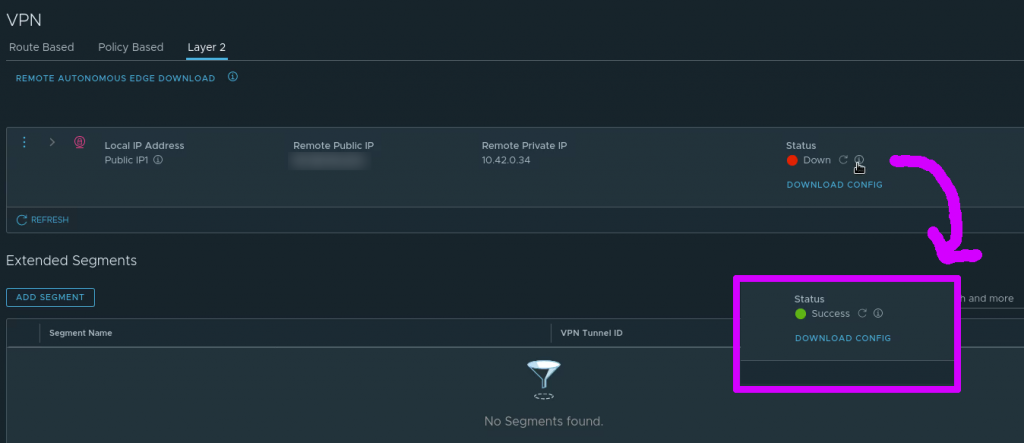

The L2 VPN tunnel will connect automatically using the settings provided when deploying the appliance. Open the console of the L2 VPN appliance, log in with “admin / <your password>” and issue the command “show service l2vpn”. After a moment the link will come up (provided that the settings used during deployment were correct).

In the VMC on AWS console the VPN can also be seen to change status from “Down” to “Success”

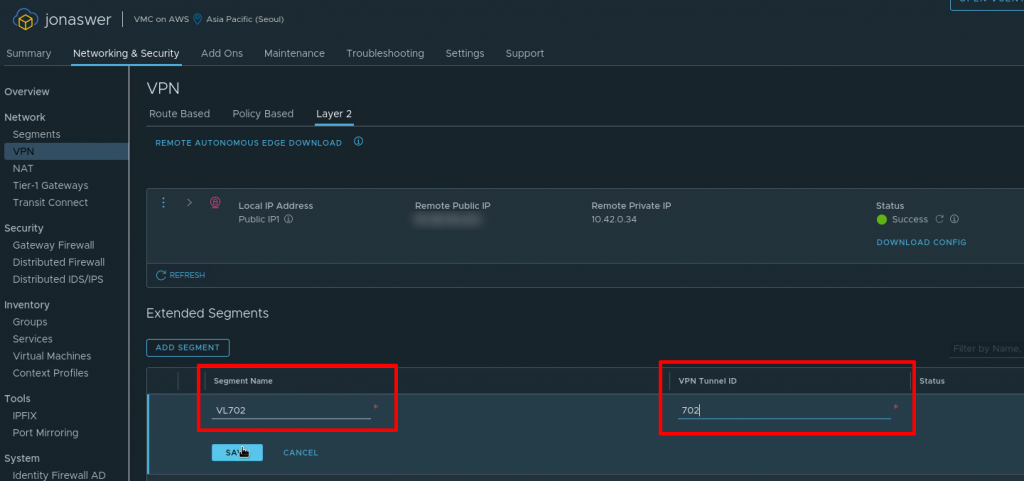

Add the extended network segments in the VMC on AWS console

Under the L2 VPN settings tab in the VMC on AWS console it is now time to add the VLANs we want to extend from on-prem. In this example we will add the single VLAN 702 which we gave the tunnel ID “702” during the NSX Autonomous Edge deployment

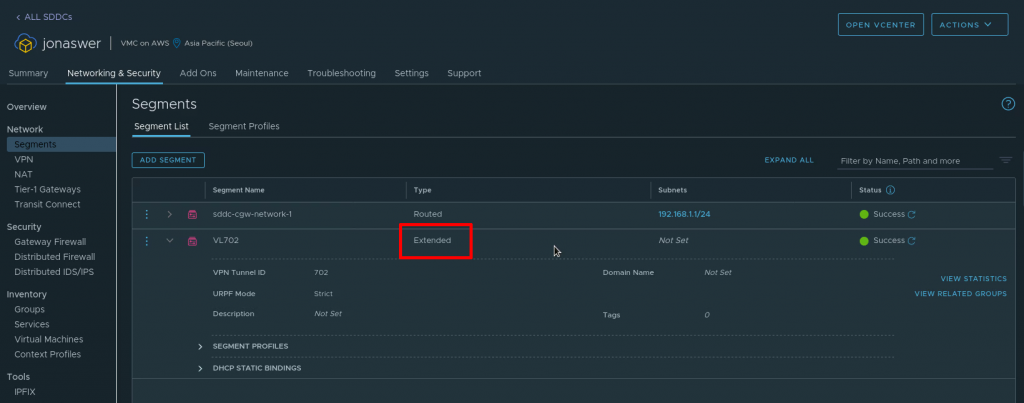

The extended network can now be viewed under “Segments” in the VMC on AWS console and will be listed as type “Extended”

VM migration using HCX

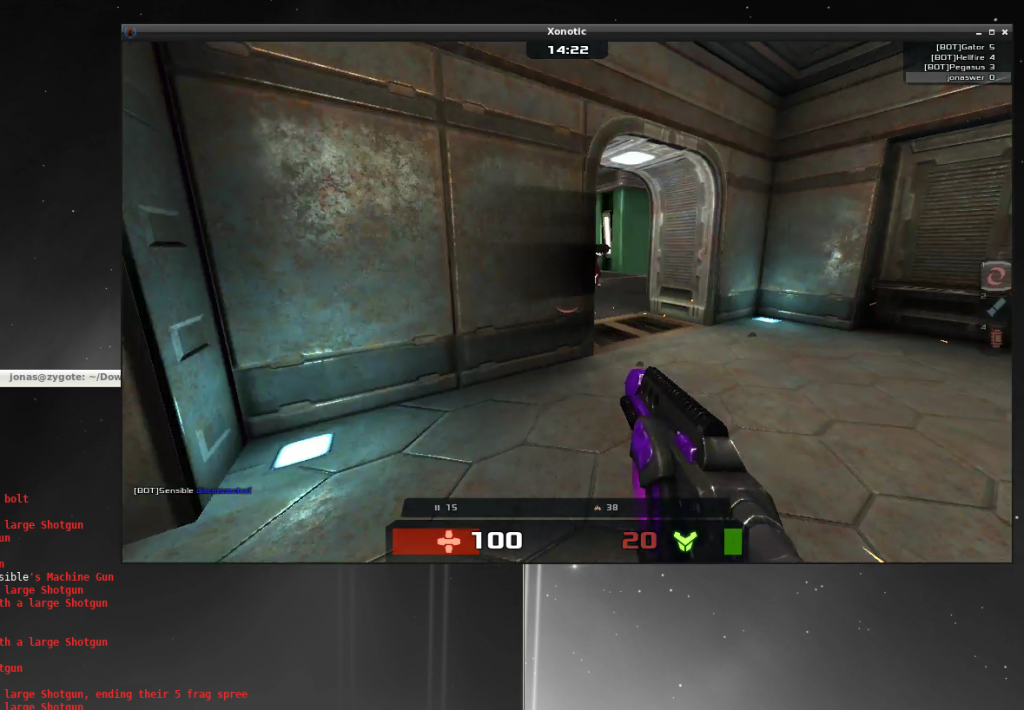

Now the network has been extended and we can test it by migrating a VM from on-prem to VMC on AWS. To verify that it works we’ll run Xonotic, an open source shooter, on the VM and keep a game going throughout the migration.

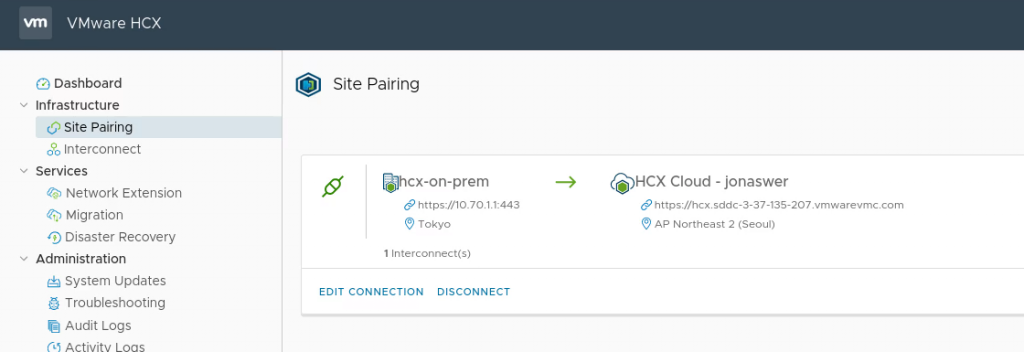

Verifying that our HCX link to the VMC on AWS environment is up

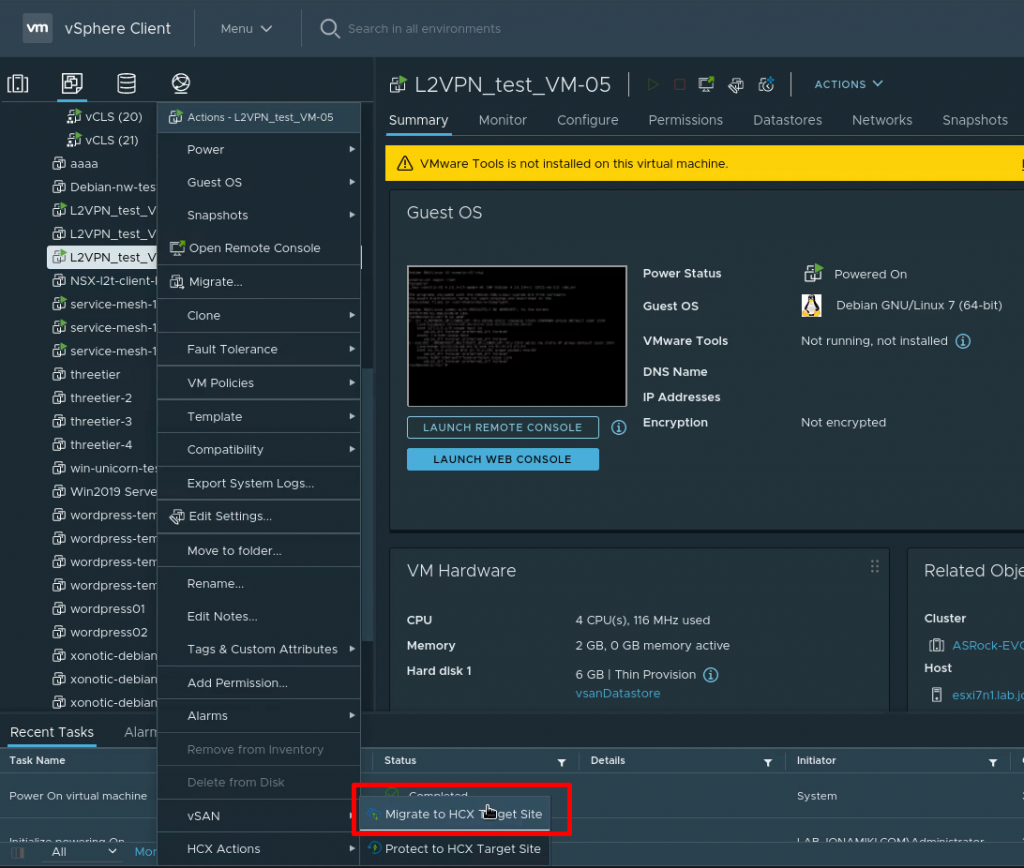

Starting the migration by right-clicking the VM in our local on-prem vCenter environment

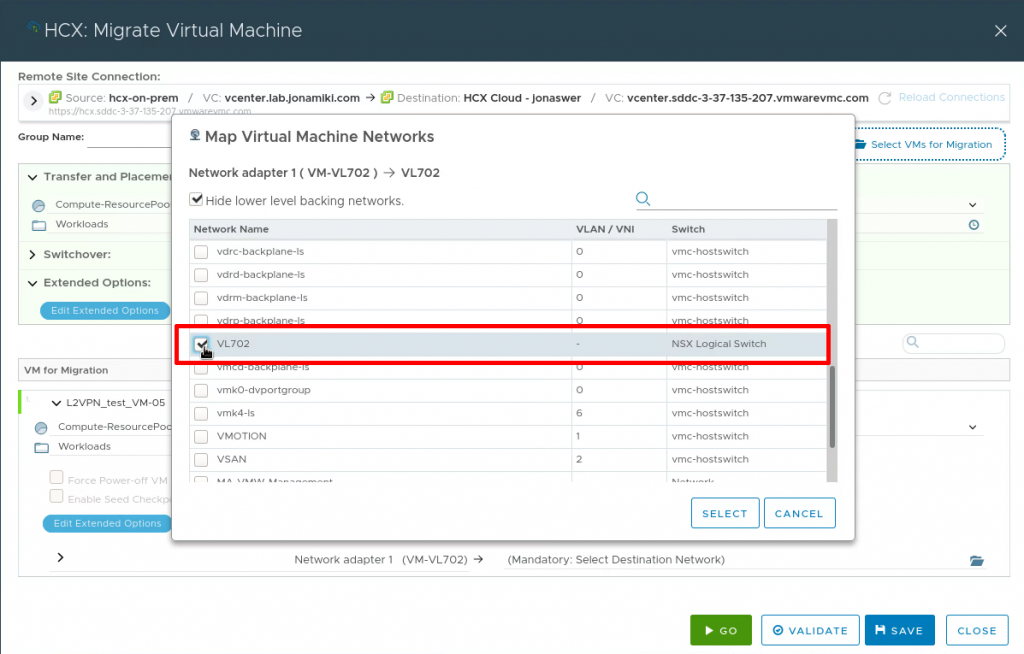

Selecting our L2 extended network segment as the target network for the virtual machine

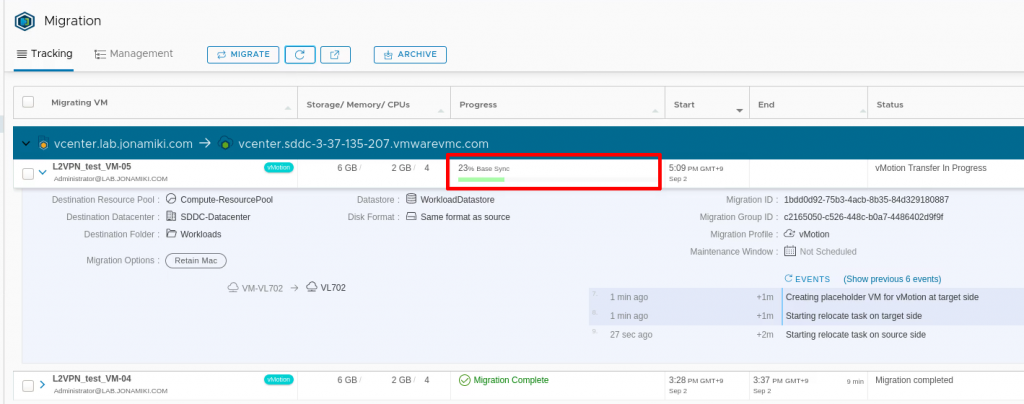

Monitoring the migration from the HCX console

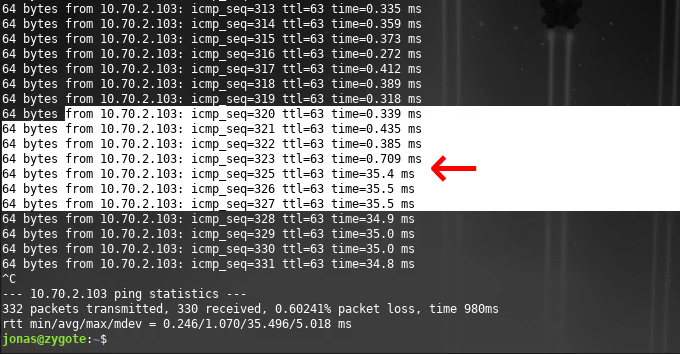

If the VM is pinged continuously during migration: Once the migration is complete the ping time will go from sub-millisecond to around 35 ms (migrating from Tokyo to Seoul in this case)

Throughout migration – and of course after the migration is done – our Xonotic game session is still running, albeit with a new 35ms lag after migration 🙂
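If you log the ping round-trip times during the migration, the moment of cutover shows up as a jump in latency. A rough sketch for spotting it in a list of samples is shown below. The function name and the 10 ms threshold are my own choices, and a single latency spike would also trigger it, so treat the result as a hint rather than proof.

```python
from statistics import median
from typing import Optional


def find_cutover(rtts_ms: list[float], jump_ms: float = 10.0) -> Optional[int]:
    """Return the index of the first sample that sits at least jump_ms above
    the median of all earlier samples, or None if there is no such sample."""
    for i in range(1, len(rtts_ms)):
        baseline = median(rtts_ms[:i])
        if rtts_ms[i] - baseline >= jump_ms:
            return i
    return None
```

With made-up samples such as `[0.4, 0.5, 0.4, 35.2, 36.0, 34.8]` the function points at index 3, the first reply that came back from the cloud side.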

Conclusion

That’s it – the network is now extended from on-prem. VMs can be migrated using vMotion via HCX or using HLM (Hybrid Linked Mode) with their existing IPs and uninterrupted service.

Any VMs migrated can be pinged throughout migration and if the “Enable TCP loose Setting” was checked any existing TCP sessions would continue uninterrupted.

Also, any VMs deployed to the extended network on the VMC on AWS side would be able to use DHCP, DNS, etc. served on-prem through the L2 tunnel.

If you followed along this far: Thank you and I hope you now have a fully working L2 extended network to the cloud!